Speeding Up Restores in Percona Backup for MongoDB

Percona

APRIL 26, 2023

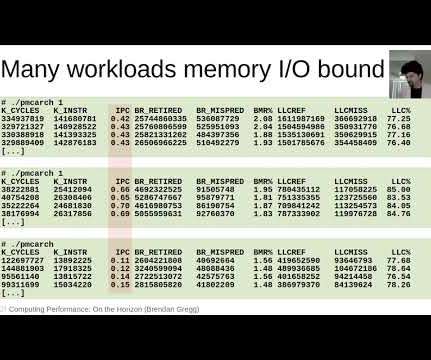

Bringing physical backups to Percona Backup for MongoDB (PBM) was a big step toward faster restores. The speed of a physical restore comes down to how fast we can copy (download) data from the remote storage. We aim to port it to Azure Blob and FileSystem storage types in subsequent releases. Let’s try.
