Seeing through hardware counters: a journey to threefold performance increase

The Netflix TechBlog

NOVEMBER 9, 2022

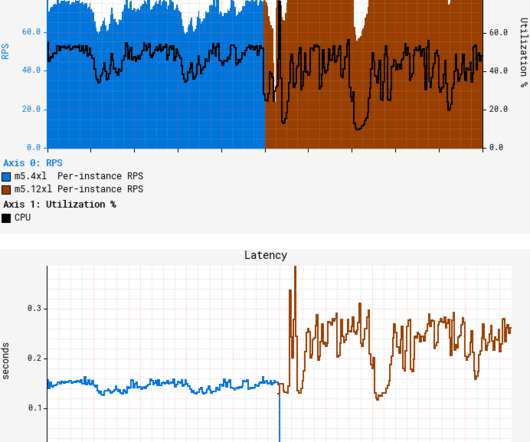

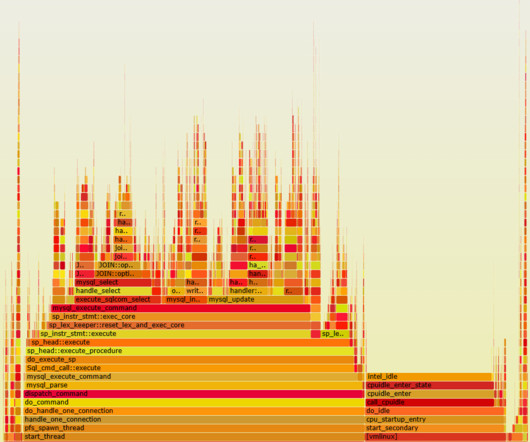

While we understand it’s virtually impossible to achieve a linear increase in throughput as the number of vCPUs grows, a near-linear increase is attainable. What’s worse, average latency degraded by more than 50%, with both CPU and latency patterns becoming more “choppy.” This was our starting point for troubleshooting.
