USENIX LISA2021 Computing Performance: On the Horizon

Brendan Gregg

JULY 4, 2021

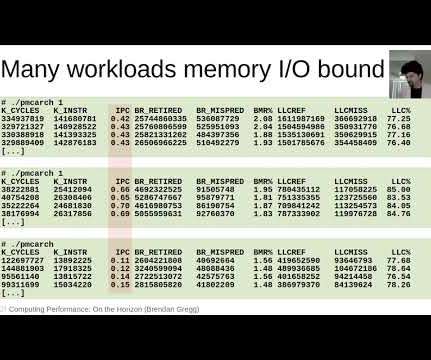

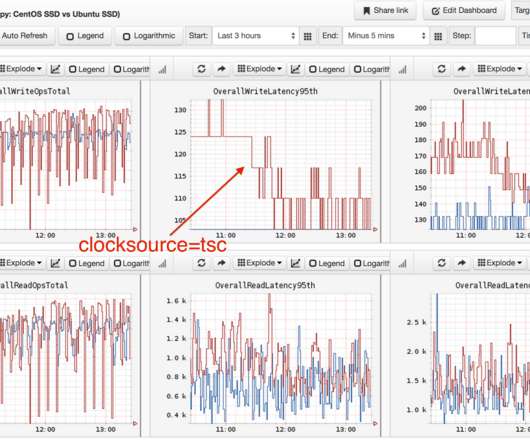

I summarized these topics and more in a plenary talk at USENIX LISA2021, including my own predictions (as a senior performance engineer) for the future of computing performance, with a focus on back-end servers. The talk was also a chance to discuss other things I've been working on, such as the present and future of hardware performance.