Migrating Netflix to GraphQL Safely

The Netflix TechBlog

JUNE 14, 2023

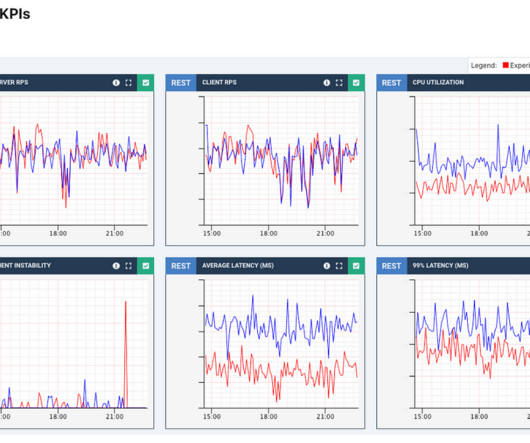

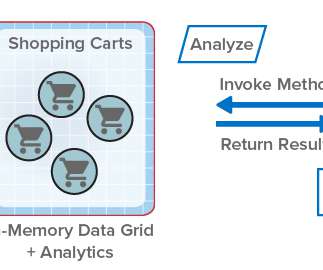

Before GraphQL: Monolithic Falcor API implemented and maintained by the API Team

Before moving to GraphQL, our API layer consisted of a monolithic server built with Falcor. A single API team maintained both the Java implementation of the Falcor framework and the API Server.

To launch Phase 1 safely, we used AB Testing.
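To make the AB testing idea concrete, here is a minimal sketch of how members might be split deterministically between the legacy Falcor path and the new GraphQL path. This is an illustration under stated assumptions, not a description of Netflix's allocation system: the function name `assign_cell`, the experiment name, the cell labels, and the hash-based bucketing scheme are all hypothetical.

```python
import hashlib

# Hypothetical number of buckets; finer granularity allows smaller ramp steps.
NUM_BUCKETS = 10_000


def assign_cell(member_id: str, experiment: str, graphql_fraction: float) -> str:
    """Deterministically assign a member to the 'graphql' or 'falcor' cell.

    The same (member_id, experiment) pair always lands in the same bucket,
    so a member keeps a consistent experience across requests.
    """
    if not 0.0 <= graphql_fraction <= 1.0:
        raise ValueError("graphql_fraction must be between 0.0 and 1.0")

    # Hash the experiment name together with the member id so that
    # different experiments produce independent splits.
    key = f"{experiment}:{member_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()

    # Map the first 8 bytes of the hash to a bucket in [0, NUM_BUCKETS).
    bucket = int.from_bytes(digest[:8], "big") % NUM_BUCKETS

    # Members whose bucket falls below the threshold get the new path.
    threshold = round(graphql_fraction * NUM_BUCKETS)
    return "graphql" if bucket < threshold else "falcor"
```

With a split like this, the share of traffic on the new path can be raised gradually by increasing `graphql_fraction`, and setting it back to `0.0` returns every member to the legacy path.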
