Consistent caching mechanism in Titus Gateway

The Netflix TechBlog

NOVEMBER 3, 2022

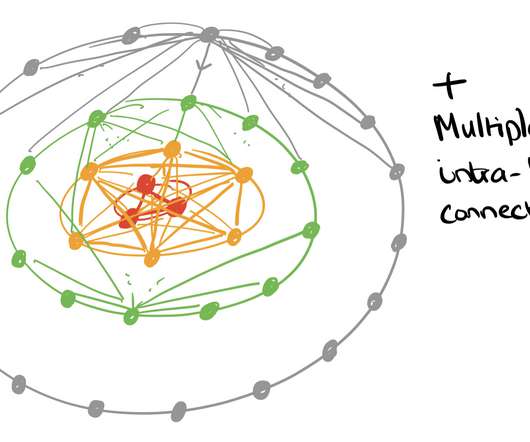

We introduce a caching mechanism in the API gateway layer that lets us offload processing from singleton, leader-elected controllers without giving up the strict data consistency guarantees that clients observe. When a new leader is elected, it loads all data from external storage. The cache is kept in sync with the current leader process.
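To make the idea concrete, here is a minimal, single-process sketch of a gateway cache that stays consistent with a leader. It is an illustration under assumptions, not the Titus implementation: the `Leader` and `GatewayCache` classes, the integer `version` counter, and the `events_since` method are all hypothetical names introduced for this example. The assumed rule is that the cache asks the leader only for its current version (a cheap call) and catches up to that version before serving a read.

```python
from dataclasses import dataclass, field


@dataclass
class Leader:
    """Hypothetical stand-in for the singleton, leader-elected controller."""
    storage: dict                                # external storage: key -> value
    version: int = 0                             # assumed monotonic change counter
    state: dict = field(default_factory=dict)
    log: list = field(default_factory=list)      # (version, key, value) events

    def __post_init__(self):
        # A newly elected leader loads all data from external storage.
        self.state = dict(self.storage)

    def write(self, key, value):
        # Persist the change, apply it in memory, and record an event.
        self.version += 1
        self.storage[key] = value
        self.state[key] = value
        self.log.append((self.version, key, value))

    def events_since(self, version):
        # Events the cache has not yet applied.
        return [event for event in self.log if event[0] > version]


class GatewayCache:
    """Hypothetical gateway-side cache kept in sync with the current leader."""

    def __init__(self, leader):
        self.leader = leader
        self.state = dict(leader.state)          # initial snapshot
        self.version = leader.version

    def sync(self):
        # Apply, in order, every event the cache has not seen yet.
        for version, key, value in self.leader.events_since(self.version):
            self.state[key] = value
            self.version = version

    def read(self, key):
        # Fetch only the leader's version, then catch up before answering,
        # so the reply reflects every write that completed before the read.
        target = self.leader.version
        if self.version < target:
            self.sync()
        return self.state.get(key)
```

In this sketch the leader still answers a small version query on every read, but the heavier work of serving the data moves to the cache. For example, after `leader.write("job-1", "Running")`, a call to `cache.read("job-1")` returns the new value rather than a stale one.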
