Netflix Cloud Packaging in the Terabyte Era

The Netflix TechBlog

SEPTEMBER 24, 2021

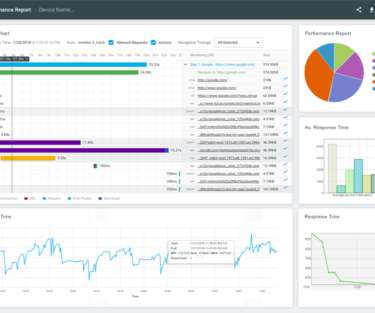

After content ingestion, inspection and encoding, the packaging step encapsulates encoded video and audio in codec agnostic container formats and provides features such as audio video synchronization, random access and DRM protection. Packaging has always been an important step in media processing. is 220 Mbps.

Let's personalize your content