Optimizing data warehouse storage

The Netflix TechBlog

DECEMBER 21, 2020

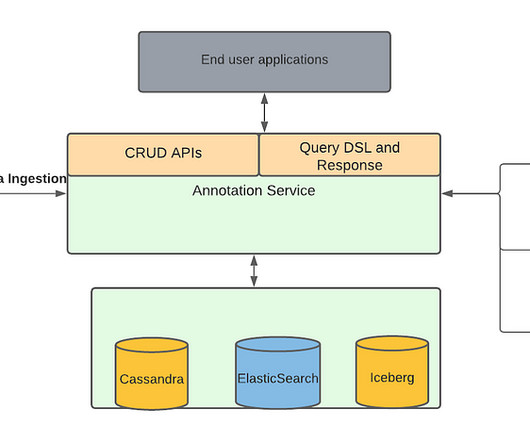

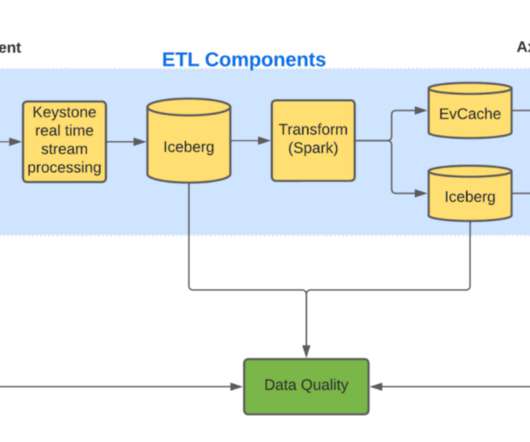

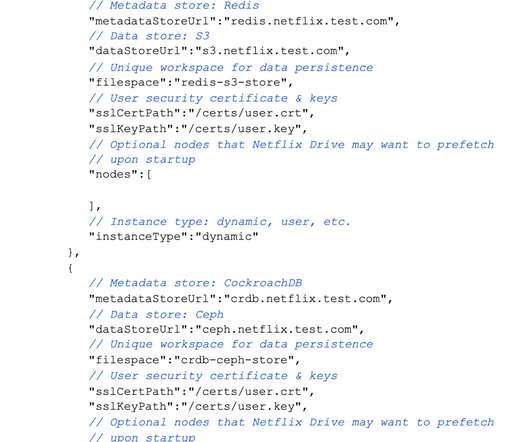

At this scale, optimizing the storage layout (records, objects, partitions) as data lands in our warehouse yields significant performance and cost benefits. We built AutoOptimize to optimize the data and metadata storage layout efficiently and transparently while maximizing those benefits.
