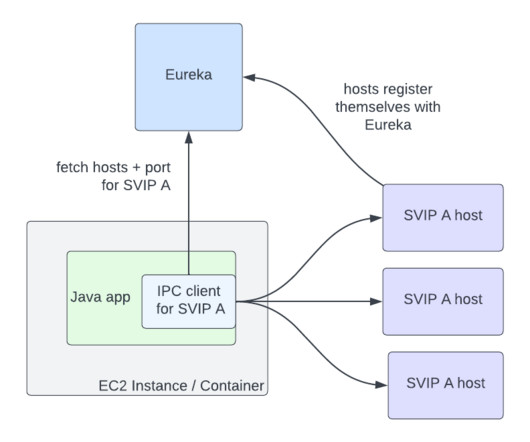

Zero Configuration Service Mesh with On-Demand Cluster Discovery

The Netflix TechBlog

AUGUST 29, 2023

These design principles led us to client-side load-balancing, and the 2012 Christmas Eve outage reinforced that decision. There is a downside to fetching this data on demand: it adds latency to the first request to a cluster.
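To make the first-request cost concrete, here is a minimal sketch of on-demand lookup with caching. It is an illustration under stated assumptions, not the implementation described in this post: the `OnDemandClusterCache` class, the `fake_control_plane` function, and the endpoint addresses are all hypothetical names invented for the example.

```python
from typing import Callable, Dict, List


class OnDemandClusterCache:
    """Fetches a cluster's endpoints the first time they are requested, then caches them."""

    def __init__(self, fetch_endpoints: Callable[[str], List[str]]) -> None:
        # fetch_endpoints is a hypothetical stand-in for a control-plane lookup.
        self._fetch_endpoints = fetch_endpoints
        self._endpoints: Dict[str, List[str]] = {}
        self.fetch_count = 0  # number of discovery round trips made so far

    def endpoints_for(self, cluster: str) -> List[str]:
        if cluster not in self._endpoints:
            # Cache miss: only this first request pays the discovery round trip.
            self.fetch_count += 1
            self._endpoints[cluster] = self._fetch_endpoints(cluster)
        return self._endpoints[cluster]


def fake_control_plane(cluster: str) -> List[str]:
    # Made-up addresses; a real lookup would go over the network.
    return [f"{cluster}-1.internal:8080", f"{cluster}-2.internal:8080"]


cache = OnDemandClusterCache(fake_control_plane)
first = cache.endpoints_for("payments")   # triggers the lookup
second = cache.endpoints_for("payments")  # served from the cache
```

In this sketch only the first call for a given cluster reaches the lookup function, so the extra latency is paid once per cluster rather than on every request.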
