Supporting Diverse ML Systems at Netflix

The Netflix TechBlog

MARCH 7, 2024

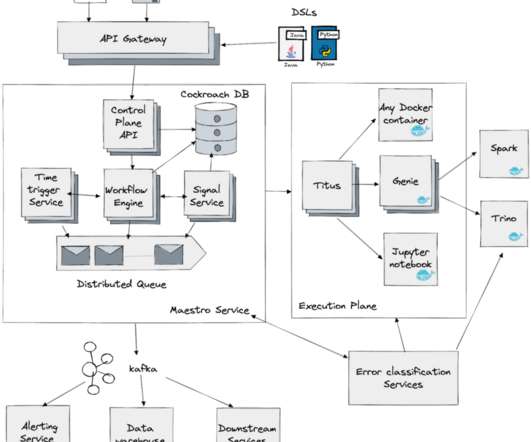

Occasionally, these use cases involve terabytes of data, so we have to pay attention to performance. By targeting @titus, Metaflow tasks benefit from these battle-hardened features out of the box, with no in-depth technical knowledge or engineering effort required from ML engineers or data scientists.
