3 Performance Tricks for Dealing With Big Data Sets

DZone

AUGUST 21, 2021

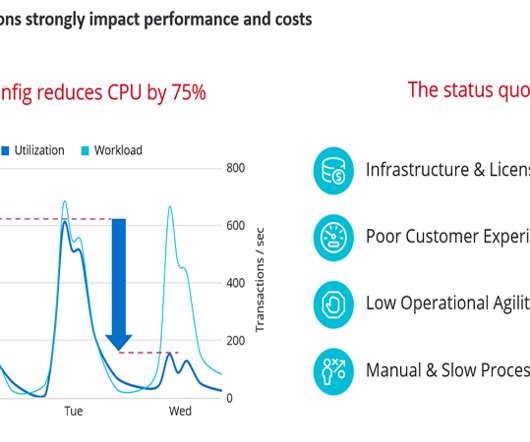

This article describes three tricks that I used when dealing with big data sets (on the order of 10 million records) and that improved performance dramatically. Trick 1: CLOB Instead of Result Set.
