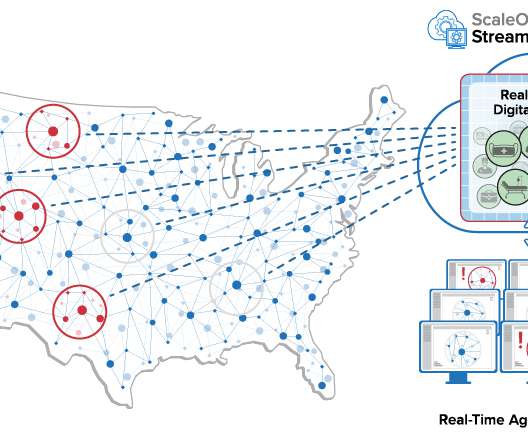

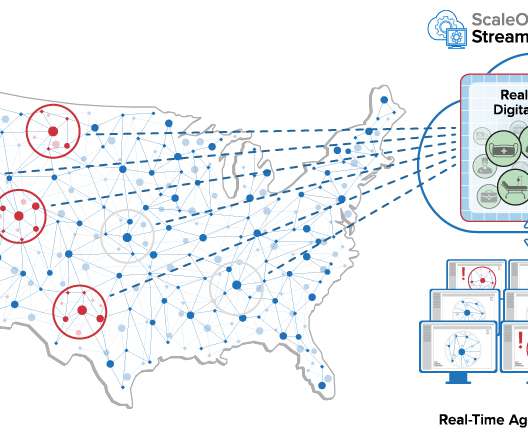

In-Stream Big Data Processing

Highly Scalable

AUGUST 20, 2013

The shortcomings of batch-oriented data processing were widely recognized by the Big Data community long ago. It became clear that real-time query processing and in-stream processing are an immediate need in many practical applications.
