Tech Transforms podcast: Energy department CIO talks national cybersecurity strategy

Dynatrace

SEPTEMBER 20, 2023

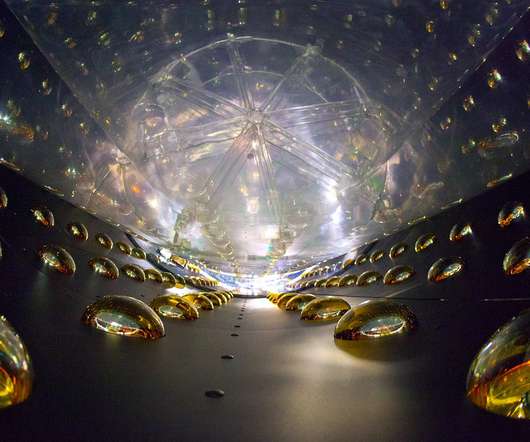

“They’re really focusing on hardware and software systems together,” Dunkin said. “How do you make hardware and software both secure by design?” The DOE supports the national cybersecurity strategy’s collective defense initiatives … government as a whole. Tune in to the full episode for more insights from Ann Dunkin.

Let's personalize your content