Leveraging Infrastructure as Code for Data Engineering Projects: A Comprehensive Guide

DZone

JULY 3, 2023

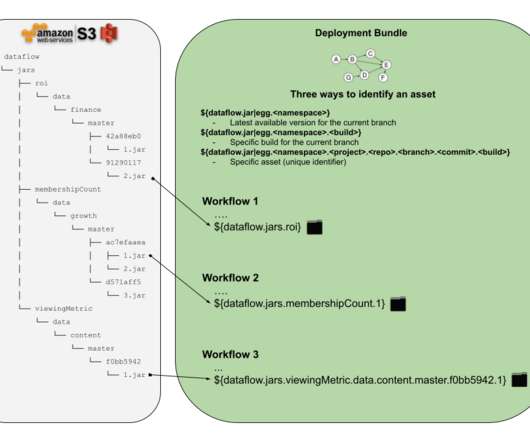

Data engineering projects often require setting up and managing complex infrastructure that supports data processing, storage, and analysis. In this article, we explore the benefits of using Infrastructure as Code (IaC) for data engineering projects and provide detailed implementation steps to get started.
