Multi-CDN Strategy: Benefits and Best Practices

IO River

NOVEMBER 2, 2023

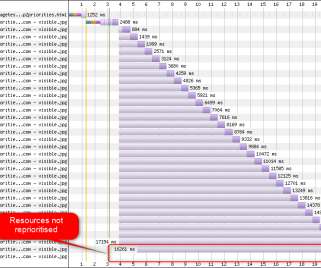

A CDN (Content Delivery Network) is a network of geographically distributed servers that brings web content closer to end users, with the goal of high availability, optimized performance, and low latency. Multi-CDN is the practice of using several CDN providers simultaneously.
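To make the idea concrete, here is a minimal sketch of how a multi-CDN setup might choose which provider serves a request. It is an illustration under stated assumptions, not a description of any particular vendor's routing logic: the provider names, hostnames, health flags, and latency figures are all hypothetical, and real deployments typically make this decision at the DNS or traffic-management layer rather than in application code.

```python
from dataclasses import dataclass


@dataclass
class CdnProvider:
    name: str          # hypothetical provider label
    base_url: str      # hypothetical hostname serving the same content
    healthy: bool      # assumed to come from an external health check
    latency_ms: float  # assumed to come from recent measurements


def pick_provider(providers):
    """Return the healthy provider with the lowest measured latency."""
    candidates = [p for p in providers if p.healthy]
    if not candidates:
        raise RuntimeError("no healthy CDN provider available")
    return min(candidates, key=lambda p: p.latency_ms)


def asset_url(providers, path):
    """Build the URL for an asset on the currently preferred provider."""
    provider = pick_provider(providers)
    return f"{provider.base_url}/{path.lstrip('/')}"


# Example data: all values are made up for illustration.
providers = [
    CdnProvider("cdn-a", "https://cdn-a.example.com", healthy=True, latency_ms=42.0),
    CdnProvider("cdn-b", "https://cdn-b.example.com", healthy=True, latency_ms=31.0),
    CdnProvider("cdn-c", "https://cdn-c.example.com", healthy=False, latency_ms=12.0),
]

print(asset_url(providers, "/img/logo.png"))
```

In this sketch the unhealthy provider is skipped even though its latency figure is the lowest, which reflects the availability side of the definition above: with more than one provider in play, traffic can move away from one that is failing.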
