Service level objectives: 5 SLOs to get started

Dynatrace

JUNE 1, 2023

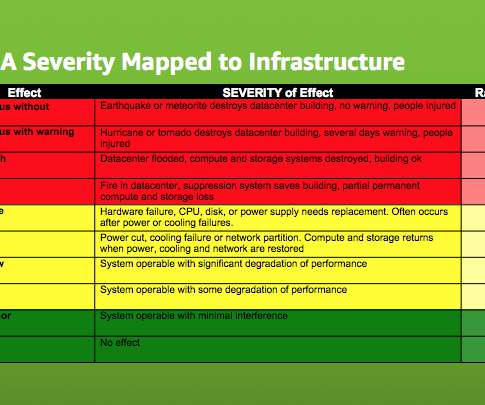

These organizations rely heavily on performance, availability, and user satisfaction to drive sales and retain customers.

Availability

An availability SLO quantifies the expected level of service availability over a specific time period. Availability is typically expressed in nines, such as a service being available 99.9% or 99.99% of the time.
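To make the "nines" concrete, an availability target can be translated into the amount of downtime it permits over a given period. The following is a minimal Python sketch of that arithmetic, not a Dynatrace API; the function names `allowed_downtime` and `measured_availability` and the 30-day window are assumptions chosen for illustration.

```python
from datetime import timedelta


def allowed_downtime(target_percent: float, window: timedelta) -> timedelta:
    """Return the downtime permitted by an availability target over a window.

    Hypothetical helper for illustration: target_percent is the SLO target,
    for example 99.9, and window is the evaluation period.
    """
    if not 0 <= target_percent <= 100:
        raise ValueError("target_percent must be between 0 and 100")
    unavailable_fraction = (100 - target_percent) / 100
    return timedelta(seconds=window.total_seconds() * unavailable_fraction)


def measured_availability(uptime: timedelta, window: timedelta) -> float:
    """Return availability as a percentage of the window the service was up."""
    if window.total_seconds() <= 0:
        raise ValueError("window must be positive")
    return 100 * uptime.total_seconds() / window.total_seconds()


if __name__ == "__main__":
    window = timedelta(days=30)  # assumed evaluation period
    for target in (99.9, 99.99):
        budget = allowed_downtime(target, window)
        print(f"{target}% over 30 days allows {budget.total_seconds() / 60:.2f} minutes of downtime")
```

Under these assumptions, a 99.9% target over 30 days leaves roughly 43 minutes of permitted downtime, while 99.99% leaves only about 4.3 minutes, which is why each additional nine is considerably harder to meet.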
