How to use Server Timing to get backend transparency from your CDN

Speed Curve

FEBRUARY 5, 2024

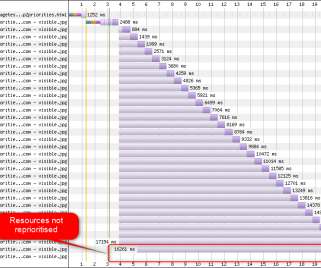

Charlie Vazac introduced Server Timing in a Performance Calendar post circa 2018. Caching the base page (the HTML document) at the CDN is common, and it should reduce backend times. One of the key things to understand from your CDN is cache hit versus cache miss: was the resource served from the edge, or did the request have to go to the origin?
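To make the hit-or-miss question concrete, here is a minimal sketch of reading a `Server-Timing` response header and pulling out the cache status. The metric names (`cdn-cache`, `edge`, `origin`) and the example header value are assumptions for illustration; each CDN chooses its own names, so check what yours actually sends. The parser is deliberately simple and does not handle commas or semicolons inside quoted descriptions.

```python
def parse_server_timing(header):
    """Parse a Server-Timing header value into
    {metric_name: {"dur": float or None, "desc": str or None}}."""
    metrics = {}
    # Metrics are comma-separated; parameters within a metric use semicolons.
    for entry in header.split(","):
        parts = [p.strip() for p in entry.split(";")]
        name = parts[0]
        if not name:
            continue
        metric = {"dur": None, "desc": None}
        for param in parts[1:]:
            key, _, value = param.partition("=")
            key = key.strip().lower()
            value = value.strip().strip('"')
            if key == "dur":
                try:
                    metric["dur"] = float(value)
                except ValueError:
                    pass  # leave dur as None if it is not a number
            elif key == "desc":
                metric["desc"] = value
        metrics[name] = metric
    return metrics


def cache_status(metrics, metric_name="cdn-cache"):
    """Return the cache status (e.g. HIT or MISS) from the description of
    a hypothetical cache metric, or UNKNOWN if it is not present."""
    entry = metrics.get(metric_name)
    if entry is None or entry["desc"] is None:
        return "UNKNOWN"
    return entry["desc"].upper()


# Hypothetical header value, not taken from any specific CDN's documentation.
example = "cdn-cache; desc=MISS, edge; dur=1, origin; dur=245"
metrics = parse_server_timing(example)
print(cache_status(metrics))      # cache status reported by the CDN
print(metrics["origin"]["dur"])   # time attributed to the origin, in ms
```

In the browser, the same values are exposed without manual parsing through the `serverTiming` property of navigation and resource timing entries, where each item carries a `name`, `duration`, and `description`.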
