Bending pause times to your will with Generational ZGC

The Netflix TechBlog

MARCH 5, 2024

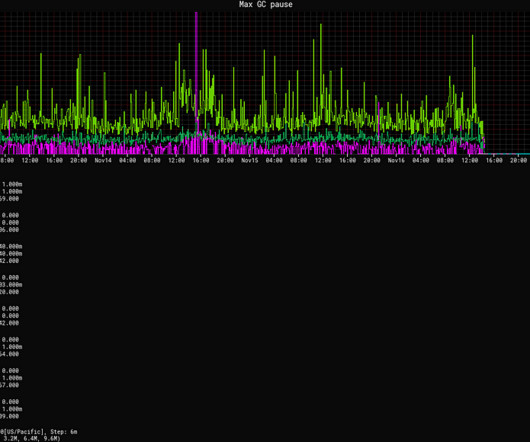

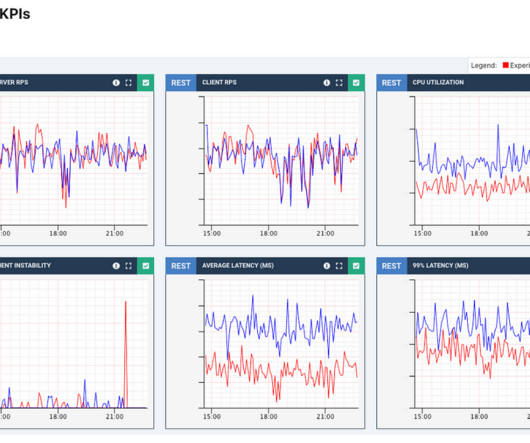

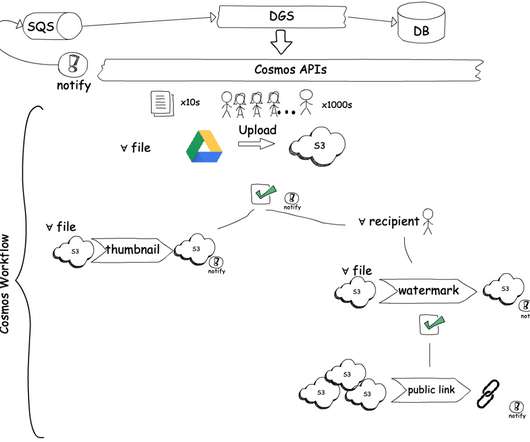

More than half of our critical streaming video services are now running on JDK 21 with Generational ZGC, so it’s a good time to talk about our experience and the benefits we’ve seen.

Reduced tail latencies

In both our gRPC and DGS Framework services, GC pauses are a significant source of tail latencies.
