Seeing through hardware counters: a journey to threefold performance increase

The Netflix TechBlog

NOVEMBER 9, 2022

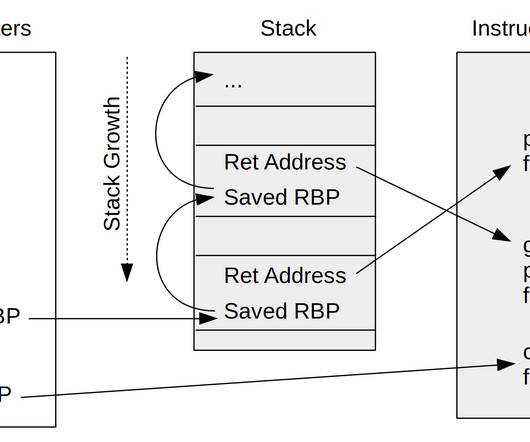

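While we understand it’s virtually impossible to achieve a linear increase in throughput as the number of vCPUs grows, a near-linear increase is attainable.

We also see much higher L1 cache activity, combined with a 4x higher count of MACHINE_CLEARS (the hardware event that counts machine clears, i.e. full pipeline flushes).

A cache line is a concept similar to a memory page: a fixed-size, aligned block, here the unit in which data moves between memory and the CPU caches. … Thread 0’s cache in this example.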
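To make the page analogy concrete, the sketch below maps a byte address to the cache line and to the memory page that contain it: in both cases the index is the address divided by a fixed block size. It assumes 64-byte cache lines and 4 KiB pages (common on x86-64, but not universal), and the helper names and example addresses are hypothetical. It also shows why two threads writing neighbouring fields can contend on the same line (false sharing) even though they never touch the same bytes. This is an illustration of the concept, not the method used in the investigation.

```python
# Assumptions: 64-byte cache lines and 4 KiB pages (typical for x86-64, not universal).
CACHE_LINE_SIZE = 64
PAGE_SIZE = 4096


def cache_line_index(addr: int, line_size: int = CACHE_LINE_SIZE) -> int:
    """Return the index of the cache line containing byte address `addr`."""
    return addr // line_size


def page_index(addr: int, page_size: int = PAGE_SIZE) -> int:
    """Return the index of the memory page containing byte address `addr`."""
    return addr // page_size


def share_cache_line(addr_a: int, addr_b: int, line_size: int = CACHE_LINE_SIZE) -> bool:
    """True if two byte addresses fall in the same cache line.

    If threads on different cores write to distinct fields that share a line,
    each write invalidates the other core's copy of the whole line (false sharing).
    """
    return cache_line_index(addr_a, line_size) == cache_line_index(addr_b, line_size)


if __name__ == "__main__":
    base = 0x1000                      # hypothetical line- and page-aligned object address
    field_a = base + 0                 # field written by thread 0
    field_b = base + 8                 # neighbouring 8-byte field written by thread 1
    padded_b = base + CACHE_LINE_SIZE  # the same field after padding it onto the next line
    print(share_cache_line(field_a, field_b))           # True: false-sharing candidate
    print(share_cache_line(field_a, padded_b))          # False: padding separates the lines
    print(page_index(field_a) == page_index(padded_b))  # True: still the same 4 KiB page
```

The last line shows the difference in granularity: padding by one cache line is enough to stop two cores from invalidating each other's copy, while both fields still live on the same page.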
