Implementing service-level objectives to improve software quality

Dynatrace

DECEMBER 27, 2022

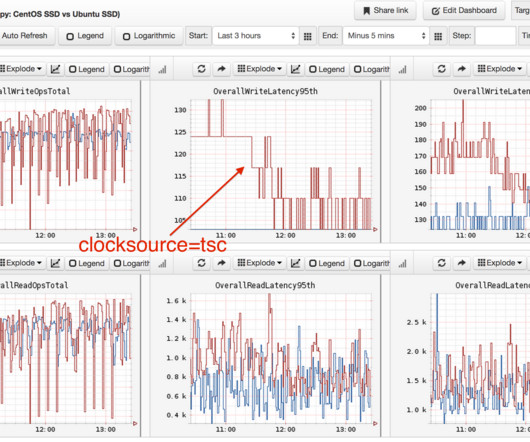

With SLOs, organizations can ensure that services conform to pre-established benchmarks. Implementing SLOs can improve both software development processes and application performance: SLOs raise software quality and promote automation. Two common SLO targets are latency, the time it takes to serve a request, and reliability.
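Because latency is one of the quantities an SLO typically targets, a short sketch of checking a latency SLO against measured requests may make the benchmark idea concrete. The `LatencySLO` type, the helper names, the 300 ms threshold, and the 90% target are hypothetical illustrations chosen for this example. They are not Dynatrace's API or a recommended target.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LatencySLO:
    """Hypothetical latency SLO: at least `target` (a fraction, e.g. 0.9)
    of requests must be served within `threshold_ms` milliseconds."""
    threshold_ms: float
    target: float


def compliance(latencies_ms: Sequence[float], slo: LatencySLO) -> float:
    """Return the fraction of requests served within the SLO threshold."""
    if not latencies_ms:
        raise ValueError("need at least one request to evaluate the SLO")
    good = sum(1 for latency in latencies_ms if latency <= slo.threshold_ms)
    return good / len(latencies_ms)


def meets_slo(latencies_ms: Sequence[float], slo: LatencySLO) -> bool:
    """True if the measured compliance reaches the SLO target."""
    return compliance(latencies_ms, slo) >= slo.target


def error_budget_remaining(latencies_ms: Sequence[float], slo: LatencySLO) -> float:
    """Share of the allowed slow-request fraction (1 - target) not yet used.
    1.0 means no slow requests; 0.0 means the budget is exhausted;
    negative values mean the SLO has been violated."""
    budget = 1.0 - slo.target
    slow_fraction = 1.0 - compliance(latencies_ms, slo)
    return (budget - slow_fraction) / budget


if __name__ == "__main__":
    slo = LatencySLO(threshold_ms=300, target=0.9)
    # Hypothetical request latencies in milliseconds; one request is too slow.
    latencies = [120, 180, 250, 90, 310, 200, 150, 170, 140, 260]
    print(compliance(latencies, slo))  # 0.9
    print(meets_slo(latencies, slo))   # True
```

Expressing the SLO as data rather than as ad hoc checks is what makes automation possible: a pipeline or alerting job could evaluate `meets_slo` on each window of measurements and flag a release when the remaining error budget drops below zero.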
