Consistent caching mechanism in Titus Gateway

The Netflix TechBlog

NOVEMBER 3, 2022

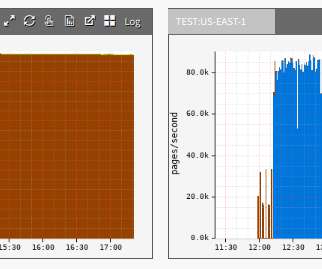

In the time since it was first presented as an advanced Mesos framework, Titus has transparently evolved from running on top of Mesos to running on Kubernetes, while handling an ever-increasing volume of containers. This blog post describes how the current iteration of Titus handles high API call volumes by scaling out horizontally.