Edgar: Solving Mysteries Faster with Observability

The Netflix TechBlog

SEPTEMBER 2, 2020

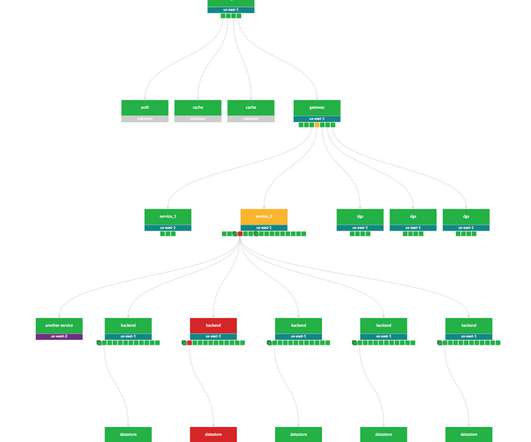

While this abundance of dashboards and information is by no means unique to Netflix, it certainly holds true within our microservices architecture. Distributed tracing is the process of generating, transporting, storing, and retrieving traces in a distributed system. Is this an anomaly or are we dealing with a pattern?
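To make the four stages in that definition of distributed tracing concrete (generating, transporting, storing, and retrieving traces), here is a minimal in-memory sketch. It is not how Edgar or any Netflix system is implemented. The `Span` fields, the `TraceStore` class, the JSON wire format, and the service names are all hypothetical and chosen only for illustration.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    """One unit of work inside a trace (hypothetical, simplified fields)."""
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    service: str
    operation: str
    start_ms: float
    duration_ms: float


def new_span(trace_id: str, service: str, operation: str,
             parent_id: Optional[str] = None, duration_ms: float = 0.0) -> Span:
    """Generate: record a span that shares the trace ID of its request."""
    return Span(trace_id, uuid.uuid4().hex[:16], parent_id,
                service, operation, time.time() * 1000, duration_ms)


def encode(span: Span) -> str:
    """Transport: serialize a span as it might be sent to a collector."""
    return json.dumps(asdict(span))


class TraceStore:
    """Store and retrieve: an in-memory stand-in for a trace backend."""

    def __init__(self) -> None:
        self._by_trace: Dict[str, List[Span]] = {}

    def ingest(self, payload: str) -> None:
        # Decode the transported payload and group it under its trace ID.
        span = Span(**json.loads(payload))
        self._by_trace.setdefault(span.trace_id, []).append(span)

    def get_trace(self, trace_id: str) -> List[Span]:
        # Return every span recorded for one request, ordered by start time.
        return sorted(self._by_trace.get(trace_id, []), key=lambda s: s.start_ms)


def demo() -> List[Span]:
    """Trace one request that crosses two hypothetical services."""
    store = TraceStore()
    trace_id = uuid.uuid4().hex
    root = new_span(trace_id, "api-gateway", "GET /home", duration_ms=120.0)
    child = new_span(trace_id, "recommendations", "fetch_rows",
                     parent_id=root.span_id, duration_ms=85.0)
    for span in (root, child):
        store.ingest(encode(span))
    return store.get_trace(trace_id)
```

Calling `demo()` returns both spans for the request. The shared `trace_id` is what lets a single lookup reassemble work done by separate services, and `parent_id` records which call triggered which.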
