Current status, needs, and challenges in Heterogeneous and Composable Memory from the HCM workshop (HPCA’23)

ACM SIGARCH

MAY 31, 2023

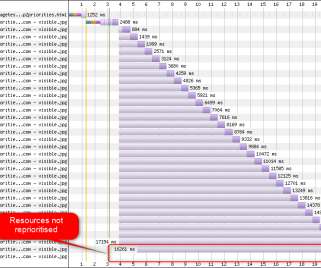

Heterogeneous and Composable Memory (HCM) offers a feasible solution for terabyte- or petabyte-scale systems, addressing the performance and efficiency demands of emerging big-data applications. This article lays out the ideas and discussions shared at the workshop. Figure 1: Heterogeneous memory with CXL (source: Maruf et al.,

Let's personalize your content