Evolving from Rule-based Classifier: Machine Learning Powered Auto Remediation in Netflix Data…

The Netflix TechBlog

MARCH 4, 2024

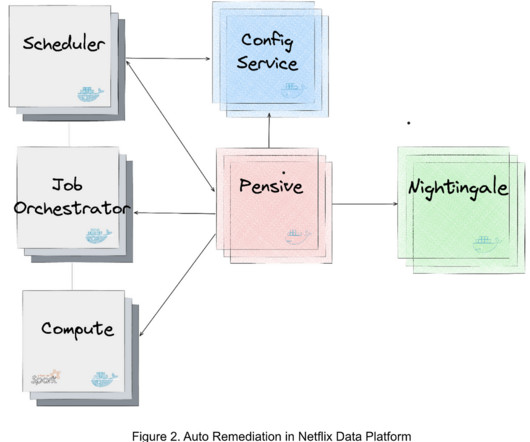

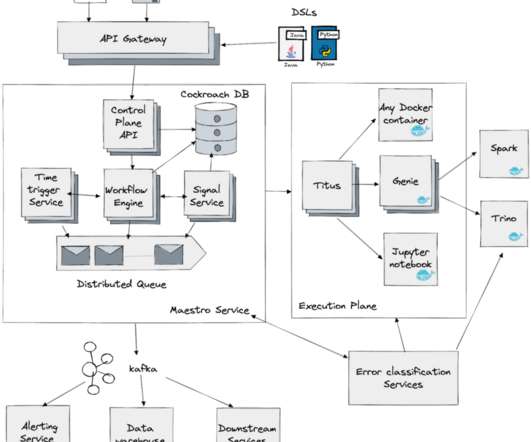

Operational automation–including but not limited to, auto diagnosis, auto remediation, auto configuration, auto tuning, auto scaling, auto debugging, and auto testing–is key to the success of modern data platforms. Auto Remediation generates recommendations by considering both performance (i.e., Multi-objective optimizations.

Let's personalize your content