Building Netflix’s Distributed Tracing Infrastructure

The Netflix TechBlog

OCTOBER 19, 2020

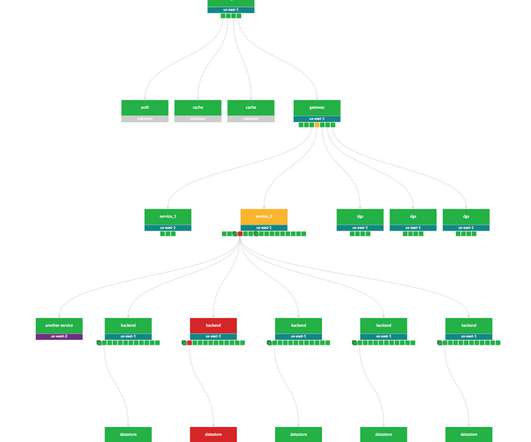

If we had an ID for each streaming session then distributed tracing could easily reconstruct session failure by providing service topology, retry and error tags, and latency measurements for all service calls. Our trace data collection agent transports traces to Mantis job cluster via the Mantis Publish library. What’s next?

Let's personalize your content