Percentiles don’t work: Analyzing the distribution of response times for web services

Adrian Cockcroft

JANUARY 29, 2023

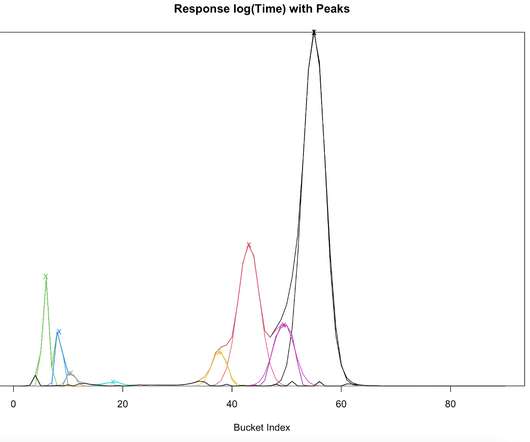

Percentiles alone give you no way to model how much more traffic you can send to a system before it exceeds its SLA. Every opportunity for delay, whether from doing more work than the best case or from waiting longer than the best case, increases the latency. These delays all add up and create a long tail. Mu (μ) is the mean latency of each component.
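To make the "delays add up" point concrete, here is a minimal simulation sketch. It assumes each request passes through three components in series and that each component's latency is exponentially distributed around its own mean μ. The exponential shape, the three mean values, and the function names are illustrative assumptions, not something the article specifies.

```python
import math
import random
import statistics


def simulate_response_times(n_requests=20000,
                            component_means_ms=(2.0, 5.0, 10.0),
                            seed=42):
    """Return total latency per request as the sum of component latencies.

    Assumption: each component's latency is exponential with mean mu.
    The means here are hypothetical values chosen for illustration.
    """
    rng = random.Random(seed)
    return [
        sum(rng.expovariate(1.0 / mu) for mu in component_means_ms)
        for _ in range(n_requests)
    ]


def percentile(sorted_samples, p):
    """Nearest-rank percentile of an already sorted list (0 < p <= 100)."""
    k = max(0, math.ceil(p / 100 * len(sorted_samples)) - 1)
    return sorted_samples[k]


samples = sorted(simulate_response_times())
mean_ms = statistics.fmean(samples)
median_ms = percentile(samples, 50)
p99_ms = percentile(samples, 99)

print(f"mean   = {mean_ms:.1f} ms")
print(f"median = {median_ms:.1f} ms")
print(f"p99    = {p99_ms:.1f} ms")
```

Under these assumptions the mean of the total is simply the sum of the component means (17 ms here), while the median sits below the mean and the 99th percentile lands several times higher. That gap is the long tail: a handful of requests are unlucky in one or more components, and their delays accumulate.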
