Best practices and key metrics for improving mobile app performance

Dynatrace

DECEMBER 13, 2023

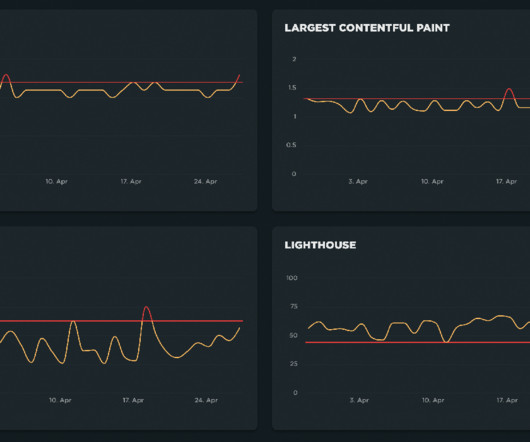

Organizations need adequate observability of their mobile apps so that the performance metrics they monitor are meaningful and actionable. Many common mobile app performance metrics serve as key performance indicators (KPIs) for user experience and satisfaction.
