What is infrastructure monitoring and why is it mission-critical in the new normal?

Dynatrace

NOVEMBER 2, 2020

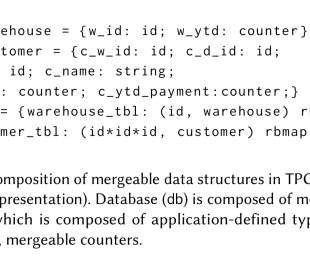

IT infrastructure is the heart of your digital business. It connects every area: physical and virtual servers, storage, databases, networks, and cloud services. Because these components depend on one another, infrastructure monitoring is essential to ensure they all work together across applications, operating systems, storage, servers, virtualization, and more.
