Formulating ‘Out of Memory Kill’ Prediction on the Netflix App as a Machine Learning Problem

The Netflix TechBlog

JULY 21, 2022

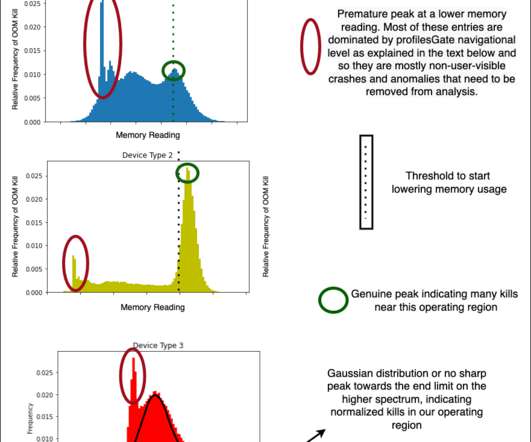

More importantly, the low resource availability or “out of memory” scenario is one of the common reasons for crashes/kills. We at Netflix, as a streaming service running on millions of devices, have a tremendous amount of data about device capabilities/characteristics and runtime data in our big data platform.

Let's personalize your content