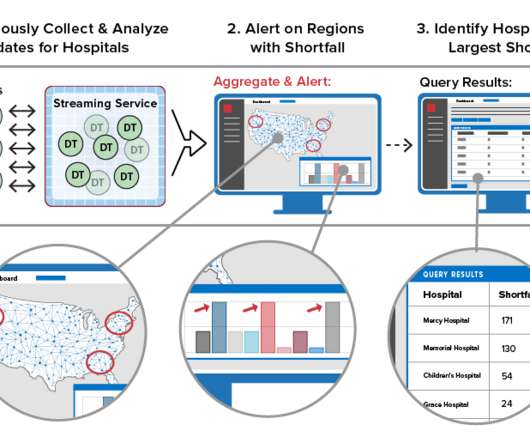

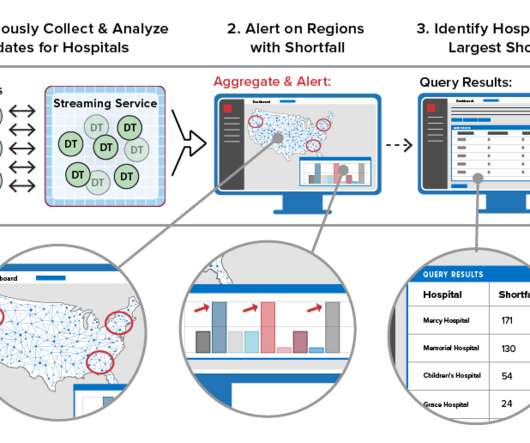

In-Stream Big Data Processing

Highly Scalable

AUGUST 20, 2013

The shortcomings of batch-oriented data processing were recognized by the Big Data community long ago. Moreover, techniques like Lambda Architecture [6, 7] were developed and adopted to combine batch and real-time processing efficiently.
