There are features many of us shy away from, like cursors, triggers, and dynamic SQL. There is no question they each have their use cases, but when we see a trigger with a cursor inside dynamic SQL, it can make us cringe (triple whammy).

Plan guides and sp_prepare are in a similar boat: if you saw me using one of them, you'd raise an eyebrow; if you saw me using them together, you'd probably check my temperature. But, as with cursors, triggers, and dynamic SQL, they have their use cases. And I recently came across a scenario where using them together was beneficial.

Background

We have a lot of data. And a lot of applications running against that data. Some of those applications are difficult or impossible to change, particularly off-the-shelf applications from a third party. So when their compiled application sends ad hoc queries to SQL Server, particularly as a prepared statement, and when we don't have the freedom to add or change indexes, several tuning opportunities are immediately off the table.

In this case, we had a table with a couple million rows. A simplified and sanitized version:

CREATE TABLE dbo.TheThings

(

ThingID bigint NOT NULL,

TypeID uniqueidentifier NOT NULL,

dt1 datetime NOT NULL DEFAULT sysutcdatetime(),

dt2 datetime NOT NULL DEFAULT sysutcdatetime(),

dt3 datetime NOT NULL DEFAULT sysutcdatetime(),

CONSTRAINT PK_TheThings PRIMARY KEY (ThingID)

);

CREATE INDEX ix_type ON dbo.TheThings(TypeID);

SET NOCOUNT ON;

GO

DECLARE @guid1 uniqueidentifier = 'EE81197A-B2EA-41F4-882E-4A5979ACACE4',

@guid2 uniqueidentifier = 'D989AADB-5C34-4EE1-9BE2-A88B8F74A23F';

INSERT dbo.TheThings(ThingID, TypeID)

SELECT TOP (1000) 1000 + ROW_NUMBER() OVER (ORDER BY name), @guid1

FROM sys.all_columns;

INSERT dbo.TheThings(ThingID, TypeID)

SELECT TOP (1) 2500, @guid2

FROM sys.all_columns;

INSERT dbo.TheThings(ThingID, TypeID)

SELECT TOP (1000) 3000 + ROW_NUMBER() OVER (ORDER BY name), @guid1

FROM sys.all_columns;

The prepared statement from the application looked like this (as seen in the plan cache):

(@P0 varchar(8000))SELECT * FROM dbo.TheThings WHERE TypeID = @P0

The problem is that, for some values of TypeID, there would be many thousands of rows. For other values, there would be fewer than 10. If the wrong plan is chosen (and reused) based on one parameter type, this can be trouble for the others. For the query that retrieves a handful of rows, we want an index seek with lookups to retrieve the additional non-covered columns, but for the query that returns 700K rows, we just want a clustered index scan. (Ideally, the index would cover, but this option wasn't in the cards this time.)

In practice, the application was always getting the scan variation, even though that was the one that was needed about 1% of the time. 99% of the queries were using a 2 million row scan when they could have used a seek + 4 or 5 lookups.

We could easily reproduce this in Management Studio by running this query:

DBCC FREEPROCCACHE;

DECLARE @P0 uniqueidentifier = 'EE81197A-B2EA-41F4-882E-4A5979ACACE4';

SELECT * FROM dbo.TheThings WHERE TypeID = @P0;

GO

DBCC FREEPROCCACHE;

DECLARE @P0 uniqueidentifier = 'D989AADB-5C34-4EE1-9BE2-A88B8F74A23F';

SELECT * FROM dbo.TheThings WHERE TypeID = @P0;

GO

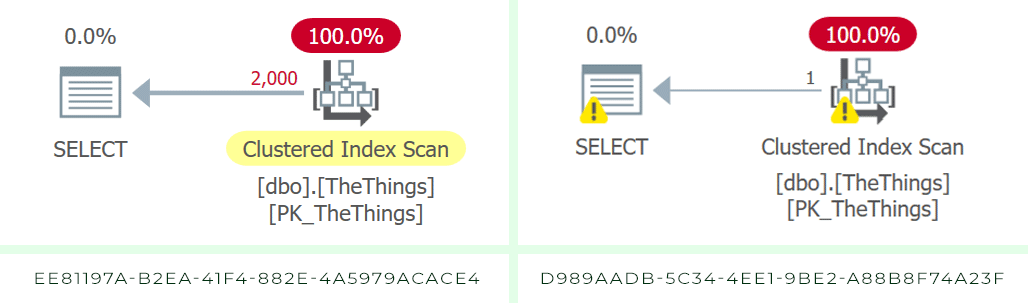

The plans came back like this:

The estimate in both cases was 1,000 rows; the warnings on the right are due to residual I/O.

The estimate in both cases was 1,000 rows; the warnings on the right are due to residual I/O.

How could we make sure the query made the right choice depending on the parameter? We'd need to make it recompile, without adding hints to the query, turning on trace flags, or changing database settings.

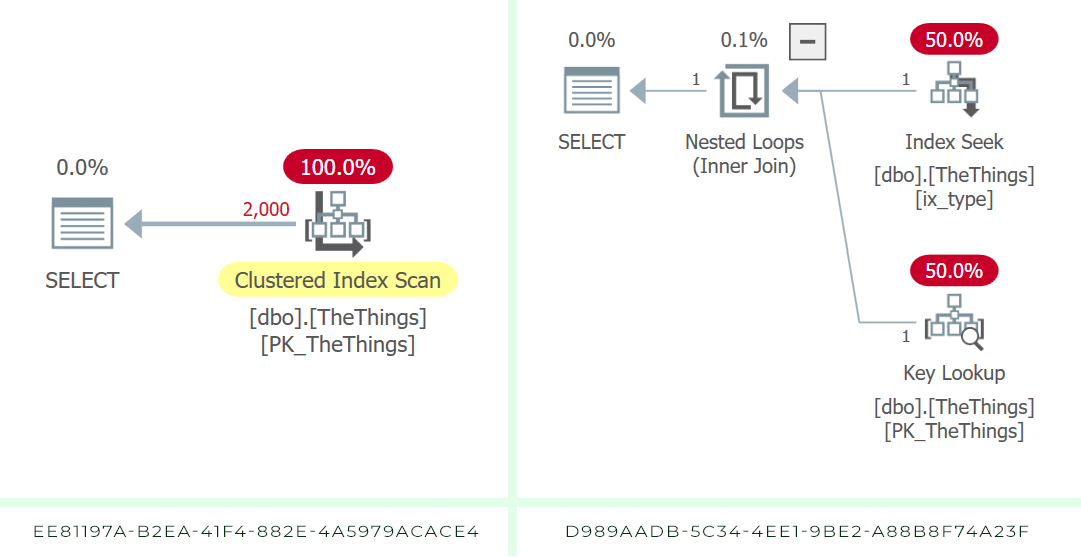

If I ran the queries independently using OPTION (RECOMPILE), I would get the seek when appropriate:

DBCC FREEPROCCACHE;

DECLARE @guid1 uniqueidentifier = 'EE81197A-B2EA-41F4-882E-4A5979ACACE4',

@guid2 uniqueidentifier = 'D989AADB-5C34-4EE1-9BE2-A88B8F74A23F';

SELECT * FROM dbo.TheThings WHERE TypeID = @guid1 OPTION (RECOMPILE);

SELECT * FROM dbo.TheThings WHERE TypeID = @guid2 OPTION (RECOMPILE);

With RECOMPILE, we get more accurate estimates, and a seek when we need one.

With RECOMPILE, we get more accurate estimates, and a seek when we need one.

But, again, we couldn't add the hint to the query directly.

Let's try a plan guide

Plenty of people warn against plan guides, but we were kind of in a corner here. We'd definitely prefer to change the query, or the indexes, if we could. But this might be the next best thing.

EXEC sys.sp_create_plan_guide

@name = N'TheThingGuide',

@stmt = N'SELECT * FROM dbo.TheThings WHERE TypeID = @P0',

@type = N'SQL',

@params = N'@P0 varchar(8000)',

@hints = N'OPTION (RECOMPILE)';

Seems straightforward; testing it is the problem. How do we simulate a prepared statement in Management Studio? How can we be sure the application is getting the guided plan, and that it's explicitly because of the plan guide?

If we try to simulate this query in SSMS, this gets treated as an ad hoc statement, not a prepared statement, and I could not get this to pick up the plan guide:

DECLARE @P0 varchar(8000) = 'D989AADB-5C34-4EE1-9BE2-A88B8F74A23F'; -- also tried uniqueidentifier

SELECT * FROM dbo.TheThings WHERE TypeID = @P0

Dynamic SQL also didn't work (this also got treated as an ad hoc statement):

DECLARE @sql nvarchar(max) = N'SELECT * FROM dbo.TheThings WHERE TypeID = @P0',

@params nvarchar(max) = N'@P0 varchar(8000)', -- also tried uniqueidentifier

@P0 varchar(8000) = 'D989AADB-5C34-4EE1-9BE2-A88B8F74A23F';

EXEC sys.sp_executesql @sql, @params, @P0;

And I couldn't do this, because it also wouldn't pick up the plan guide (parameterization takes over here, and I didn't have the freedom to change database settings, even if this were to be treated like a prepared statement):

SELECT * FROM TheThings WHERE TypeID = 'D989AADB-5C34-4EE1-9BE2-A88B8F74A23F';

I can't check the plan cache for the queries running from the app, since the cached plan doesn't indicate anything about plan guide usage (SSMS injects that information into the XML for you when you generate an actual plan). And if the query is truly observing the RECOMPILE hint I'm passing in to the plan guide, how could I ever see any evidence in the plan cache anyway?

Let's try sp_prepare

I've used sp_prepare less in my career than plan guides, and I wouldn't recommend using it for application code. (As Erik Darling points out, the estimate can be pulled from the density vector, not from sniffing the parameter.)

In my case, I don't want to use it for performance reasons, I want to use it (along with sp_execute) to simulate the prepared statement coming from the app.

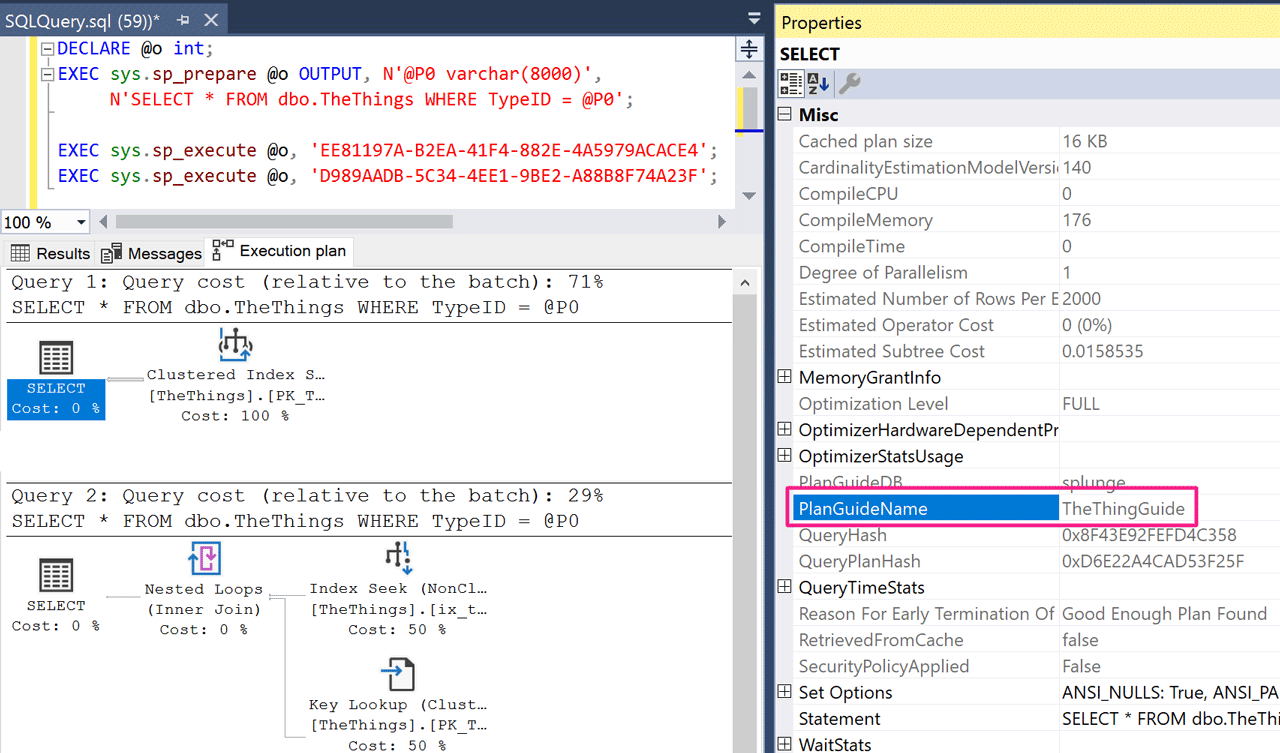

DECLARE @o int;

EXEC sys.sp_prepare @o OUTPUT, N'@P0 varchar(8000)',

N'SELECT * FROM dbo.TheThings WHERE TypeID = @P0';

EXEC sys.sp_execute @o, 'EE81197A-B2EA-41F4-882E-4A5979ACACE4'; -- PK scan

EXEC sys.sp_execute @o, 'D989AADB-5C34-4EE1-9BE2-A88B8F74A23F'; -- IX seek + lookup

SSMS shows us the plan guide was used in both cases.

SSMS shows us the plan guide was used in both cases.

You won't be able to check the plan cache for these results, because of the recompile. But in a scenario like mine, you should be able to see the effects in monitoring, explicitly checking via Extended Events, or observing the relief of the symptom that made you investigate this query in the first place (just be aware that average runtime, query stats etc. may be impacted by additional compilation).

Conclusion

This was one case where a plan guide was beneficial, and sp_prepare was useful in validating that it would work for the application. These aren't often useful, and less often so together, but for me it was an interesting combination. Even without the plan guide, if you want to use SSMS to simulate an app sending prepared statements, sp_prepare is your friend. (Also see sp_prepexec, which can be a shortcut if you're not trying to validate two different plans for the same query.)

Note that this exercise was not necessarily to get better performance all the time – it was to flatten performance variance. Recompiles are obviously not free, but I'll pay a small penalty to have 99% of my queries execute in 250ms and 1% execute in 5 seconds, rather than be stuck with a plan that is absolutely terrible for either 99% of the queries or 1% of the queries.