Measuring the importance of data quality to causal AI success

Dynatrace

JANUARY 4, 2024

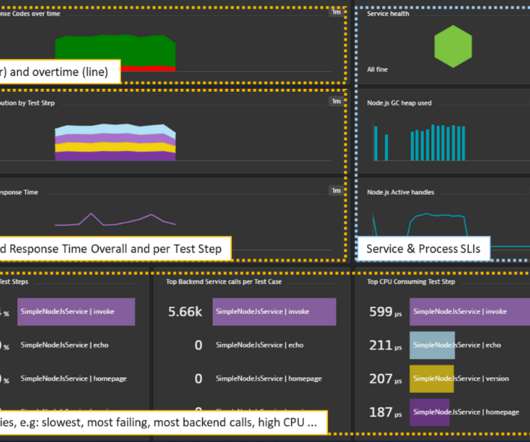

While this approach can be effective when the model is trained on a large amount of data, even in the best-case scenarios it amounts to an informed guess rather than a certainty. Success, however, depends on data quality: teams need to ensure the data is accurate and correctly represents real-world scenarios. The data must also be consistent.
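The article does not say how data quality should be measured. As an illustration only, here is a minimal sketch that scores a batch of metric samples on accuracy and consistency. Everything in it is an assumption made for this example, not Dynatrace's method: the hypothetical `Sample` schema (host, timestamp, CPU percentage), the hypothetical `quality_report` function, the 0 to 100 plausibility range used as a stand-in for accuracy, and the rule that one host must not report two different values for the same timestamp.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sample:
    """One metric observation. This schema is hypothetical."""
    host: Optional[str]
    timestamp: Optional[int]      # Unix epoch seconds
    cpu_percent: Optional[float]  # assumed valid range: 0 to 100


def quality_report(samples: list[Sample]) -> dict[str, float]:
    """Return the share of samples (0.0 to 1.0) passing each check."""
    total = len(samples)
    if total == 0:
        return {"accuracy": 0.0, "consistency": 0.0}

    # Accuracy, approximated as plausibility: all fields are populated
    # and the CPU value is physically possible.
    plausible = [
        s for s in samples
        if s.host is not None
        and s.timestamp is not None
        and s.cpu_percent is not None
        and 0.0 <= s.cpu_percent <= 100.0
    ]

    # Consistency: a plausible sample counts only if it agrees with the
    # first value seen for its (host, timestamp) pair.
    first_seen: dict[tuple[str, int], float] = {}
    consistent = 0
    for s in plausible:
        key = (s.host, s.timestamp)
        if first_seen.setdefault(key, s.cpu_percent) == s.cpu_percent:
            consistent += 1

    return {
        "accuracy": len(plausible) / total,
        "consistency": consistent / total,
    }


samples = [
    Sample("web-1", 1704326400, 42.0),
    Sample("web-1", 1704326400, 42.0),   # duplicate with the same value: consistent
    Sample("web-2", 1704326400, 180.0),  # impossible CPU percentage: fails accuracy
    Sample("web-3", 1704326400, 10.0),
    Sample("web-3", 1704326400, 95.0),   # conflicts with the earlier web-3 value
]
print(quality_report(samples))  # {'accuracy': 0.8, 'consistency': 0.6}
```

Expressing each check as a share of the whole batch keeps the scores comparable across data sources of different sizes. Real pipelines would likely add further checks, such as freshness or completeness, and use thresholds suited to their own data.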
