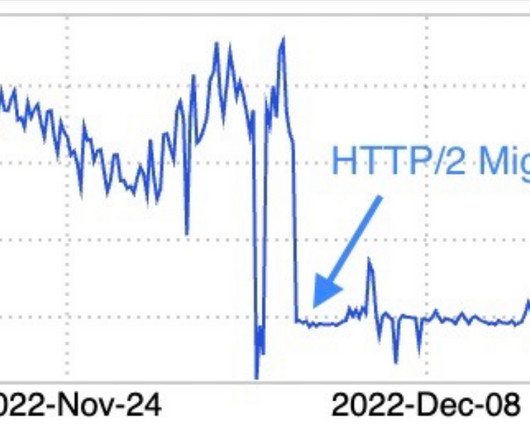

LinkedIn Migrates Espresso to HTTP2 and Reduces Connections by 88% and Latency by 75%

InfoQ

DECEMBER 4, 2023

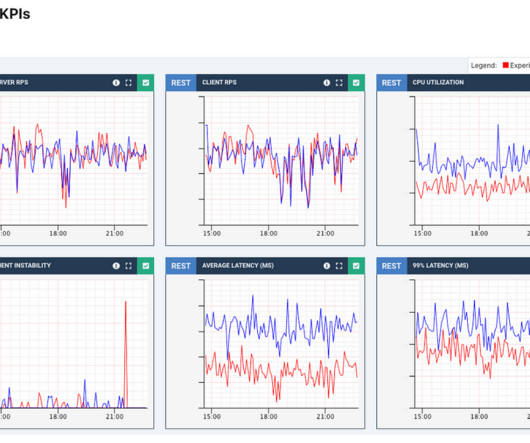

LinkedIn was able to dramatically improve the scalability and performance of its Espresso database by migrating it from HTTP1.1 to HTTP2, resulting in a reduction in the number of connections, latency, and garbage collection times. To achieve these gains, the team had to optimize Netty’s default HTTP2 stack to fit their needs.
