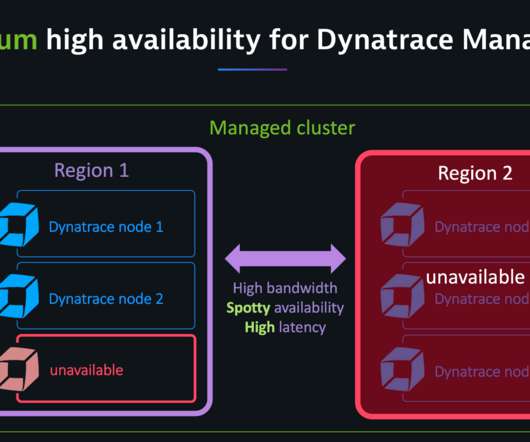

Dynatrace Managed turnkey Premium High Availability for globally distributed data centers (Early Adopter)

Dynatrace

JUNE 25, 2020

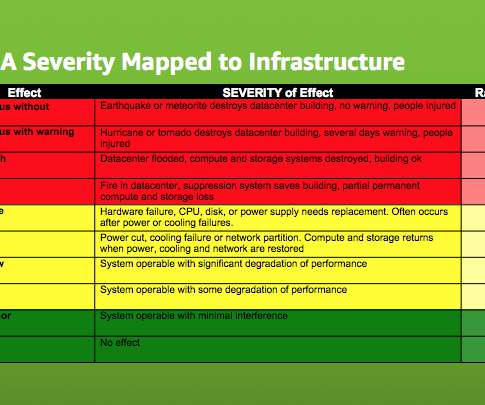

The network latency between cluster nodes should be around 10 ms or less. Our Premium High Availability comes with the following features:

- Active-active deployment model for optimum hardware utilization.
- Minimized cross-data center network traffic.
- Automatic recovery from outages of up to 72 hours.
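To make the latency guideline concrete, here is a minimal sketch of how you might check whether the link between two nodes stays within roughly 10 ms. It is not a Dynatrace tool: the function names, the host `node2.example.internal`, and port `443` are hypothetical placeholders, and timing a TCP handshake is only a rough approximation of one network round trip.

```python
import socket
import statistics
import time

# Budget taken from the text: "around 10 ms or less".
LATENCY_BUDGET_MS = 10.0


def tcp_connect_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Time a single TCP handshake to host:port, in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection is closed immediately; only the handshake is timed
    return (time.perf_counter() - start) * 1000.0


def within_budget(samples_ms, budget_ms=LATENCY_BUDGET_MS):
    """Return (ok, median_ms); ok is True when the median is within budget."""
    median_ms = statistics.median(samples_ms)
    return median_ms <= budget_ms, median_ms


if __name__ == "__main__":
    # Hypothetical node address and port; replace with values from your setup.
    samples = [tcp_connect_ms("node2.example.internal", 443) for _ in range(5)]
    ok, median_ms = within_budget(samples)
    print(f"median {median_ms:.1f} ms, within budget: {ok}")
```

The median is used rather than the mean so that a single slow handshake does not dominate the result.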
