Seeing through hardware counters: a journey to threefold performance increase

The Netflix TechBlog

NOVEMBER 9, 2022

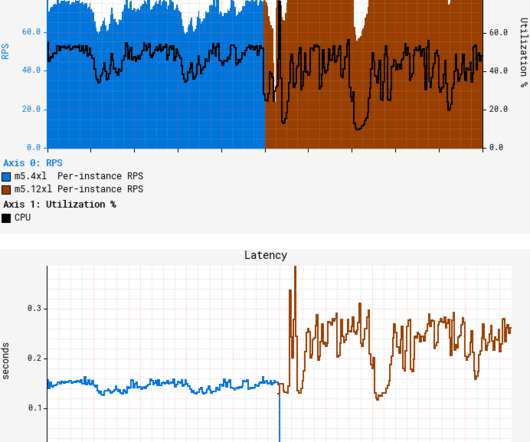

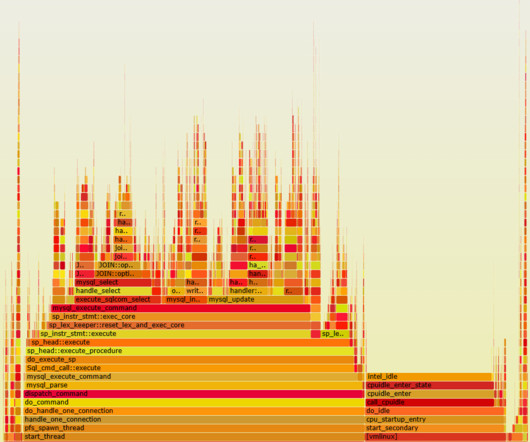

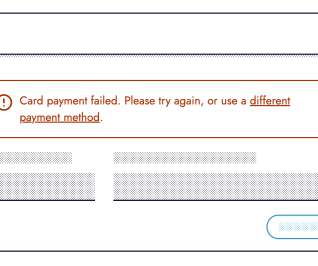

A quick canary test was free of errors and showed lower latency, which is expected given that our standard canary setup routes an equal amount of traffic to both the baseline running on 4xl and the canary on 12xl. What’s worse, average latency degraded by more than 50%, with both CPU and latency patterns becoming more “choppy.”

Let's personalize your content