Processing Time Series Data With QuestDB and Apache Kafka

DZone

APRIL 4, 2023

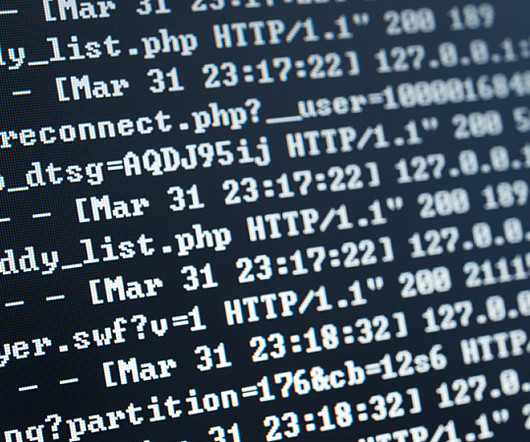

Apache Kafka is a battle-tested distributed stream-processing platform that is popular in the financial industry for handling mission-critical transactional workloads. Kafka's ability to handle large volumes of real-time market data makes it a core infrastructure component for trading, risk management, and fraud detection.