Crucial Redis Monitoring Metrics You Must Watch

ScaleGrid

JANUARY 25, 2024

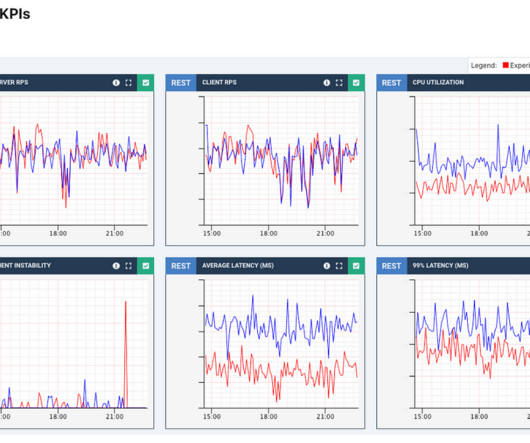

Key Takeaways

Critical performance indicators must be monitored to preserve Redis’s high throughput and low latency. These include latency, CPU usage, memory utilization, cache hit rate, and the number of connected clients, connected replicas (slaves), and evictions. These metrics directly affect both the stability and the efficiency of a Redis deployment.
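Most of these indicators appear in the output of the Redis `INFO` command as `field:value` lines grouped under section headers such as `# Stats`. The sketch below parses that text and derives the hit rate as `keyspace_hits / (keyspace_hits + keyspace_misses)`. It is a minimal illustration, not a monitoring tool. The sample text and its numbers are made up. The helper names `parse_info`, `hit_rate` and `summarize` are hypothetical. In practice the input would be the text printed by `redis-cli INFO`. Latency is not reported by `INFO`, so it is not covered here; `redis-cli --latency` is one way to sample it.

```python
def parse_info(text: str) -> dict[str, str]:
    """Parse the 'field:value' lines of Redis INFO output into a dict."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and section headers such as "# Stats".
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(":")
        if sep:
            fields[key] = value
    return fields


def hit_rate(fields: dict[str, str]) -> float:
    """Cache hit rate = keyspace_hits / (keyspace_hits + keyspace_misses)."""
    hits = int(fields.get("keyspace_hits", 0))
    misses = int(fields.get("keyspace_misses", 0))
    total = hits + misses
    # Avoid dividing by zero on a freshly started server with no lookups yet.
    return hits / total if total else 0.0


def summarize(fields: dict[str, str]) -> dict[str, float]:
    """Pick out the metrics named above (hypothetical selection)."""
    return {
        "connected_clients": int(fields["connected_clients"]),
        "connected_slaves": int(fields["connected_slaves"]),
        "evicted_keys": int(fields["evicted_keys"]),
        "used_memory": int(fields["used_memory"]),
        "used_cpu_sys": float(fields["used_cpu_sys"]),
        "hit_rate": hit_rate(fields),
    }


# Illustrative INFO excerpt with made-up values, not real server output.
SAMPLE_INFO = """\
# Clients
connected_clients:42

# Memory
used_memory:1048576

# Stats
evicted_keys:7
keyspace_hits:900
keyspace_misses:100

# Replication
role:master
connected_slaves:2

# CPU
used_cpu_sys:12.5
used_cpu_user:30.25
"""

if __name__ == "__main__":
    summary = summarize(parse_info(SAMPLE_INFO))
    for name, value in summary.items():
        print(f"{name}: {value}")
```

With the sample above, 900 hits and 100 misses give a hit rate of 0.9. Tracking how that ratio and `evicted_keys` change over time is usually more informative than any single snapshot.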
