Implementing service-level objectives to improve software quality

Dynatrace

DECEMBER 27, 2022

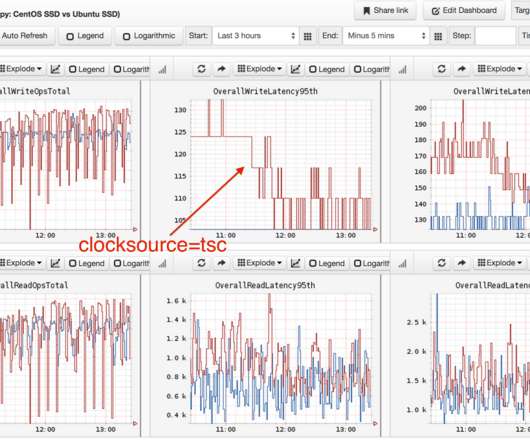

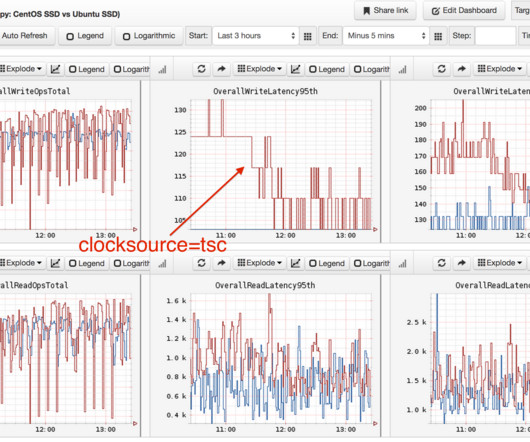

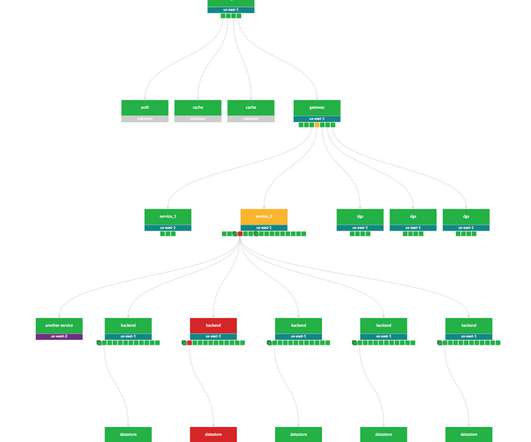

Instead, they can ensure that services conform to the pre-established benchmarks. First, it helps to understand that applications, along with the services and infrastructure that support them, generate telemetry data based on traffic from real users. Latency is the time it takes for a request to be served. Reliability.
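To make the idea of checking a service against a pre-established latency benchmark concrete, here is a minimal sketch in Python. It is not taken from the article or from any Dynatrace API: the function name `evaluate_latency_slo`, the 300 ms threshold, the 95% target, and the sample latencies are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SloResult:
    sli: float     # fraction of requests served within the latency threshold
    target: float  # fraction the objective requires
    met: bool      # True when the measured fraction reaches the target


def evaluate_latency_slo(
    latencies_ms: Sequence[float],
    threshold_ms: float = 300.0,  # assumed benchmark, not from the article
    target: float = 0.95,         # assumed objective, not from the article
) -> SloResult:
    """Compare observed request latencies against a latency objective."""
    if not latencies_ms:
        raise ValueError("no requests observed")
    # A request counts as "good" when it was served within the threshold.
    good = sum(1 for value in latencies_ms if value <= threshold_ms)
    sli = good / len(latencies_ms)
    return SloResult(sli=sli, target=target, met=sli >= target)


# Hypothetical telemetry: latencies (in ms) of ten real-user requests.
sample = [120.0, 80.0, 310.0, 95.0, 150.0, 290.0, 60.0, 450.0, 110.0, 130.0]
result = evaluate_latency_slo(sample)
print(f"{result.sli:.0%} of requests within threshold; SLO met: {result.met}")
```

With these sample values, 8 of the 10 requests finish within 300 ms, so the measured indicator is 80% and the assumed 95% objective is not met. In practice the latencies would come from the telemetry the services emit rather than from a hard-coded list.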
