Optimizing data warehouse storage

The Netflix TechBlog

DECEMBER 21, 2020

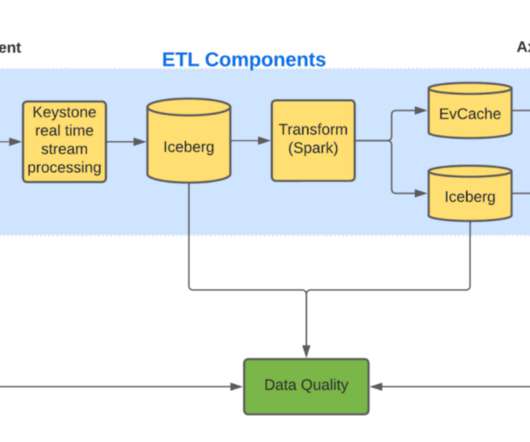

By Anupom Syam

Background

At Netflix, our current data warehouse contains hundreds of petabytes of data stored in AWS S3, and each day we ingest and create additional petabytes. Some storage optimizations are prerequisites for a high-performance data warehouse. Iceberg plans to enable this in the form of delta files.
