Optimizing CDN Architecture: Enhancing Performance and User Experience

IO River

NOVEMBER 2, 2023

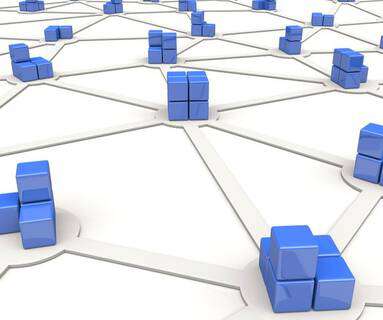

A content delivery network (CDN) is a distributed network of servers placed in multiple geographic locations so that web content can be delivered to end users more efficiently.

What is CDN Architecture?

CDN architecture is the blueprint that guides how a CDN provider's points of presence (PoPs) are distributed.
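
To make the idea of geographically distributed PoPs concrete, here is a minimal sketch that picks the PoP closest to a user by great-circle distance. The PoP names, coordinates, and the `nearest_pop` helper are hypothetical and used only for illustration. Real CDNs typically steer traffic with DNS or anycast routing and take latency and load into account, so treat this as a simplified model rather than how any particular provider works.

```python
import math

# Hypothetical PoP locations (name -> (latitude, longitude)); illustrative only.
POPS = {
    "frankfurt": (50.11, 8.68),
    "new_york": (40.71, -74.01),
    "singapore": (1.35, 103.82),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_pop(user_location, pops=POPS):
    """Return the name of the PoP with the smallest distance to the user."""
    return min(pops, key=lambda name: haversine_km(user_location, pops[name]))


if __name__ == "__main__":
    paris = (48.86, 2.35)
    print(nearest_pop(paris))
```

With this toy data, a user in Paris would be served from the Frankfurt PoP, since it is far closer than New York or Singapore. Adding more PoPs to the map shortens the typical distance between users and servers, which is the core motivation for distributing them.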
