What is Greenplum Database? Intro to the Big Data Database

Scalegrid

MAY 13, 2020

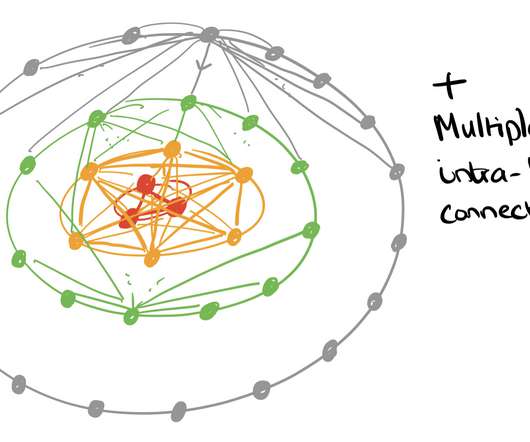

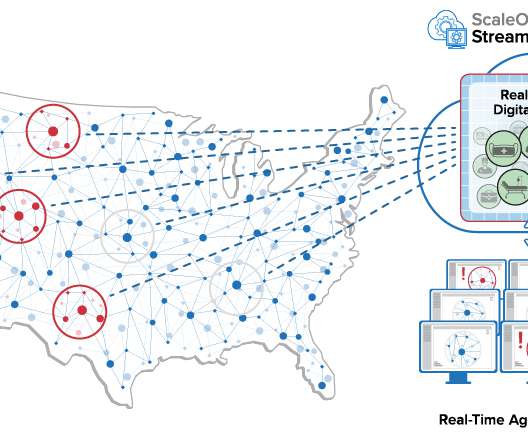

In this blog post, we explain what Greenplum is, and break down the Greenplum architecture, advantages, major use cases, and how to get started. It’s architecture was specially designed to manage large-scale data warehouses and business intelligence workloads by giving you the ability to spread your data out across a multitude of servers.

Let's personalize your content