Grail™, the Dynatrace causational data lakehouse, gives you instant access to any kind of data, so anyone can get answers within seconds. Because all historical data is immediately available in Grail, no data rehydration is needed, even when the suspicious timeframe you are analyzing is long or lies far in the past.

To help you raise the quality of your investigation results, Dynatrace offers an easy way of structuring data using DPL Architect. This tool lets you quickly extract typed fields from unstructured text (such as log entries) using the Dynatrace Pattern Language (DPL), enabling you to extract timestamps, determine status codes, identify IP addresses, or work with real JSON objects. This allows you to answer even the most complex questions with ultimate precision. The best thing: the whole process is performed on read when the query is executed, which means you have full flexibility and don’t need to define a structure when ingesting data.

Investigating log data with the help of DQL

Let’s look at a practical example. The simplest log analysis use cases can be solved in seconds by applying a simple filter to the query results. If a CISO asks, “Have we seen the IP address 40.30.20.1 in our logs within the last year?” a simple DQL query seems to suffice:

fetch logs, from: -365d | filter contains(content, "40.30.20.1")

This query returns all the records that contain the string value 40.30.20.1.

In the next step, adding DQL aggregation functions enables you to answer more complex questions like “How many times in an hour has this IP address visited our website within the last two weeks?” Again, a simple DQL query helps you out:

fetch logs, from: -14d | filter contains(content, "40.30.20.1") | summarize by: bin(timestamp, 1h), count()
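To make the aggregation step concrete, here is a minimal Python sketch of what "bin by hour, then count" means. It only illustrates the idea and says nothing about how Grail evaluates the query; the timestamps are made-up sample values.

```python
from collections import Counter
from datetime import datetime, timezone

# Hypothetical timestamps of records that passed the filter (sample values only).
timestamps = [
    datetime(2023, 8, 28, 10, 22, 4, tzinfo=timezone.utc),
    datetime(2023, 8, 28, 10, 27, 10, tzinfo=timezone.utc),
    datetime(2023, 8, 28, 11, 5, 0, tzinfo=timezone.utc),
]


def bin_1h(ts):
    """Truncate a timestamp to the start of its hour (a one-hour bin)."""
    return ts.replace(minute=0, second=0, microsecond=0)


# Count how many records fall into each one-hour bin.
counts = Counter(bin_1h(ts) for ts in timestamps)

for hour, n in sorted(counts.items()):
    print(hour.isoformat(), n)
```

Each output row corresponds to one hour and the number of matching records in it, which is the shape of result the summarize step produces.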

However, this kind of simple filtering using a sub-string search is prone to errors and is not precise enough. The mentioned filters return all records that contain 40.30.20.1, but are not necessarily from the source IP portion of the log format. The string 40.30.20.1 might also appear elsewhere, for example, in query parameters, and a simple string filter will return all records containing this search term:

19.31.99.1 - - [28/Aug/2023:10:27:10 -0300] "GET /index.php?ip=40.30.20.1 HTTP/1.1" 200 3395
40.30.20.109 - - [28/Aug/2023:10:22:04 -0300] "GET / HTTP/1.1" 200 2216

The first log record is matched because of its query parameter, which is something we don’t care about in our case. The second log record matched on its source IP, which is the field we’re really interested in, but even there the substring search is imprecise: 40.30.20.109 is a different address that merely starts with 40.30.20.1. In a nutshell, if you’re querying terabytes of logs and wish to drill down to specific records, filtering strings from plaintext log content is not enough.
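The difference between a substring search and a comparison on a typed field can be sketched in a few lines of Python. This is an illustration only: it assumes the client IP is the first space-separated token of each record, and it uses Python's standard ipaddress module rather than anything Dynatrace-specific. Note that neither sample record has a client address of exactly 40.30.20.1; the second one, 40.30.20.109, only starts with that string.

```python
import ipaddress

# The two sample access-log records from the text.
records = [
    '19.31.99.1 - - [28/Aug/2023:10:27:10 -0300] "GET /index.php?ip=40.30.20.1 HTTP/1.1" 200 3395',
    '40.30.20.109 - - [28/Aug/2023:10:22:04 -0300] "GET / HTTP/1.1" 200 2216',
]

needle = "40.30.20.1"

# Substring search: the needle may appear anywhere in the line.
substring_hits = [r for r in records if needle in r]


def client_ip(record):
    """Parse the first space-separated token as an IP address (assumed layout)."""
    return ipaddress.ip_address(record.split(" ", 1)[0])


# Field comparison: only the client IP field, compared as a typed value.
field_hits = [r for r in records if client_ip(r) == ipaddress.ip_address(needle)]

print(len(substring_hits))  # 2
print(len(field_hits))      # 0
```

Both records are substring hits, yet neither was sent from 40.30.20.1, so both would be false positives in an investigation of that address.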

In security use cases, however, questions from CISOs are rarely this trivial, and the logs that hold the answers often have a more complex format (for example, AWS CloudTrail logs). To avoid mistakes, we need to search structured data: extracting fields from the record content minimizes false positives, increases the precision of queries, and gets the maximum out of DQL.

Extracting patterns using DPL

Dynatrace Pattern Language (DPL) is a pattern language that allows you to describe a schema using matchers, where a matcher is a mini-pattern that matches a certain type of data. These matchers can be used to extract new fields from the schemaless data in Grail to enable more precise log filtering. Consider the same Apache access log example from above:

19.31.99.1 - - [28/Aug/2023:10:27:10 -0300] "GET /index.php?ip=40.30.20.1 HTTP/1.1" 200 3395

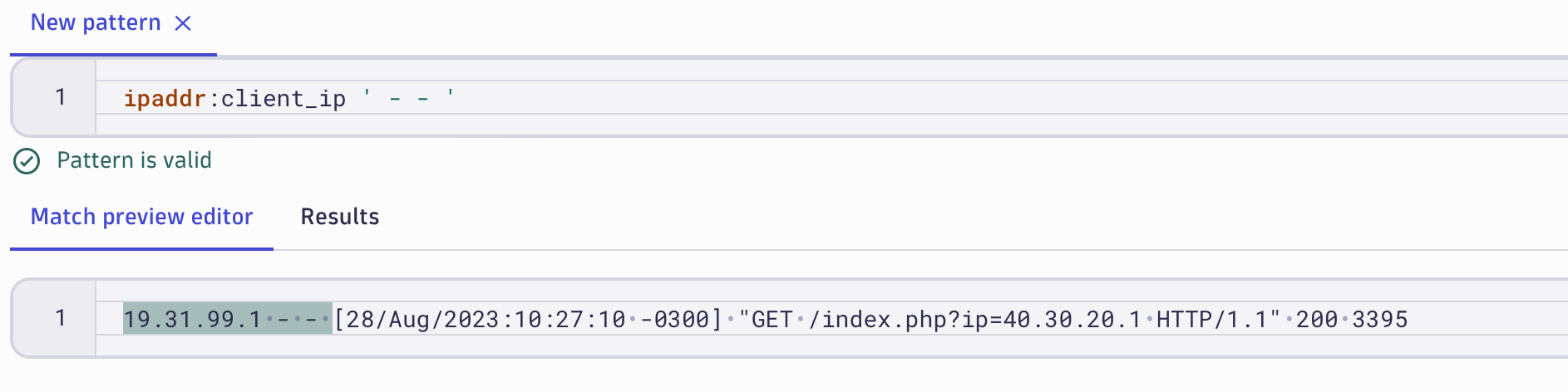

To extract the client IP addresses from the beginning of the log, a simple DPL pattern can be used:

IPADDR:client_ip

The IPADDR matcher matches both versions of IP addresses (IPv4 addresses in dot-decimal notation and IPv6 addresses in hextet notation), leaving all other IP-like strings unmatched. For example, if the log record contains a dot-decimal number that is NOT an IPv4 address (999.999.999.999), it won’t be matched.
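As a rough analogy for this kind of type-aware matching, the Python sketch below validates candidate tokens with the standard ipaddress module. It is not the IPADDR matcher itself, and the exact set of strings the two accept may differ; the IPv6 sample address is a hypothetical value from the documentation range.

```python
import ipaddress


def is_ip(token):
    """Return True if the token parses as a valid IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(token)
        return True
    except ValueError:
        return False


# A valid IPv4 address, a valid IPv6 address, and an IP-like string that is not valid.
for token in ["40.30.20.1", "2001:db8::1", "999.999.999.999"]:
    print(token, is_ip(token))
```

The first two tokens are accepted and the third is rejected, because each octet of an IPv4 address must be in the range 0 to 255.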

DPL patterns can be applied in DQL using the parse command. The simplest way to get started with DPL is to use Dynatrace DPL Architect.

DPL Architect to the rescue

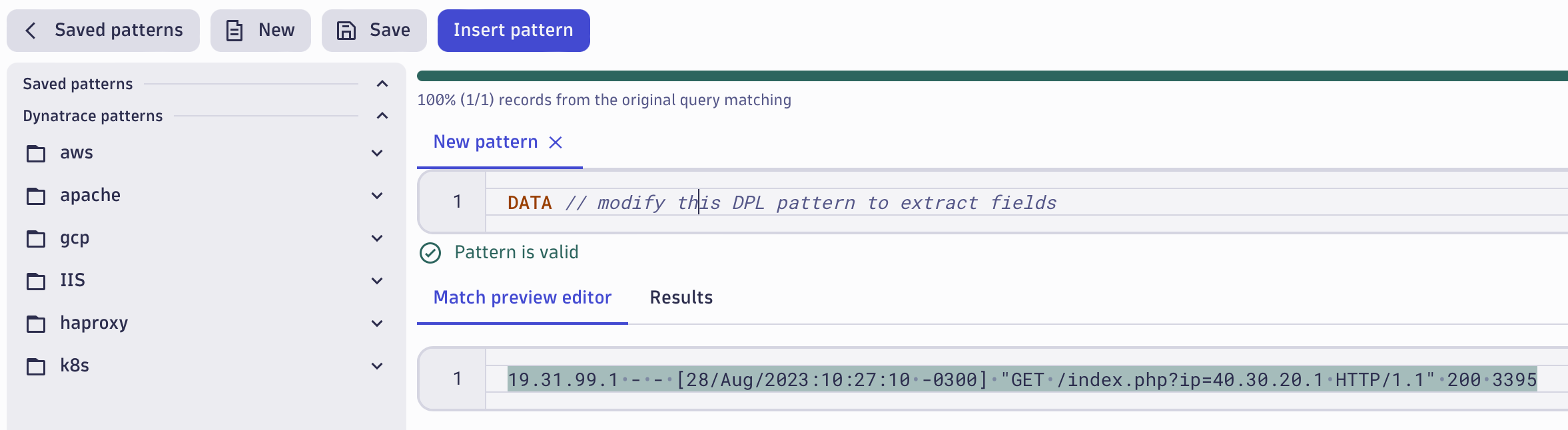

DPL Architect is a handy tool, accessible through the Notebooks app, which supports you in quickly extracting fields from records. It helps create patterns, provides instant feedback, and allows you to save and reuse DPL patterns, for faster access to data analytics use cases.

To open DPL Architect, execute a DQL query, select the content, and choose Extract fields.

Figure 1: Extract fields in Notebooks using DPL Architect (24-second video)

Starting with preset patterns

The simplest way to extract data is using one of the ready-to-use preset patterns available for the most popular technologies, such as AWS, Microsoft, or GCP. Start exploring AWS VPC Flow logs or analyzing Kubernetes audit logs by choosing the patterns from the panel on the left side. You can also customize the list by adding your own individual patterns.

Developing a new pattern using DPL Architect

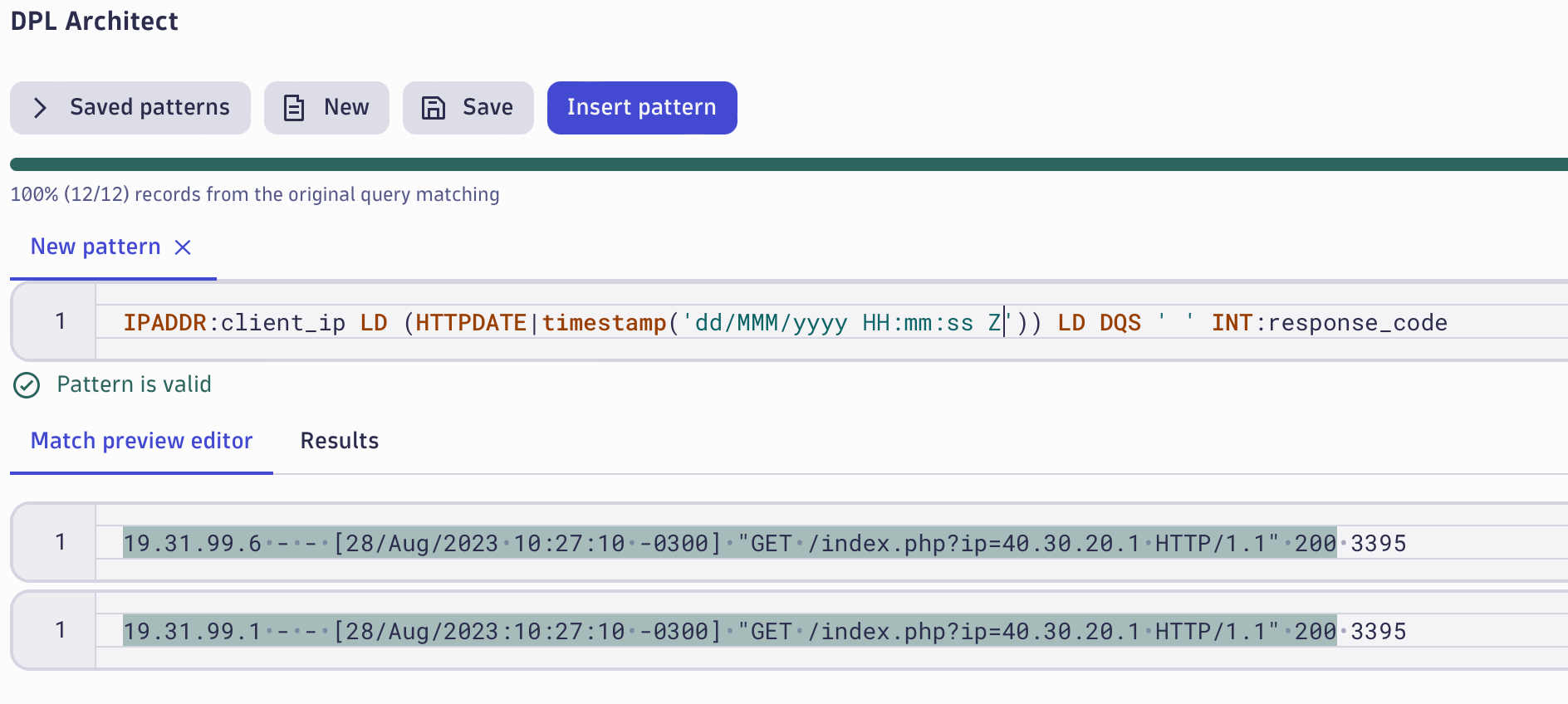

DPL Architect helps you create your own patterns and provides instant feedback. Start typing a DPL expression in the pattern field, and review the matching data (highlighted below) in the preview editor.

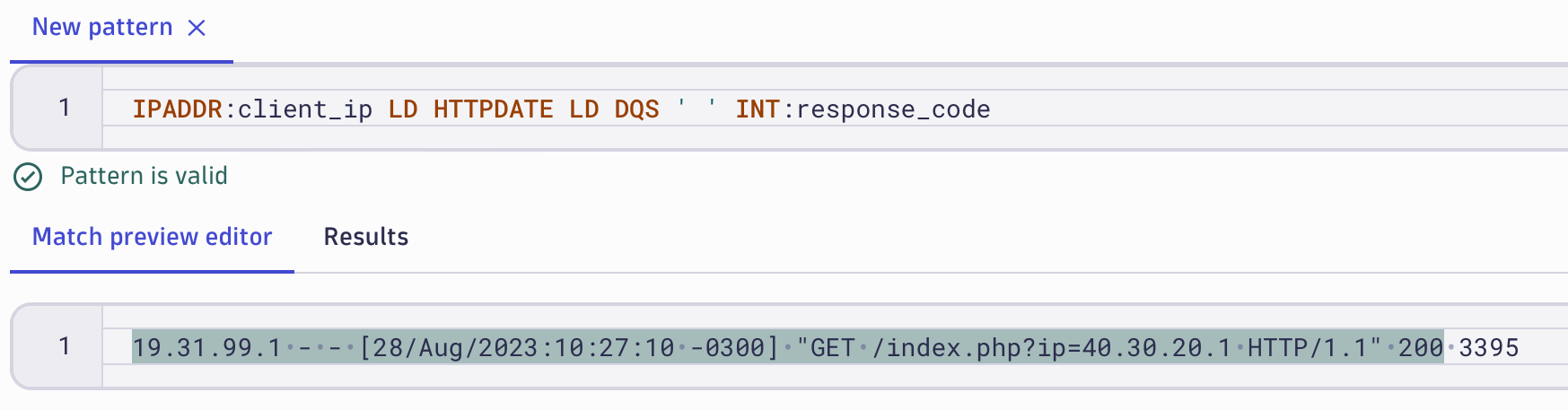

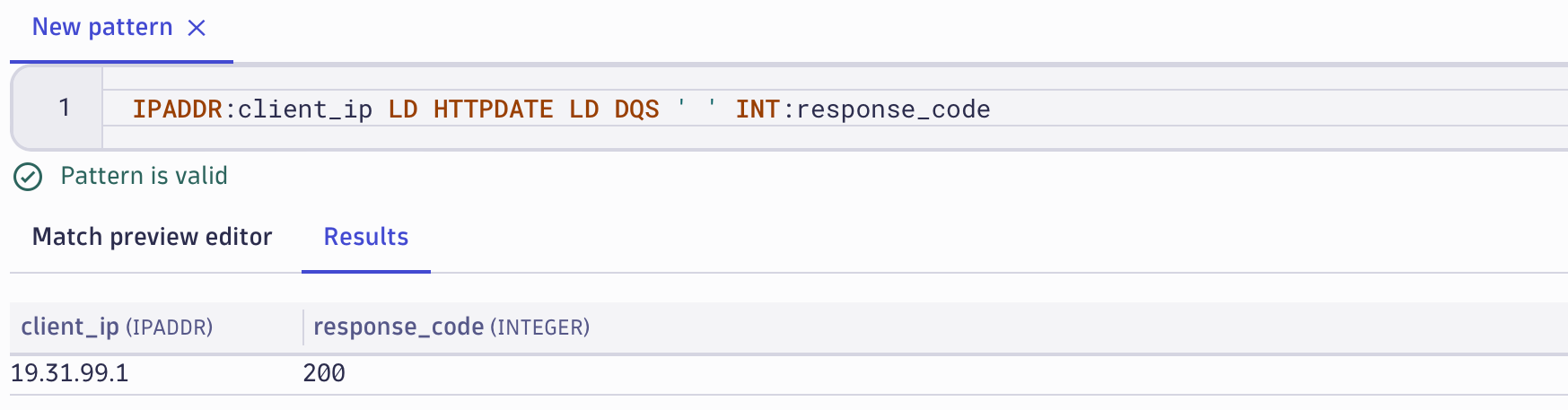

To access an extracted field in the result set, you need to give it a name. In the example below, we’re only interested in the client_ip and the related response_code. The pattern matches the whole record, but only these two fields are extracted because only they have extract names. Any other data matched by the pattern won’t be visible in the results (however, it’s very easy to add more fields later if necessary).

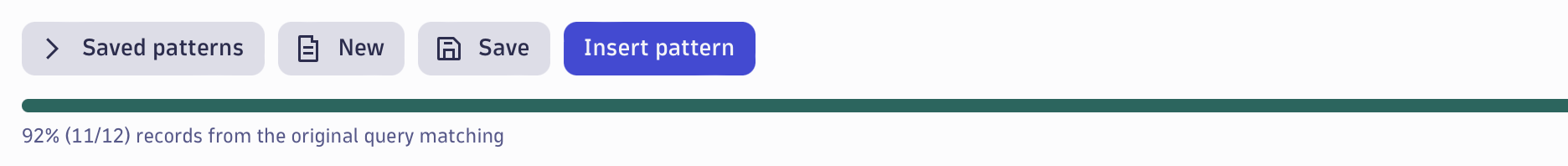
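The idea that a pattern matches the whole record while only named parts become fields has a close analogy in regular expressions with named groups. The Python sketch below uses a regular expression, not DPL, and the expression itself is an assumption written for the Apache access-log sample shown earlier.

```python
import re

record = '19.31.99.1 - - [28/Aug/2023:10:27:10 -0300] "GET /index.php?ip=40.30.20.1 HTTP/1.1" 200 3395'

# The expression covers the whole line, but only two parts carry a name.
pattern = re.compile(
    r'(?P<client_ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" (?P<response_code>\d{3}) \d+'
)

match = pattern.fullmatch(record)

# groupdict() returns only the named groups; unnamed parts are matched but dropped.
fields = match.groupdict()
fields["response_code"] = int(fields["response_code"])

print(fields)  # {'client_ip': '19.31.99.1', 'response_code': 200}
```

The timestamp, request line, and byte count all had to match for the record to be accepted, but they do not appear in the result because they were never named.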

Remember, we started our journey with the DPL Architect by selecting query results in a notebook. The colored bar at the top of the DPL Architect shows how many records from your query result match the current DPL pattern. This enables you to modify the DPL pattern to ensure it matches all records in the resultset.

Records that don’t match the current pattern can easily be added to the Match preview panel by selecting Add to preview.
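The match indicator boils down to a simple ratio: matched records over all records in the result set. Here is a hedged Python sketch of that bookkeeping, again with a regular expression standing in for a DPL pattern. The third record is a hypothetical malformed line added for illustration.

```python
import re

records = [
    '19.31.99.1 - - [28/Aug/2023:10:27:10 -0300] "GET /index.php?ip=40.30.20.1 HTTP/1.1" 200 3395',
    '40.30.20.109 - - [28/Aug/2023:10:22:04 -0300] "GET / HTTP/1.1" 200 2216',
    'server restarted by operator',  # hypothetical record in a different format
]

# Stand-in for a pattern that describes the access-log format.
pattern = re.compile(r'\S+ \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \d+')

matched = [r for r in records if pattern.fullmatch(r)]
unmatched = [r for r in records if not pattern.fullmatch(r)]

print(f"{len(matched)}/{len(records)} records matched")  # 2/3 records matched
for r in unmatched:
    print("unmatched:", r)
```

Listing the unmatched records is the useful part: they show which variation of the format the pattern still has to account for.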

After changing the DPL pattern, any previously unmatched records will be highlighted and the progress bar on the top informs you that 100% of the records in the Notebooks resultset are matched. It’s now time to insert our pattern into the DQL query by selecting Insert Pattern.

Precise extraction of fields from complex data

DPL also provides matchers for more complex data structures, like key-value pairs, structures, and JSON objects, that enable you to parse individual sub-elements from the whole object. Consider the following log record:

1693230219 230.4.130.168 C "GET / HTTP/1.1" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:105.0) Gecko/20100101 Firefox/105.0" 186 {"username":"james","result":0 }

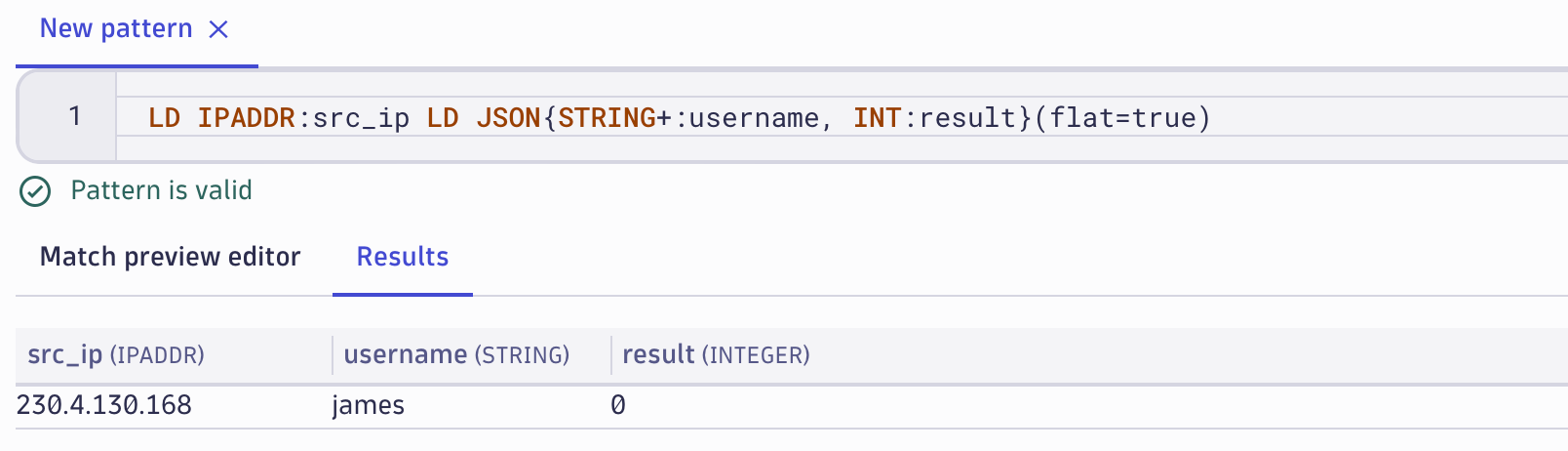

Imagine your CISO comes with a request: “Give me the list of all IP addresses where the user ‘james’ has successfully logged in from.” Extracting these three fields and building a DQL query is as easy as pie:

LD IPADDR:src_ip LD JSON{STRING+:username, INT:result}(flat=true)

This DPL pattern extracts three fields: an IP address from the record, a string from the JSON object element username, and an integer from the JSON element result.

Summarizing the results based on these fields can be done with the following DQL:

fetch logs
| parse content, "LD IPADDR:src_ip LD JSON{STRING+:username, INT:result}(flat=true)"
| filter username == "james" and result == 0
| summarize by: src_ip, count()
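For readers who want to see the parse, filter, and summarize steps spelled out, the following Python sketch mimics them on the sample record. It is an approximation under stated assumptions, not a reimplementation of DPL: the regular expression assumes the source IP is the second token and the JSON object is the last element of the line, and the second and third records are hypothetical additions.

```python
import json
import re
from collections import Counter

records = [
    # Sample record from the text.
    '1693230219 230.4.130.168 C "GET / HTTP/1.1" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:105.0) Gecko/20100101 Firefox/105.0" 186 {"username":"james","result":0 }',
    # Hypothetical records: "james" only in the URL, and a login with a non-zero result.
    '1693230225 230.4.130.168 C "GET /?user=james HTTP/1.1" "curl/8.0" 90 {"username":"anna","result":0 }',
    '1693230230 10.1.2.3 C "GET / HTTP/1.1" "curl/8.0" 90 {"username":"james","result":1 }',
]

# Assumed layout: <epoch> <src_ip> ... <json object at end of line>
line_re = re.compile(r'^\S+ (?P<src_ip>\S+) .* (?P<payload>\{.*\})$')


def parse(record):
    """Extract src_ip, username, and result, or return None if the line does not match."""
    m = line_re.match(record)
    if m is None:
        return None
    payload = json.loads(m.group("payload"))
    return {
        "src_ip": m.group("src_ip"),
        "username": payload["username"],
        "result": payload["result"],
    }


parsed = [p for p in (parse(r) for r in records) if p is not None]

# Same condition as the filter step: username is "james" and result is 0.
hits = [p for p in parsed if p["username"] == "james" and p["result"] == 0]

# Same grouping as the summarize step: count per source IP.
counts = Counter(p["src_ip"] for p in hits)
print(dict(counts))  # {'230.4.130.168': 1}

# For comparison, a plain substring search for "james" matches every record.
print(sum("james" in r for r in records))  # 3
```

Only the first record satisfies the structured filter, while the substring search returns all three.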

Imagine that you don’t have DPL available and need to filter all log records based on only searching for string values: the result would contain a lot of false positives (which only add “noise” to time-critical investigations). This is especially true with more complex log records containing nested JSON objects. The above example was oversimplified intentionally, but considering complex log records like AWS CloudTrail log records, having precise access to data in a specific node of an object makes a huge difference.

Summary

When performing security investigations or threat-hunting activities, it’s important to have precision in place to get reliable results. Historical data needs to be available, and access to object details is required for precise answers. DPL Architect enables you to quickly create DPL patterns, speeding up the investigation flow and delivering faster results. With the possibility of using Dynatrace-provided patterns for selected technology stacks, investigators can deliver answers even faster!

Check out the following video, where Andreas Grabner and I teamed up for a new episode of Dynatrace Observability Clinic. In this video, we dig deeper into the topic of extracting data via DPL, including a live demonstration of DPL Architect.
