To remain competitive in today’s fast-paced market, organizations must ensure not only that their digital infrastructure functions optimally but also that software deployments and updates are delivered rapidly and consistently. To meet customer demands and stay ahead of competitors, teams should therefore strive to accelerate releases as much as possible. But how can organizations maintain a fast deployment pace without sacrificing quality? The answer lies in quality gates.

What are quality gates?

Quality gates are checkpoints that require deliverables to meet specific, measurable success criteria before progressing. They help foster confidence and consistency throughout the entire software development lifecycle (SDLC).

Organizations can customize quality gate criteria to validate technical service-level objectives (SLOs) and business goals, ensuring that code deficiencies are detected and resolved early. Automating quality gates is ideal, as it minimizes the need to manually check and validate key metrics throughout the SDLC. Ultimately, quality gates safeguard code viability as it advances through the delivery pipeline.
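To make the checkpoint idea concrete, here is a minimal Python sketch of automated gates in a delivery pipeline. It is not a Dynatrace or SRG API: the stage names, metric names, and thresholds are hypothetical. Each gate is a function that checks a build's measured metrics against a success criterion, and the build is promoted stage by stage until a gate fails.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Metrics = Dict[str, float]  # hypothetical: metric name -> measured value

@dataclass
class Gate:
    stage: str                        # pipeline stage this gate guards
    check: Callable[[Metrics], bool]  # success criterion for that stage

def promote(build: Metrics, gates: List[Gate]) -> Tuple[List[str], Optional[str]]:
    """Advance a build through the gates in order, stopping at the first failure.

    Returns the stages the build cleared and the stage where it was stopped
    (None if every gate passed).
    """
    cleared: List[str] = []
    for gate in gates:
        if not gate.check(build):
            return cleared, gate.stage
        cleared.append(gate.stage)
    return cleared, None

# Hypothetical criteria, for illustration only.
gates = [
    Gate("unit-tests", lambda m: m["test_pass_rate"] >= 1.0),
    Gate("security", lambda m: m["open_vulnerabilities"] == 0),
    Gate("load-test", lambda m: m["error_rate"] < 0.01),
]

candidate = {"test_pass_rate": 1.0, "open_vulnerabilities": 2, "error_rate": 0.002}
print(promote(candidate, gates))  # (['unit-tests'], 'security')
```

Stopping at the first failing stage keeps later, usually more expensive stages from running on a build that has already missed its criteria.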

Benefits of quality gates

Quality gates provide several advantages to organizations, including the following:

- Optimized software performance: Quality gates assess code at different SDLC stages and ensure that only high-quality code progresses. This makes it much more likely that the software functions optimally once deployed. By actively monitoring metrics such as error rate, success rate, and CPU load, quality gates give teams confidence during software releases.

- Fewer expensive fixes: Introducing well-defined quality gates at regular checkpoints prevents issues from escalating as software advances through the pipeline. Identifying and resolving potential deficiencies early conserves resources and reduces the need for expensive fixes later in the SDLC.

- Continuous, informed improvement: Quality gates provide consistent feedback on key metrics. This steady input empowers teams to proactively address issues, which fosters continuous learning from the outset of the SDLC. This approach supports innovation, ambitious SLOs, DevOps scalability, and competitiveness.

Quality gate examples in Dynatrace

Quality gates hold much promise for organizations looking to release better software faster. But how do they function in practice? The following are specific examples that demonstrate quality gates in action:

Security gates

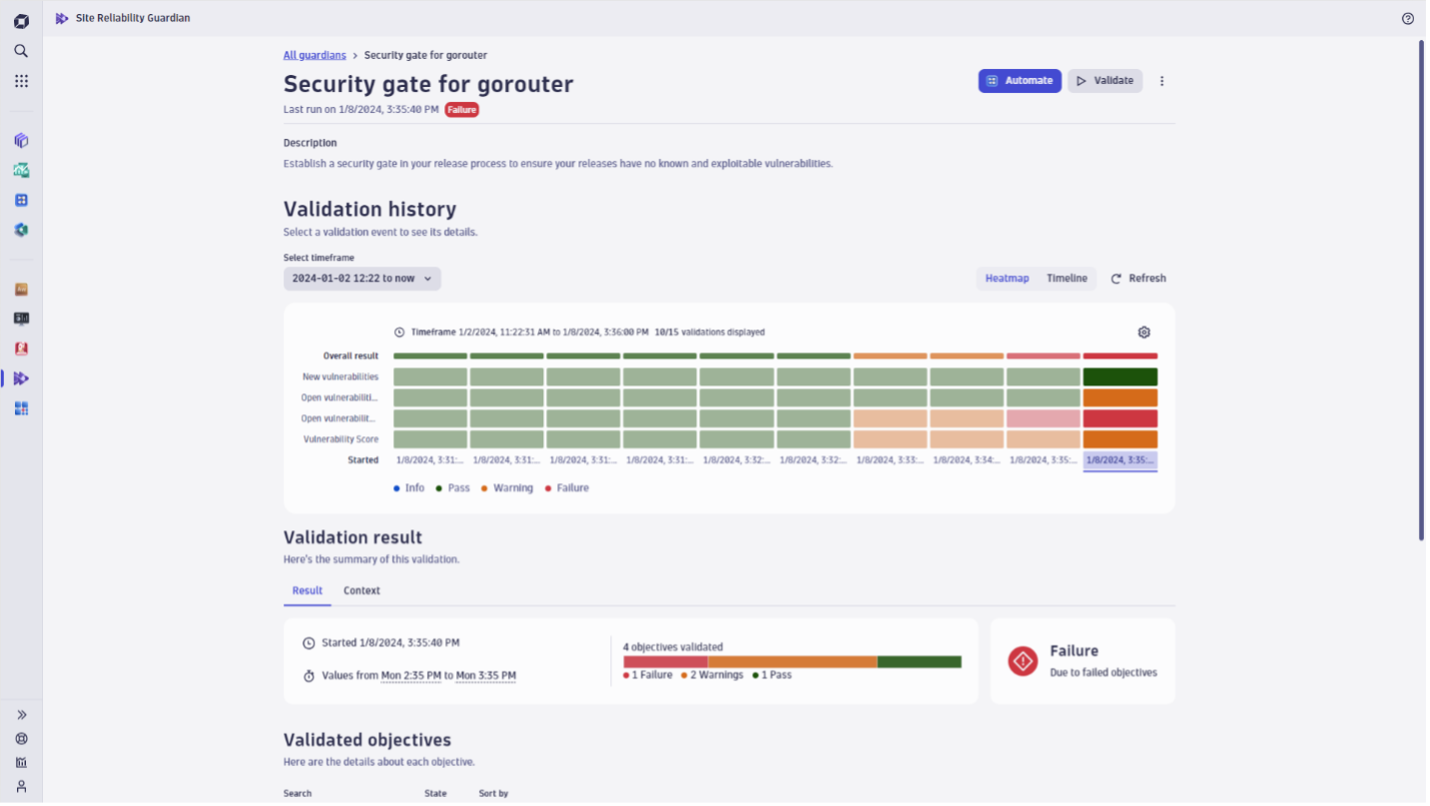

Security gates ensure that code meets key security requirements defined by development and security stakeholders. This example focuses on ensuring that releases contain no known vulnerabilities. Before the gorouter service is deployed, security gates defined in Dynatrace’s Site Reliability Guardian (SRG) validate whether the latest version of the software has introduced a vulnerability.

In this scenario, the gorouter service must meet thresholds for key metrics in the following four security areas:

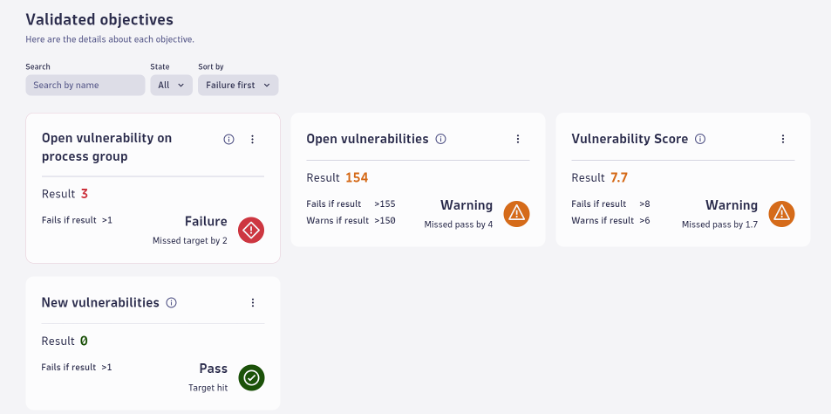

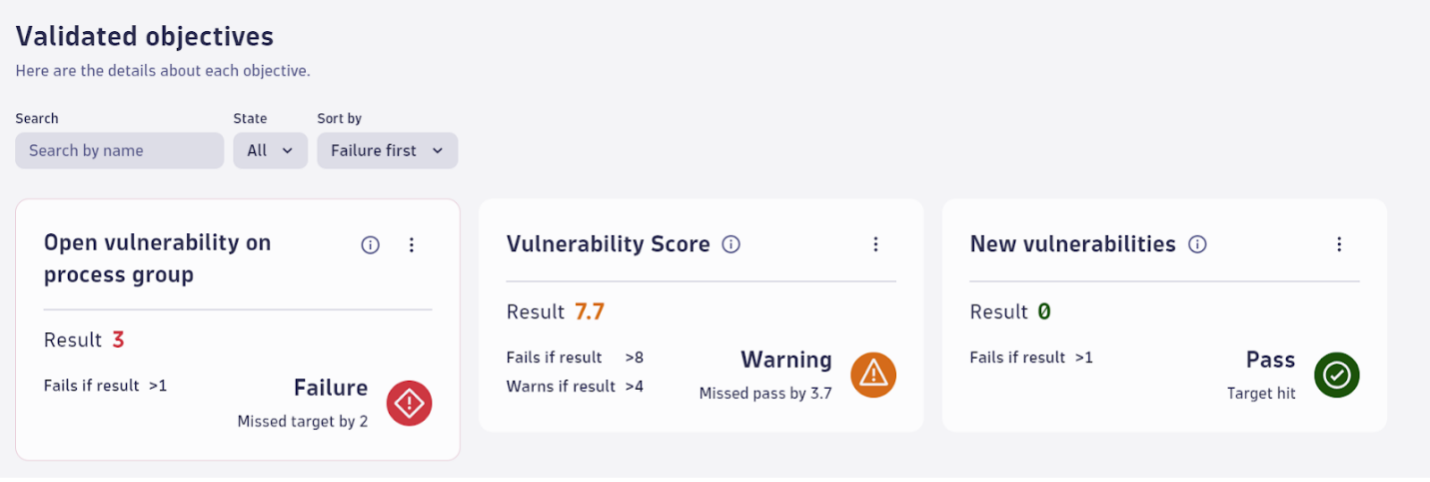

- New vulnerabilities: The number of newly detected high-profile vulnerabilities in the environment.

- Open vulnerability on process group: The total number of currently open high-profile vulnerabilities related to a process group.

- Open vulnerabilities: The total number of currently open high-profile vulnerabilities in the environment.

- Vulnerability score: The highest vulnerability risk score for a process group.

For each of these areas, the software receives a score from one to 10. Depending on the score thresholds for each SLO, the software either passes or fails the gate.

The bottom of the SRG view shows a more in-depth breakdown of these key metrics and their thresholds.

For every new build, the SRG assigns a score for each of these SLO areas. Based on the scores above, this version of the software would not pass the security gates, and the deployment process for the gorouter service would stall. If even one key metric receives a failing score, as the open vulnerability on process group metric did here, the software cannot progress further until adjustments are made.
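The "one failure blocks the release" rule can be sketched in a few lines of Python. The four objective names follow the example above, but the threshold values and observed values are hypothetical, chosen so that only the process-group objective fails, mirroring the outcome described. This is not how SRG objectives are actually configured.

```python
from typing import Dict, List

# Hypothetical upper bounds: an observed value above its bound fails the objective.
THRESHOLDS: Dict[str, float] = {
    "new_vulnerabilities": 0,
    "open_vulnerability_on_process_group": 0,
    "open_vulnerabilities": 5,
    "vulnerability_score": 7.0,
}

def failed_objectives(observed: Dict[str, float]) -> List[str]:
    """Return the objectives whose observed value exceeds its threshold."""
    return [name for name, limit in THRESHOLDS.items() if observed[name] > limit]

def security_gate_passes(observed: Dict[str, float]) -> bool:
    # A single failing objective is enough to block the deployment.
    return not failed_objectives(observed)

# Hypothetical values for one gorouter build.
gorouter_build = {
    "new_vulnerabilities": 0,
    "open_vulnerability_on_process_group": 1,
    "open_vulnerabilities": 3,
    "vulnerability_score": 6.5,
}
print(failed_objectives(gorouter_build))     # ['open_vulnerability_on_process_group']
print(security_gate_passes(gorouter_build))  # False
```

Returning the list of failing objectives, rather than only a boolean, tells the team which area to fix before the next attempt.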

Quality gates after load/performance testing

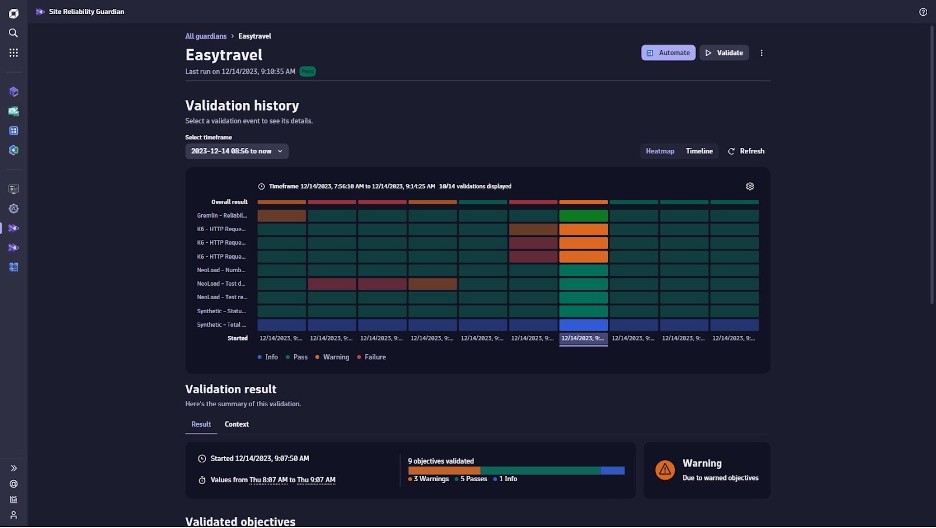

Teams can use quality gates to evaluate performance metrics. Consider “Easytravel,” a customer-facing application used by a travel agency. Before a new version of the application is deployed, the software undergoes a series of load tests that evaluate capacity and performance under simulated traffic and application demands.

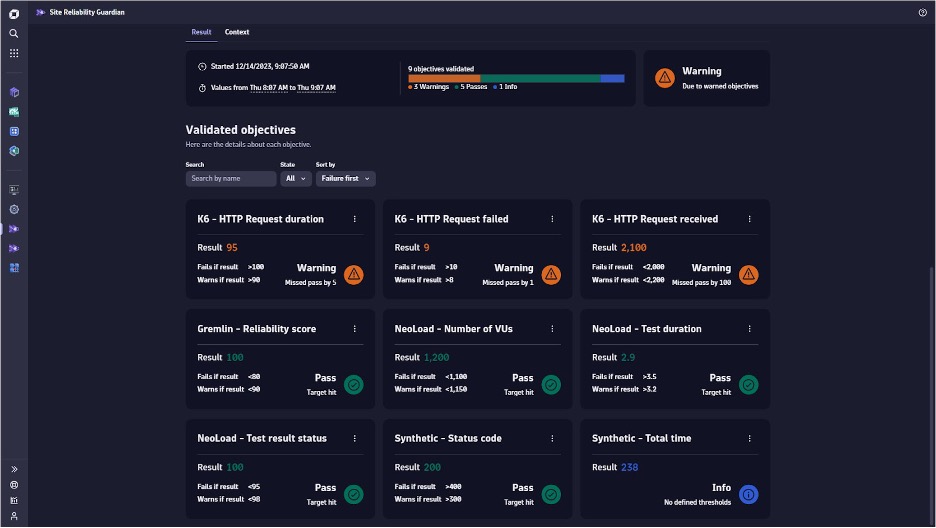

Quality gates consider these metrics and determine whether their values fulfill specific SLO requirements. The gates allow the application version to pass through if the software meets the criteria and halt its progression if not. Several tools can be used to collect metrics in load/performance testing. In this example, test results are gathered from K6, Gremlin, NeoLoad, and Dynatrace synthetics.

Per the figures above, SRG evaluates Easytravel metrics from all of these tools in one place. This way, the travel agency can easily streamline, organize, and consolidate their quality gates and metric evaluation process. The agency can also efficiently compare the newest version of Easytravel against previous versions of the software with regression testing facilitated by SRG.
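The text does not describe how SRG compares a new version against earlier ones, so the following Python sketch shows one generic way to express such a regression check, not SRG's actual logic. It assumes lower values are better, uses the median of previous builds as the baseline, and flags any metric that worsened by more than a hypothetical 10% tolerance. The metric names and numbers are made up for illustration.

```python
from statistics import median
from typing import Dict, List

def regressions(
    current: Dict[str, float],
    history: List[Dict[str, float]],
    tolerance: float = 0.10,
) -> Dict[str, float]:
    """Flag metrics that are worse than the historical baseline by more than `tolerance`.

    Assumes lower values are better (response time, error rate) and uses the
    median of previous builds as the baseline. Returned values are the relative
    increase over that baseline, rounded to three decimals.
    """
    flagged: Dict[str, float] = {}
    for metric, value in current.items():
        baseline = median(build[metric] for build in history)
        if baseline == 0:
            continue  # no meaningful relative change against a zero baseline
        change = (value - baseline) / baseline
        if change > tolerance:
            flagged[metric] = round(change, 3)
    return flagged

# Hypothetical results from three previous builds and the new build.
history = [
    {"response_time_ms": 40, "error_rate": 0.010},
    {"response_time_ms": 42, "error_rate": 0.012},
    {"response_time_ms": 41, "error_rate": 0.011},
]
current = {"response_time_ms": 44, "error_rate": 0.015}
print(regressions(current, history))  # {'error_rate': 0.364}
```

Here response time rose about 7% over its baseline and stays within tolerance, while the error rate rose about 36% and is flagged. A median baseline keeps one unusually good or bad past build from skewing the comparison.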

The new version of the Easytravel app has passed the load/performance testing quality gates. Although a handful of K6 metrics triggered “warnings,” meaning their values were close to the “failure” threshold, none of the metrics failed to meet its SLO outright. As a result, this version of Easytravel passes through this set of quality gates and is one step closer to deployment.

Quality gates to validate the “four golden signals”

The “four golden signals” represent the most crucial metrics of a customer-facing system’s performance. These metrics are latency, traffic, errors, and saturation, all of which must be key considerations when curating user experience. Teams can use quality gates to track and evaluate such metrics. Let’s review how a quality gate can function for each of the four golden signals using the same Easytravel application. Below is a sample SRG dashboard for these signals:

- Latency

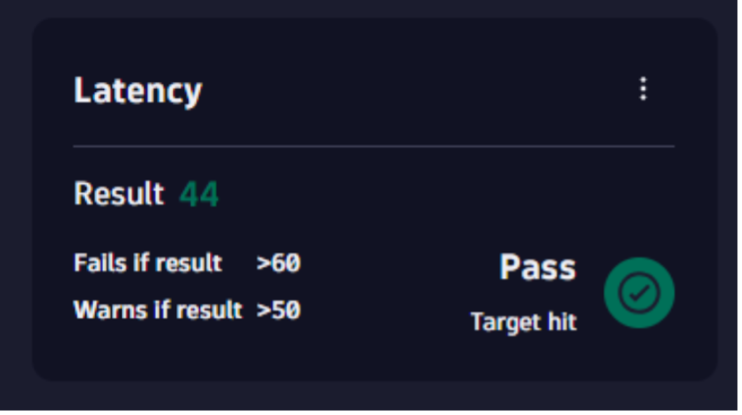

- Latency refers to the amount of time that data takes to transfer from one point to another within a system. In the context of Easytravel, one can measure the speed at which a specific page of the application responds after a user clicks on it.

- The passing threshold is anything below 50 ms.

The warning threshold is 50-60 ms.

The failure threshold is anything above 60ms.

- The passing threshold is anything below 50 ms.

- In this scenario, the response time for the latest software version is 44 ms, thus, it passes through the latency quality gate.

- Latency refers to the amount of time that data takes to transfer from one point to another within a system. In the context of Easytravel, one can measure the speed at which a specific page of the application responds after a user clicks on it.
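The three latency bands above map naturally onto a small classification function. This Python sketch uses the example's thresholds; treating exactly 50 ms and exactly 60 ms as warnings is an assumption, since the text only gives the ranges loosely.

```python
from enum import Enum

class Status(Enum):
    PASS = "pass"
    WARNING = "warning"
    FAIL = "fail"

def classify_latency(ms: float, warn_at: float = 50.0, fail_above: float = 60.0) -> Status:
    """Map a response time to a gate status using the example's thresholds.

    Below 50 ms passes, 50 to 60 ms (inclusive, by assumption) warns,
    and anything above 60 ms fails.
    """
    if ms < warn_at:
        return Status.PASS
    if ms <= fail_above:
        return Status.WARNING
    return Status.FAIL

print(classify_latency(44).value)  # pass
```

The 44 ms response time from the example falls in the passing band.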

Teams take a similar approach for the other three signals. In this example, unlike latency, none of the remaining three signals received a “pass.” The SLO for saturation was not met, so this build of Easytravel is temporarily halted. The errors and traffic SLOs did not receive a failing score, but their values were close enough to the failure threshold to trigger a warning.
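Combining the four signal results into a single gate decision can be sketched as "the worst status wins": warnings are reported but do not block, while any failure halts the build. The per-signal statuses below are the ones described for this Easytravel build; the function names and the severity ordering are illustrative assumptions, not SRG internals.

```python
from typing import Dict

# Severity order: a higher number is worse.
SEVERITY = {"pass": 0, "warning": 1, "fail": 2}

def gate_result(signals: Dict[str, str]) -> str:
    """Overall result is the worst individual signal status."""
    return max(signals.values(), key=SEVERITY.__getitem__)

def may_proceed(signals: Dict[str, str]) -> bool:
    # Warnings do not block progression; any failure halts the build.
    return gate_result(signals) != "fail"

# Statuses described for this Easytravel build.
easytravel = {
    "latency": "pass",
    "traffic": "warning",
    "errors": "warning",
    "saturation": "fail",
}
print(gate_result(easytravel), may_proceed(easytravel))  # fail False
```

The same rule explains the load-testing result earlier: a build with only warnings, like the K6 metrics, still proceeds.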

What’s next

Quality gates are only as effective as the tools that enable them. Dynatrace’s Site Reliability Guardian provides a platform for in-depth analysis and validation of service availability, performance, and capacity objectives throughout your entire digital environment. Equipped with these capabilities, Site Reliability Guardian can help you implement high-performing quality gates.

Interested in delivering better software faster? Start a 15-day free trial today.
