While visiting Facebook earlier this month, I gave a shorter version of my “Welcome to the Jungle” talk, based on the eponymous WttJ article. They made a nice recording and it’s now available online here:

Facebook Engineering

Title: Herb Sutter: Welcome to the Jungle

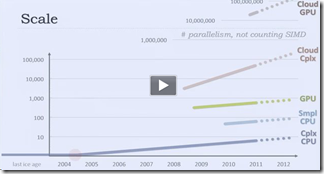

In the twilight of Moore’s Law, the transitions to multicore processors, GPU computing, and HaaS cloud computing are not separate trends, but aspects of a single trend—mainstream computers from desktops to ‘smartphones’ are being permanently transformed into heterogeneous supercomputer clusters. Henceforth, a single compute-intensive application will need to harness different kinds of cores, in immense numbers, to get its job done. — The free lunch is over. Now welcome to the hardware jungle.

The slides are available here. (There doesn’t seem to be a link to the slides on the page itself as I write this.)

For those interested in a longer version, in April I gave a 105-minute + Q&A version of this talk in Kansas City at Perceptive, also available online where I posted before.

A word about “cluster in a box”

I should have remembered that describing a PC as a “heterogeneous cluster in a box” is a big red button for people, in particular because “cluster” implies “parts can fail and program should continue.” So in the Q&A, one commenter made the point that I should have mentioned reliability is an issue.

As I answered there, I half agree – it’s true but it’s only half the story, and it doesn’t affect the programming model (see more below). One of the slides I omitted to shorten this version of the talk highlighted that there are actually two issues when you go from “Disjoint (tightly coupled)” to “Disjoint (loosely coupled)”: reliability and latency, and both are important. (I also mentioned this in the original WttJ article this is based on; just search for “reliability.”)

Even after the talk, I still got strong resistance along the lines that, ‘no, you obviously don’t get it, latency isn’t a significant issue at all, reliability is the central issue and it kills your argument because it makes the model fundamentally different.’ Paraphrasing subsequent email:

‘A fundamental difference between distributed computing and single-box multiprocessing is that in the former case you don’t know whether a failure was a communication failure (i.e., the task was completed but communication failed) or a genuine failure to carry out the task. (Hence all the complicated two-phase commit protocols, etc.) In contrast, in a single-box scenario you can know the box you’re on is working.’

Let me respond further to this here, because clearly these guys know more about distributed systems than I do and I’m always happy to be educated, but I also think we have a disconnect on three things asserted above: It is not my understanding that reliability is more important than latency, or that apps have to distinguish comms failures from app exceptions, or that N-phase commit enters the picture.

First, I don’t agree with the assertion that reliability alone is what’s important, or that it’s more important than latency, for the following reason:

- You can build reliable transports on top of unreliable ones. You do it through techniques like sequencing, redundancy, and retry. A classic example is TCP, which delivers reliable communications over notoriously and deliberately unreliable IP, which can drop and reorder packets as network nodes and communications paths keep madly disappearing and reappearing like a herd of crazed Cheshire cats. We can and do build secure, reliable global banking systems on that.

- Once you do that, you have turned a reliability issue into a performance (specifically latency) issue. Both reliability and latency are key issues when moving to loosely-coupled systems, but because you can turn the first into the second, it’s latency that is actually the more fundamental and important one – and the only one the developer needs to deal with.
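The two bullets above can be illustrated with a toy model. The following is only a minimal sketch, not how TCP is actually implemented: `LossyChannel`, `Receiver`, and `reliable_send` are hypothetical names invented for this example, and the protocol is a simple stop-and-wait scheme using sequence numbers and retry. The point it shows is that drops never surface as failures to the caller; they only raise the number of sends, which is to say the latency.

```python
import random


class Receiver:
    """Delivers each payload exactly once, in order, using sequence numbers."""

    def __init__(self):
        self.expected = 0
        self.delivered = []

    def on_packet(self, packet):
        seq, payload = packet
        if seq == self.expected:
            self.delivered.append(payload)
            self.expected += 1
        # A duplicate (seq < expected) is acknowledged again but not redelivered.
        return seq


class LossyChannel:
    """Hypothetical unreliable link that drops a fraction of packets and acks."""

    def __init__(self, receiver, drop_rate, seed=0):
        self.receiver = receiver
        self.drop_rate = drop_rate
        self.rng = random.Random(seed)

    def send(self, packet):
        """Return the receiver's ack, or None if the packet or its ack was lost."""
        if self.rng.random() < self.drop_rate:
            return None  # packet lost on the way out
        ack = self.receiver.on_packet(packet)
        if self.rng.random() < self.drop_rate:
            return None  # ack lost on the way back
        return ack


def reliable_send(channel, messages, max_tries=1000):
    """Stop-and-wait: resend each message until it is acked. Returns total sends."""
    sends = 0
    for seq, payload in enumerate(messages):
        for _ in range(max_tries):
            sends += 1
            if channel.send((seq, payload)) == seq:
                break
        else:
            raise RuntimeError("link appears to be down")
    return sends


receiver = Receiver()
channel = LossyChannel(receiver, drop_rate=0.3, seed=42)
sends = reliable_send(channel, ["a", "b", "c", "d"])
print(receiver.delivered, "delivered using", sends, "sends")
```

The caller of `reliable_send` sees every message arrive once and in order; the unreliability of the link is visible only in how many sends it took.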

For example, to use compute clouds like Azure and AWS, you usually start with two basic pieces:

- the queue(s), which you use to push the work items out/around and results back/around; and

- an elastic set of compute nodes, each of which pulls work items from the queue and processes them.

What happens when you encounter a reliability problem? A node can pull a work item but fail to complete it, for example if the node crashes or the system encounters a partial network outage or other communication problem.

Many modern systems already recover automatically by having another node re-pull the same work item, so that each work item gets done even in the face of partial failures. From the app’s point of view, such failures just manifest as degraded performance (higher latency or time-to-solution), and therefore mainly affect the granularity of parallel work items: each item has to be big enough to be worth sending elsewhere, so the minimum worthwhile size is directly proportional to latency, and only above that size do the overheads not dominate. These failures do not manifest as app-visible failures.
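To make the re-pull behavior concrete, here is a small simulation. It is a sketch under stated assumptions, not the API of Azure, AWS, or any real queue service: `WorkQueue`, its `pull`/`complete`/`expire` methods, and the `run` driver are all hypothetical, and a node crash is modeled simply as a lease that expires and puts the item back on the queue.

```python
from collections import deque


class WorkQueue:
    """Hypothetical queue with at-least-once delivery (not a real cloud API)."""

    def __init__(self, items):
        self.pending = deque(items)
        self.in_flight = {}
        self.next_lease = 0

    def pull(self):
        """Lease the next item to a node, or return None if nothing is pending."""
        if not self.pending:
            return None
        item = self.pending.popleft()
        lease = self.next_lease
        self.next_lease += 1
        self.in_flight[lease] = item
        return lease, item

    def complete(self, lease):
        """The node finished the item; remove it for good."""
        self.in_flight.pop(lease)

    def expire(self, lease):
        """The lease timed out (say the node crashed); make the item visible again."""
        self.pending.append(self.in_flight.pop(lease))


def run(queue, process, crashes_on_attempt):
    """Drain the queue. Attempt numbers in crashes_on_attempt simulate node failures."""
    results, attempts = {}, 0
    while (leased := queue.pull()) is not None:
        lease, item = leased
        attempts += 1
        if attempts in crashes_on_attempt:
            queue.expire(lease)  # handled by the infrastructure, not by app logic
            continue
        results[item] = process(item)
        queue.complete(lease)
    return results, attempts


results, attempts = run(WorkQueue([1, 2, 3]), lambda x: x * x, crashes_on_attempt={1, 3})
print(results, "after", attempts, "attempts")
```

In this run every item still gets processed; the two simulated crashes show up only as extra attempts, that is, as a longer time-to-solution.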

Yes, the elastic cloud implementation has to deal with things like network failures and retries. But no, this isn’t your problem; it’s not supposed to be your job to implement the elastic cloud, it’s supposed to be your job just to implement each node’s local logic and to create whatever queues you want and push your work item data into them.

Aside: Of course, as with any retry-based model, you have to make sure that a partly-executed work item doesn’t expose any partial side effects it shouldn’t, and normally you prevent that by doing the work in a transaction and rolling it back on failure, or in the extreme (not generally recommended but sometimes okay) resorting to compensating writes to back out partial work.
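As a rough illustration of the aside, the sketch below does one work item inside a database transaction using Python’s standard `sqlite3` module. The schema (`accounts`, `done`), the `process_item` function, and the `fail_midway` switch are hypothetical, made up for this example. The `done` table, which makes a retry of an already-completed item a no-op, is an added assumption that goes slightly beyond what the aside describes.

```python
import sqlite3


def setup():
    """Create an in-memory database with a hypothetical two-account schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts(name TEXT PRIMARY KEY, balance INTEGER)")
    conn.execute("CREATE TABLE done(item_id INTEGER PRIMARY KEY)")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("src", 100), ("dst", 0)])
    conn.commit()
    return conn


def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts"))


def process_item(conn, item_id, amount, fail_midway=False):
    """Apply one work item atomically; a failure leaves no partial writes behind."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            seen = conn.execute(
                "SELECT 1 FROM done WHERE item_id = ?", (item_id,)
            ).fetchone()
            if seen:
                return True  # a retry of an already-completed item does nothing
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = 'src'", (amount,)
            )
            if fail_midway:
                raise RuntimeError("simulated node crash")
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = 'dst'", (amount,)
            )
            conn.execute("INSERT INTO done(item_id) VALUES (?)", (item_id,))
        return True
    except RuntimeError:
        return False


conn = setup()
process_item(conn, 1, 30, fail_midway=True)  # rolled back: nothing is exposed
process_item(conn, 1, 30)                    # the retry succeeds
print(balances(conn))
```

The failed first attempt debits `src` and then aborts, but because the debit is rolled back, the retry starts from the original state.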

That covers everything except the comment about two-phase commit: Citing that struck me as odd because I haven’t heard much use of that kind of coupled approach in years. Perhaps I’m misinformed, but my impression of 2- or N-phase commit protocols was that they have some serious problems:

- They are inherently nonscalable.

- They increase rather than decrease interdependencies in the system – even with heroic efforts like majority voting and such schemes that try to allow for subsets of nodes being unavailable, which always seemed fragile to me.

- Also, I seem to remember that N-phase commit (NPC) is a blocking protocol, which if so is inherently anti-concurrency. One of the big realizations in modern mainstream concurrency in the past few years is that Blocking Is Nearly Always Evil. (I’m looking at you, future.get(), and this is why the committee is now considering adding the nonblocking future.then() as well.)
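The blocking-versus-continuation distinction in that last bullet can be sketched with Python’s standard `concurrent.futures` module. This is only an analogy, not the C++ API under discussion: `Future.result()` plays the role of a blocking `get()`, and `Future.add_done_callback()` stands in, loosely, for a `then()`-style continuation. The helper names `blocking_style` and `continuation_style` are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def square(x):
    return x * x


def blocking_style(pool, x):
    # Like a blocking get(): the caller stalls here until the result is ready.
    return pool.submit(square, x).result()


def continuation_style(pool, x, on_done):
    # Like then() in spirit: say what should happen next, then return at once.
    future = pool.submit(square, x)
    future.add_done_callback(lambda f: on_done(f.result()))
    return future


with ThreadPoolExecutor(max_workers=2) as pool:
    print(blocking_style(pool, 7))

    finished = threading.Event()
    continuation_style(pool, 7, lambda value: (print(value), finished.set()))
    finished.wait(timeout=5)  # only so this demo does not exit before the callback runs
```

In the continuation style the calling thread is free to do other work while the result is computed; the final `wait` exists only to keep the demonstration alive.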

So my impression is that these were primarily of historical interest – if they are still current in modern datacenters, I would appreciate learning more about it and seeing if I’m overly jaded about N-phase commit.

It looks like the link to the slides should have .pdf instead of -pdf in it: https://developers.facebook.com/attachment/Welcome_to_the_Jungle_-_FB.pdf

I can’t get to the slides.