Stuff The Internet Says On Scalability For December 2nd, 2022

Never fear, HighScalability is here!

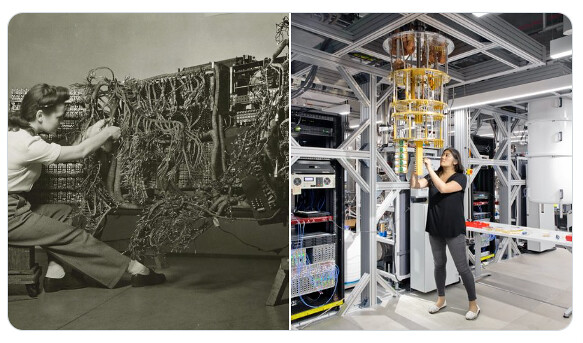

1958: An engineer wiring an early IBM computer 2021: An engineer wiring an early IBM quantum computer. @enclanglement

My Stuff:

- I'm proud to announce a completely updated and expanded version of Explain the Cloud Like I'm 10! This version adds 2x more coverage, with special coverage of AWS, Azure, GCP, and K8s. It has 482 mostly 5 star reviews on Amazon. Here's a 100% organic, globally sourced review:

- Love this Stuff? I need your support on Patreon to keep this stuff going.

Number Stuff:

- 1.5 billion: fields per second across 3000 different data types served by Twitter's GraphQL API.

- 1 million: AWS Lambda customers. 10 trillion monthly requests.

- 11: days Vanuatu's government has been knocked offline by cyber-attacks.

- 6 ronnagrams: weight of the earth—a six followed by 27 zeros.

- $40,000: spent recursively calling lambda functions.

- 1 billion: daily GraphQL requests at Netflix.

- 48 Million: Apple's pitiful bug bounty payout.

- 1 billion: requests per second handled by Roblox's caching system.

- 1 TB: new Starlink monthly data cap.

- 8 billion: oh the humanity.

- 25%: of cloud migrations are lift and shift. Good to see that number declining.

- 433: qubits in IBM's new quantum computer.

- 10%: of John Deere's revenues from subscription fees by 2030.

- 2 trillion: galaxies in the 'verse.

- 80%: Couchbase reduction in latency by deploying at the edge.

- 46 million: requests per second DDoS attack blocked by Google. Largest ever.

- 57 GB: data produced daily by the James Webb Space Telescope.

- $72,577,000,000: annual flash chip revenue.

- 20,000+: RPS handle by Stripe on Cyber Monday with a >99.9999% API success rate.

- 0: memory safety vulnerabilities discovered in Android’s Rust code.

- 1.84 petabits per second: transmitted using a single light source and optical chip, nearly twice the amount of global internet traffic in the same interval.

Quotable Stuff:

- chriswalker171: people are very confused about what’s actually going on here. People trust their NETWORK more than the results that they get in Google. So they’re going to LinkedIn, Tik Tok, Instagram, Podcasts, YouTube, Slack & Discord communities and more to get information. It’s simply a better way. This is the preview.

- Demis Hassabis~ AI might be the perfect description language for biology. In machine learning what we've always found is that the more end to end you can make it the better the system and it's probably because in the end, the system is better at learning what the constraints are than we are as the human designers of specifying it. So anytime you can let it flow end to end and actually just generate what it is you're really looking for.

- @jasondebolt: Ok this is scary. @OpenAI’s ChatGPT can generate hundreds of lines of Python code to do multipart uploads of 100 GB files to an AWS S3 bucket from the phrase “Write Python code to upload a file to an AWS S3 bucket”. It’s not just copying example code. It knows how to edit the code.

- Ben Kehoe: I feel like we're headed in a direction where serverless means "removing things developers have to deal with" when it should be "removing things the business as a whole has to deal with", and we're losing the recognition those are often not well-aligned.

- Ben Kehoe: Strongly agree, I actually used to say that iRobot is not a cloud software company, it's a cloud-enabled-features company.

- @QuinnyPig: On CloudFront 60TB of egress (including free tier) will be anywhere from $4,761.60 to 6,348.80 *depending entirely on where in the world the users requesting the file are*. Yes, that's impossible to predict. WELCOME TO YOUR AWS BILL!

- @mthenw: Graviton, Nitro, Trainium everywhere. in other words, end-to-end optimised hardware designs (another way to optimise overall cloud efficiency). #aws #reinvent

- @esignoretti: Cloudflare is clearly challenging AWS. It's very visible in the marketing layer ("worrying about cold starts seems ridiculous", "zero egress fee object storage").

- @asymco: by year four of the Alexa experiment, "Alexa was getting a billion interactions a week, but most of those conversations were trivial commands to play music or ask about the weather." Those questions aren't monetizable. Apple’s Siri was at 4 billion interactions a week in Aug 2019

- klodolph: Basically, you want to be able to do a “snapshot read” of the database rather than acquiring a lock (for reasons which should be apparent). The snapshot read is based on a monotonic clock. You can get much better performance out of your monotonic clock if all of your machines have very accurate clocks. When you write to the database, you can add a timestamp to the operation, but you may have to introduce a delay to account for the worst-case error in the clock you used to generate the timestamp. More accurate timestamps -> less delay. From my understanding, less delay -> servers have more capacity -> buy fewer servers -> save millions of dollars -> use savings to pay for salaries of people who figured out how to make super precise timestamps and still come out ahead.

- Meta: One could argue that we don’t really need PTP for that. NTP will do just fine. Well, we thought that too. But experiments we ran comparing our state-of-the-art NTP implementation and an early version of PTP showed a roughly 100x performance difference

- @GaryMarcus: 2. LLMs are unruly beasts; nobody knows how to make them refrain 100% of time from insulting users, giving bad advice, or goading them into bad things. Nobody knows how to solve this.

- @copyconstruct: Looking back at the 2010s, one of the reasons why many startups that were successful during that period ended up building overly complex tech stacks was in no small part because of their early hiring practices (a ka, only hiring from Google + FB). This had both pros and cons.

- @rudziiik: It's actually a real thing, called sonder - which is the realization that each random passerby is living a life as vivid and complex as your own. It's a neologism, this term was created by John Koenig, and it has a deep connection with our empathy. THE MORE YOU KNOW

- @B_Nied: Many years ago one of the DB servers at my workplace would occasionally fail to truncate it's transaction log so it would just grow & grow until it filled up that server's HD & stop working & I'd have to manually truncate it. I've thought about that a lot lately.

- @jeremy_daly : Have we strayed so far from the purest definition of #serverless that there's no going back? Or is this just what “serverless” is now?

- @oliverbcampbell: Second is the "Culling." When you've got 90% of the people not performing, they're actually negatively impacting the 10% who ARE performing above and beyond. And that's why the layoffs happened. Paraphrased, 'shit is gonna change around here, get on board or get out'

- @copyconstruct: The most ill-conceived idea that seems to have ossified among certain group of people is that Twitter was failing because the engineering culture wasn’t high-performing enough. The problem with Twitter was product and leadership, and not engineering not executing well enough.

- @ID_AA_Carmack: A common view is that human level AGI will require a parameter count in the order of magnitude of the brain’s 100 trillion synapses. The large language models and image generators are only about 1/1000 of that, but they already contain more information than a single human could ever possibly know. It is at least plausible that human level AGI might initially run in a box instead of an entire data center. Some still hope for quantum magic in the neurons; I think it more likely that they are actually kind of crappy computational elements.

- @saligrama_a: We are moving from GCP for @stanfordio Hack Lab (170+ student course, each student gets a cloud VM) to on-prem for next year The quarterly cost for GCP VMs and bandwidth will be enough for several years worth of on-prem infra - why pay rent when you could own at same price?

- @nixCraft: 7 things all kids need to hear 1 I love you 2 I'm proud of you 3 I'm sorry 4 I forgive you 5 I'm listening 6 RAID is not backup. Make offsite backups. Verify backup. Find out restore time. Otherwise, you got what we call Schrödinger backup 7 You've got what it takes

- MIŠKO HEVERY: This is why I believe that a new generation of frameworks is coming, which will focus not just on developer productivity but also on startup performance through minimizing the amount of JavaScript that the browser needs to download and execute on startup. Nevertheless, the future of JS frameworks is exciting. As we’ve seen from the data, Astro is doing some things right alongside Qwik.

- Vok250: Lambda is insanely cheap. This is a super common pattern, often called "fat Lambda", that has always been a fraction of the cost of the cheapest AWS hardware in my experience. The one exception is if you have enough 24/7 load to require a decently large instance. If you're running those t3.small kind of ec2's then you are probably better off with a fat Lambda.

- MIT Team: Imagine if you have an end-to-end neural network that receives driving input from a camera mounted on a car. The network is trained to generate outputs, like the car's steering angle. In 2020, the team solved this by using liquid neural networks with 19 nodes, so 19 neurons plus a small perception module could drive a car. A differential equation describes each node of that system. With the closed-form solution, if you replace it inside this network, it would give you the exact behavior, as it’s a good approximation of the actual dynamics of the system. They can thus solve the problem with an even lower number of neurons, which means it would be faster and less computationally expensive.

- @jackclarkSF: Stability AI (people behind Stable Diffusion and an upcoming Chinchilla -optimal code model) now have 5408 GPUs, up from 4000 earlier this year - per @EMostaque in a Reddit ama

- @ahidalgosre: Autoscaling is an anti-pattern. Complex services do not scale in any lateral direction, and the vectors via which they do scale are too unknowable/unmeasurable to predict reliably. It’s better to slightly under-provision a few instances to find out when they fail & over-provision others to absorb extra traffic.

- joshstrange: I left Aurora serverless (v1, v2 pricing was insane) for PS and I've been extremely happy. It was way cheaper and less of a headache than RDS and my actual usage was way less than I anticipated (since it's hard to think in terms of row reads/writes when working at a high level). With PS I get a dev/qa/staging/prod DB for $30/mo vs spinning up multiple RDS instances. Even with Aurora Serverless (v1) where you can spin down to 0 it was cheaper to go with PS. 1 DB unit on Aurora Serverless (v1) cost like $45/mo (for my 1 prod instance) so for $15 less I got all my other environments without having to wait for them to spin up after they went to sleep.

- @swshukla: Technical debt does not necessarily mean that the product is poor. Any system that has been running for years will accrue tech debt, it does not imply that engineers are not doing their job, it also does not imply that the management is bad. It's just a culmination of tradeoffs

- @GergelyOrosz: I'm observing more and more startups choose a 'TypeScript stack' for their tech: - Node.JS w TypeScript on the backend - React with TypeScript on the frontend - Some standard enough API approach (eg GraphQL) This is both easy to hire for + easy to pick up for any developer.

- @Nick_Craver: I've seen this confusion: because a service *is* running with a dependency down does not mean it can *start* with that same dependency down. Say for example an auth token not yet expired, but unable to renew, that can spin for a while before failure. But on restart? Dead.

- Gareth321: This is easily one of the most expansive Acts (EU Digital Markets Act) regarding computing devices passed in my lifetime. The summary is in the link. As an iPhone user, this will enable me to: * Install any software * Install any App Store and choose to make it default * Use third party payment providers and choose to make them default * Use any voice assistant and choose to make it default * User any browser and browser engine and choose to make it default * Use any messaging app and choose to make it default

- Seth Dobson: In our experience, depending on your organizational structure and operating model, leveraging Kubernetes at scale comes with more overhead than leveraging other CSP-native solutions such as AWS Elastic Container Service (ECS), AWS Batch, Lambda, Azure App Service, Azure Functions, or Google Cloud Run. Most of this extra overhead comes into play simply because of what Kubernetes is when compared with the other products.

- Chris Crawford: Thus, the heart of any computer is a processing machine. That is the very essence of computing: processing

- flashgordon: That was hilarious. Basically (unless this needs a reframing/realignment/repositioning/reorienting): Q: "are less sensors less safe/effective?" A: "well more sensors are costly to the organization and add more tech debt so safety is orthogonal and not worth answering".

- @bitboss: "Let's introduce Microservices, they will make our delivery faster." A sentence which I hear over and over again and which I consider to be an oversimplification of a complex challenge. A thread 🧵with ten points: 1. How do you define "fast"? Daily releases, hourly releases, weekly releases? What are your quality goals? Do you want to be "fast" in every area of your domain or only certain parts?

- Meta: After we launched this optimization, we saw major gains in compute savings and higher advanced encoding watch time. Our new encoding scheme reduced the cost of generating our basic ABR encodings by 94 percent. With more resources available, we were able to increase the overall watch time coverage of advanced encodings by 33 percent. This means that today more people on Instagram get to experience clearer video that plays more smoothly.

- Leo Kim: Seen in this light, TikTok’s ability to build its transmission model around our relationship to our phones represents a tremendous shift. If TV brought media into people’s homes, TikTok dares to bring it directly into our minds. The immediate, passive reception we experience on the platform relies heavily on the context of the phone, just as the familiar reception of television relies on the context of the home.

- @yahave: Two recent papers suggest a beautiful combination of neural and symbolic reasoning. The main idea is to use LLMs to express the reasoning process as a program and then use a symbolic solver (in this case, program execution) to solve each sub-problem.

- Claudio Masolo: Uber observed a statistically significant boost across all key metrics since it started to provide the information on performances to Freight drivers: -0.4% of late cancellations, +0.6% of on-time pick-up, +1% of on-time drop-off and +1% of auto tracking performances. These performance improvements resulted in an estimated cost saving of $1.5 million in 2021.

- Geoff Huston: In my opinion It’s likely that over time QUIC will replace TCP in the public Internet. So, for me QUIC is a lot more than just a few tweaks to TCP…QUIC takes this one step further, and pushes the innovation role from platforms to applications, just at the time when platforms are declining in relative importance within the ecosystem. From such a perspective the emergence of an application-centric transport model that provides faster services, a larger repertoire of transport models and encompassing comprehensive encryption was an inevitable development.

- Geoff Huston: In the shift to shut out all forms of observation and monitoring have we ourselves become collateral damage here? Not only are we handing our digital assets to the surveillance behemoths, we also don't really understand what assets we are handing over, and nor do we have any practical visibility as to what happens to this data, and whether or not the data will be used in contexts that are hostile to our individual interests. I have to share Paul’s evident pessimism. Like many aspects of our world these days, this is not looking like it will end all that well for humanity at large!

- Meltwater: These two figures [ Elasticsearch] show the improvements that were made in heap usage patterns. The left figure shows that the heap usage is basically flat for the new cluster. The right figure shows that the sum of the heap usage is also lower now (14 TiB vs 22 TiB) even though that was not a goal by itself. We can also see in the above figures that we have been able to further optimize and scale down the new cluster after the migration was completed. That would not have been possible in the old version where we always had to scale up due to the constant growth of our dataset.

- Jeremy Milk: Time and time again we see hackers creating new tactics, and simple non-negotiation doesn’t protect your business or solve for operational downtime. We’ve seen that paying ransoms doesn’t stop attacks, and engaging in counterattacks rarely has the desired outcome. Strong defensive strategies, like object lock capability, can’t block cybercriminals from accessing and publishing information, but it does ensure that you have everything you need to bring your business back online as quickly as possible.

- Backblaze: What would the annualized failure rate for the least expensive choice, Model 1, need to be such that the total cost after five years would be the same as Model 2 and then Model 3? In other words, how much failure can we tolerate before our original purchase decision is wrong?

- Charlie Demerjian: Genoa stomps the top Ice Lake in SPECInt_Rate 2017 (SIR17), 1500+ compared to 602 for Ice Lake

- Matthew Green: Our work is about realizing a cryptographic primitive called the One-Time Program (OTP). This is a specific kind of cryptographically obfuscated computer program — that is, a program that is “encrypted” but that you can mail (literally) to someone who can run it on any untrusted computer, using input that the executing party provides. This ability to send “secure, unhackable” software to people is all by itself of a holy grail of cryptography, since it would solve so many problems both theoretical and practical. One-time programs extend these ideas with a specific property that is foreshadowed by the name: the executing computer can only run a OTP once.

- Robert Graham: The term "RISC" has been obsolete for 30 years, and yet this nonsense continues. One reason is the Penisy textbook that indoctrinates the latest college students. Another reason is the political angle, people hating whoever is dominant (in this case, Intel on the desktop). People believe in RISC, people evangelize RISC. But it's just a cult, it's all junk. Any conversation that mentions RISC can be improved by removing the word "RISC".

- nichochar: My prediction: before the end of the decade, cruise and waymo have commoditized fleets doing things that most people today would find unbelievable. Tesla is still talking a big game but ultimately won't have permits for you to be in a Tesla with your hands off of the wheel.

- Linus: I absolutely *detest* the crazy industry politics and bad vendors that have made ECC memory so "special".

- Roblox: Specifically, by building and managing our own data centers for backend and network edge services, we have been able to significantly control costs compared to public cloud. These savings directly influence the amount we are able to pay to creators on the platform. Furthermore, owning our own hardware and building our own edge infrastructure allows us to minimize performance variations and carefully manage the latency of our players around the world. Consistent performance and low latency are critical to the experience of our players, who are not necessarily located near the data centers of public cloud providers.

- benbjohnson: BoltDB author here. Yes, it is a bad design. The project was never intended to go to production but rather it was a port of LMDB so I could understand the internals. I simplified the freelist handling since it was a toy project. At Shopify, we had some serious issues at the time (~2014) with either LMDB or the Go driver that we couldn't resolve after several months so we swapped out for Bolt. And alas, my poor design stuck around.

- Ann Steffora Mutschler: Dennard scaling is gone, Amdahl’s Law is reaching its limit, and Moore’s Law is becoming difficult and expensive to follow, particularly as power and performance benefits diminish. And while none of that has reduced opportunities for much faster, lower-power chips, it has significantly shifted the dynamics for their design and manufacturing.

- arriu: Minimum order of 25 [for Swarm Iot], so roughly $3725 for one year unless you get the "eval kit" @ $449. USD $5/MO PER DEVICE. Provides 750 data packets per device per month (up to 192 Bytes per packet), including up to 60 downlink (2-way) data packets

- antirez: For all those reasons, I love linked lists, and I hope that you will, at least, start smiling at them.

- brunooliv: If you don't use the SDK, how can you judge anything as being "overly complicated"? I mean, I don't know about you, but, last time I checked, signatures, certificates, security and all that stuff IS SUPPOSED to be super complicated because it's a subject with a very high inherent complexity in and of itself. The SDK exists and is well designed to precisely shield you from said complexity. If you deliberately choose not to use it or can't for some reasons then yes... The complexity will be laid bare

- krallja: FogBugz was still on twelve ElasticSearch 1.6 nodes when I left in 2018. We also had a custom plugin (essentially requesting facets that weren't stored in ElasticSearch back from FogBugz), which was the main reason we hadn't spent much time thinking about upgrading it. To keep performance adequate, we scheduled cache flush operations that, even at the time, we knew were pants-on-head crazy to be doing in production. I can't remember if we were running 32-bit or 64-bit with Compressed OOPs.

- consumer451: Simulation theory is deism for techies.

- Roman Yampolskiy: The purpose of life or even computational resources of the base reality can’t be determined from within the simulation, making escape a necessary requirement of scientific and philosophical progress for any simulated civilization.

- Mark Twain: A lie can travel around the world and back again while the truth is lacing up its boots.

- @dfrasca80: I have a #lambda written in #rustlang with millions of requests per day that does worse-case scenarios 2 GetItem and 1 BatchGetItem to #dynamodb. It usually runs between 10ms & 15ms. I decided to cache the result in the lambda execution context and now < 3ms #aws #serverless

- @leftoblique: The people who work for you have three resources: time, energy, and give-a-fuck. Time is the cheapest. It replenishes one hour every hour. Energy is more expensive. When you're out you need lots of time off to recharge. Once give-a-fuck is burned, it's gone forever.

- @kwuchu: One of my cofounder friends asked me how much downtime we have when deploying code and I looked her dead in the eye and said, "What downtime? We don't have any downtime when we deploy. If we do, something's gone wrong." So I prodded her for more context on why she asked…

- @houlihan_rick: Every engineer who looks at a running system will tell you exactly how broken it is and why it needs to be rebuilt, including the one who looks at the system after it is rebuilt.

- Jeremy Daily: I think the goal should be to bring the full power of the cloud directly into the hands of the everyday developer. That requires both guardrails and guidance, as well as the right abstractions to minimize cognitive overhead on all that undifferentiated stuff.

- @kocienda: No. This is false. I helped to create two different billion-user projects at Apple: WebKit and iPhone. I was on these teams from the earliest stages and I never once came close to sleeping at the office. It’s not an essential part of doing great work.

- Videos from Facebook's Video @Scale 2022 are available. You may be interested in Lessons Learned: Low Latency Ingest for live broadcast.

- @swardley: X : Did you see "Why we're leaving the cloud" - Me : Nope. X : And? Me : Why are you talking infrastructure clouds to me? This is 2022. You should have migrated to severless years ago. I'm not interested in niche edge cases.

- @BorisTane~ I spoke with a team yesterday that is moving away from serverless in favor of managed k8s on aws it wasn't an easy decision for them and they went through a thorough review of their architecture the reasons they highlighted to me are: operational complexity, complex CI/CD, local testing, monitoring/observability, relational database, environment variables and secrets, cost.

- @theburningmonk: First of all, serverless has been successfully adopted at a much bigger scale in other companies. LEGO for example have 26 squads working mostly with serverless, and PostNL has been all in on serverless since 2018. So technology is probably not the problem.

- @adrianco: @KentBeck talking about the cost of change is driven by the cost of coupling in the system. My observation is that “there is no economy of scale for change”, when you bundle changes together the work and failure rate increases non-linearly

- @planetscaledata: "We saved 20-30% by switching from AWS Aurora to PlanetScale." - Andrew Barba, Barstool Sports

- @MarcJBrooker: Distributed systems are complex dynamical systems, and we have relatively little understanding of their overall dynamics (the way load feeds back into latency, latency feeds into load, load feeds into loss, loss feeds into load, etc)...Which is fun, but we should be able to do that kind of thing directly from our formal specifications (using the same spec to specify safety/liveness and these emergent dynamic properties)!

- @rakyll: When cloud providers think about scale in databases, they are obsessed about horizontal scaling. OTOH, small to medium companies are getting away mostly with vertical scaling. Managing the vertical fleet is the actual problem.

- @QuinnyPig: It starts with building a model of unit economics. For every thousand daily active users (or whatever metric), there is a cost to service them. Break that down. What are the various components? Start with the large categories. Optimize there. “Half of it is real-time feed?! Okay, what can we do to reasonably lower that? Is a 30 second consistency model acceptable?” “Huh, 5% is for some experiment we don’t care about. Let’s kill it,” becomes viable sometimes as well; this economic model lets the business reason about what’s otherwise an unknown cost.

- @Carnage4Life: Most tech companies need people who can use SQL but they hire and compensate like they need employees who could invent SQL.

- @RainofTerra: So something I think people should know: the majority of Twitter’s infrastructure when I was interviewing there early in 2022 was bare metal, not cloud. They have their own blob store for storing media. These teams are now gutted, and those things don’t run themselves.

- @emmadaboutlife: i remember at an old job we got acquired and the new CEO showed up for a tour and part way through he just unplugged a bunch of datacentre servers without telling anyone because "they weren't doing anything and were using too much power"

- @MarkCallaghanDB: The largest cost you pay to get less space-amp is more CPU read-amp, IO read-amp is similar to a B-Tree. It is remarkable that LSM was designed with disks in mind (better write efficiency) but also work great with flash (better space efficiency).

- CNRS: Specifically, the researchers have made use of the reactions of three enzymes to design chemical “neurons” that reproduce the network architecture and ability for complex calculations exhibited by true neurons. Their chemical neurons can execute calculations with data on DNA strands and express the results as fluorescent signals.

- Anton Howes: People can have all the incentives, all the materials, all the mechanical skills, and even all the right general notions of how things work. As we’ve seen, even Savery himself was apparently inspired by the same ancient experiment as everyone else who worked on thermometers, weather-glasses, egg incubators, solar-activated fountains, and perpetual motion machines. But because people so rarely try to improve or invent things, the low-hanging fruit can be left on the tree for decades or even centuries.

- K-Optional: Firebase increasingly shepherds users over to GCP for essential services.

- dijit: So instead I became CTO so I can solve this mess properly, I don’t hire devops, I hire infra engineers, build engineers, release engineers and: backend engineers. Roles so simple that you already have a clue what they do, which is sort of the point of job titles.

- Will Douglas Heaven: The researchers trained a new version of AlphaZero, called AlphaTensor, to play this game. Instead of learning the best series of moves to make in Go or chess, AlphaTensor learned the best series of steps to make when multiplying matrices. It was rewarded for winning the game in as few moves as possible…In many other cases, AlphaTensor rediscovered the best existing algorithm.

- Marc Brooker: For example, splitting a single-node database in half could lead to worse performance than the original system. Fundamentally, this is because scale-out depends on avoiding coordination and atomic commitment is all about coordination. Atomic commitment is the anti-scalability protocol.

- Rodrigo Pérez Ortega: Plants ignore the most energy-rich part of sunlight because stability matters more than efficiency, according to a new model of photosynthesis.

- monocasa: The most common way to abstractly describe state machines is the directed graph, but (like nearly all graph problems) it's often just as illuminating if not more to decompose the graph into a matrix as well. So rows are states, columns are events, and the intersections are transitions. Being able to run down a column and see what happens in each of the states when a certain event is processed has made a lot of bugs much more obvious for me.

- Charity Majors: Everyone needs operational skills; even teams who don’t run any of their own infrastructure. Ops is the constellation of skills necessary for shipping software; it’s not optional. If you ship software, you have operations work that needs to be done. That work isn’t going away. It’s just moving up the stack and becoming more sophisticated, and you might not recognize it.

- the_third_wave: I want this [open sourced tractor protocol], my neighbour wants this, his neighbour - who runs an older, pre-proprietary John Deere wants this. Farmers need their tractors to work and anything that helps there is a boon. While ag contractors may run the latest most modern equipment farmers tend to have a few tractors themselves which tend to be a bit older, a bit more run-down than those shiny new JD/MF/NH/Valtra (in Sweden and Finland)/etc. machines. They can still do with some of the nicer parts of the electromagic on those machines.

- sgtfrankieboy: We have multiple CockroachDB clusters, have been for 4+ years now. From 2TB to 14TB in used size, the largest does about 3k/req sec. We run them on dedicated hosts or on Hetzner cloud instances. We tested out RDS Postgres, but that would've literally tripled our cost for worse performance.

- @donkersgood: Serverless completely inverses the load-vs-performance relationship. As we process more requests/sec, latency goes down

- @zittrain: This @newsbeagle and @meharris piece describes a retinal implant used by hundreds of people to gain sight -- one that has been declared by its vendor to be obsolete, leaving customers -- patients -- with no recourse against hardware or software bugs or issues.

- @SalehYusefnejad: "...Partitioning the ConcurrentQueue based on the associated socket reduces contention and increases throughput on machines with many CPU cores." basically, they utilize a high number of CPU cores much better now.

- @houlihan_rick: RDBMS data models are agnostic to all access patterns, which means they are optimized for none. #NoSQL tunes for common access patterns to drive efficiency where it counts. Being less efficient at everything is not a winning TCO strategy.

- @houlihan_rick: It does not matter if an RDBMS can respond to a single request with similar latency to a #NoSQL database. What matters is under load the throughput of that RDBMS will be less than half that of the #NoSQL database because joins eat CPU like locusts. It's about physics.

- @houlihan_rick: 70% of the access patterns for RDBMS workloads at Amazon touched a single row. 20% touched multiple rows on a single table. I often talk about how the other 10% drove the vast majority of infrastructure utilization because they were complex queries and joins are expensive.

- @webriots: We deploy hundreds of CloudFormation stacks and thousands of executables across all US AWS regions in many accounts in a fully reproducible fashion. It's the only system I've used that makes me feel like I have deployment superpowers. It's pretty cool:

- @tapbot_paul: Lower end SSDs are quickly racing to a $50/TB price point. I think around $25/TB I’ll do a mass migration away from spinners. Hopefully SATA 4/8TB drives are a common thing by then.

- @tylercartrr: We did 2+ billion writes to DynamoDB (@awscloud) last month 😬 Serverless + Dynamo has allowed us to scale with minimal infra concerns, but...Thankfully we are migrating that traffic to Kinesis before the ecommerce holiday season 🤑

- Neuron: Neocortex saves energy by reducing coding precision during food scarcity.

- lukas: Profiling had become a dead-end to make the calculation engine faster, so we needed a different approach. Rethinking the core data structure from first principles, and understanding exactly why each part of the current data structure and access patterns was slow got us out of disappointing, iterative single-digit percentage performance improvements, and unlocked order of magnitude improvements. This way of thinking about designing software is often referred to as data-oriented engineering,

- ren_engineer: fundamental problem is cargo cult developers trying to copy massive companies architectures as a startup. They fail to realize those companies only moved to microservices because they had no other option. Lots of startups hurting themselves by blindly following these practices without knowing why they were created in the first place. Some of it is also done by people who know it isn't good for the company, but they want to pad their resume and leave before the consequences are seen.

- Rob Carte: We’ve [FedEx] shifted to cloud...we’ve been eliminating monolithic applications one after the other after the other...we’re moving to a zero data center, zero mainframe environment that’s more flexible, secure, and cost-effective…While we’re doing this, we’ll achieve $400 million of annual savings.

- Gitlab: Organizing your local software systems using separate processes, microservices that are combined using a REST architectural style, does help enforce module boundaries via the operating system but at significant costs. It is a very heavy-handed approach for achieving modularity.

- @kellabyte: It’s a spectacular flame out when you re-platform 6-12 months on Kubernetes focusing only on automation, api gateways, side cars & at end of day $10M of salaries out the window and other dev drastically stalled only to deploy system to prod that has more outages than the last one

- @ahmetb: If you aren’t deploying and managing Kubernetes on-prem, you haven’t seen hell on earth yet.

- Douglas Crockford : The best thing we can do today to JavaScript is to retire it.

- c0l0: I am worried that the supreme arrogance of abstraction-builders and -apologists that this article's vernacular, too, emanates will be the cause of a final collapse of the tech and IT sector. Or maybe I even wish for it to happen, and fast. Everything gets SO FRICKIN' COMPLEX these days, even the very simple things. On the flip side, interesting things that used to be engineering problems are relegated to being a "cloud cost optimization" problem. You can just tell your HorizontalPodAutoscaler via some ungodly YAML incantation to deploy your clumsy server a thousand time in parallel across the rent-seekers' vast data centers. People write blogs on how you can host your blog using Serverless Edge-Cloud-Worker-Nodes with a WASM-transpiled SQLite-over-HTTP and whatnot.

- @moyix: I just helped audit ~60 singly linked list implementations in C for as many vulnerabilities as we could find. It is *astonishing* that we still use this language for anything.Out of ~400 implemented functions we looked at (11 API functions x 60 users, but some were unimplemented or didn't compile), I think I can count the number of vulnerability-free functions we encountered on one hand.

- Packet Pushers: The basic pitch around [DPUs] is to bring networking, security, and storage closer to workloads on a physical server, without having to use the server’s CPU and memory. The DPU/IPU bundles together a network interface along with its own programmable compute and memory that can run services and applications such as packet processing, virtual switching, security functions, and storage.

- Impact Lab: Since the electron transfer and proton coupling steps inside the material occur at very different time scales, the transformation can emulate the plastic behaviour of synapse neuronal junctions, Pavlovian learning, and all logic gates for digital circuits, simply by changing the applied voltage and the duration of voltage pulses during the synthesis, they explained.

Useful Stuff:

- I wonder if Snap knows they shouldn't be using the cloud? Snap: Journey of a Snap on Snapchat Using AWS:

- 300 million daily active users.

- 5 billion+ snaps per day.

- 10 million QPS.

- 400 TB stored in DynamoDB, Nightly scans run a 2 billion rows per minute. They look for friend suggestions and deleting ephemeral data.

- 900+ EKS clusters, 1000+ instances per cluster.

- Sending a snap: client (iOS, Android) sends a request to their gateway service (GW) that runs in EKS. GW talks to a media delivery service (MEDIA). MEDIA sends the snap to cloudfront and S3 so it's closer to the recipient when they need it.

- Once the client has persisted the media it sends a request to the core orchestration services (MCS). MSC checks the friend graph service, which does a permission check on if the message can be sent. The metadata is persisted into SnapDB.

- SnapDB is a database that uses DynamDB as its backend. It handles transactions, TTL, and efficiently handles ephemeral data and state synchronization. The controls helps control costs.

- Receiving a snap is very latency sensitive. MCS looks up a persistent connection for each user. in Elasticache. The server hosting the connection is found and the message is sent through that server. The media ID is used to get media from Cloudfront.

- Moving to this architecture reduced P50 latency by 24%.

- Use auto-scaling and instant type optimization (Graviton) to keep compute costs low.

- Also, How do we build a simple 𝐜𝐡𝐚𝐭 𝐚𝐩𝐩𝐥𝐢𝐜𝐚𝐭𝐢𝐨𝐧 using 𝐑𝐞𝐝𝐢𝐬?.

- Twitter is performing one of the most interesting natural experiments we've ever seen: can you fire most of your software development team and survive? The hard truth is after something is built there's almost always a layoff simply because you don't need the same number of people to maintain a thing as it takes to build a thing. But Twitter made a deep cut. It seems likely stuff will stay up in the short term because that's the way it's built. But what about when those little things go wrong? Or when you want to change something? Or figure out those trace through those elusive heisenbergs? It will be fascinating to see.

- @petrillic: for people blathering about “just put twitter in the cloud” we tried that. i was there. so just to put some numbers out there, let’s assume aws gave you a 60% discount. now you’re talking approximately 500,000 m6gd.12xlarge systems (new hw was bigger). that’s $300MM monthly. and before you add in little things like bandwidth. probably $10MM a month in S3. it’s just absurd. there is a reason after investing many thousands of staff+ hours twitter was careful about what it moved. even doing experiments in the cloud required careful deep coordination with the providers. for example, i accidentally saturated the network for an entire region for a few minutes before some sre freaked out and asked what was going on. 1,000 node presto cluster was going on. or the gargantuan bandwidth twitter uses (you think we ran a global network for nothing?). with a 90% discount on aws it would be probably be another $10MM monthly. maybe more. oh and for those going on and on about elastic scale... good luck asking for 50,000 EC2 instances instantly. just because you can provision 20 doesn't mean you can provision 50,000. even running 1,000 node presto cluster required warming it up at 100,250,500,750 first. not sure i'm the person for that, but realistically, it was mostly latency reduction. there were lots of efforts in 2019-2021 on reducing (tail) latency on requests. also, optomizing a 400Gbps backbone in the datacenter.

- @atax1a: so now that the dust has settled — it sounds like the last person with membership in the group that allows them to turn on writes to config-management left while writes were still locked, and the identity management that would allow them to get into the group is down (:..then there's all the services that _aren't_ in Mesos — a bunch of the core UNIX stuff like Kerberos, LDAP, and Puppet itself is on bare metal, managed by Puppet. Database and storage clusters have some of their base configs deployed there, too. None of that was on Musk's diagram.

- The best outcome is we're getting some details and anti-details about how Twitter works now. It's definitely a grab the elephant situation.

- @jbeda: I’m still on the RPC thing. How many RPCs do you think happen when you do a cold search on Google? I wouldn’t be surprised if it were >1000. Between search, ads, user profile, nav bar on top, location databases, etc. This is just how mature distributed distributed systems work...*sigh* it doesn’t work like that. Typically you have a few API calls from the client to a datacenter and then, within the datacenter, you have a flurry of traffic to pull everything together.

- @suryaj: Back in 2014 when I worked on Bing, 1 query translated to 20s of requests from browser to FrontDoor (entry point to Bing) to 2000+ RPCs within the DCs (multiple).

- @theyanis: i worked at Vine in 2015 and it was in the AWS cloud while a Twitter property. I think our cloud and CDN bill was $12M yearly and that’s for 30 million MAU. Twitter has 450 and the migration would cost like 1000 man years

- @MosquitoCapital: I've seen a lot of people asking "why does everyone think Twitter is doomed?" As an SRE and sysadmin with 10+ years of industry experience, I wanted to write up a few scenarios that are real threats to the integrity of the bird site over the coming weeks. 1) Random hard drive fills up. You have no idea how common it is for a single hosed box to cause cascading failures across systems, even well-engineered fault-tolerant ones with active maintenance. Where's the box? What's filling it up? Who will figure that out?

- @jbell: Flash forward to 2022. Over 5 years, we’ve migrated almost every api used by the Twitter app into a unified graphql interface. Getting api changes into production used to be measured in weeks, now it's measured in hours. For the last 2 years, EVERY SINGLE new Twitter feature was delivered on this api platform. Twitter blue, spaces, tweet edit, birdwatch, and many many many others. This api platform serves your home timeline, every tweet embedded in your favorite nytimes article, and the entirety of the 3rd-party developer api which uses our graphql system to fulfill every part of every api request. Just in this calendar year, there have been over 1000 changes to this graphql api by over 300 developers at Twitter. The api serves 1.5 BILLION graphql fields per second across 3000 different data types. The system is vast, developerable, and efficient.

- @schrep: Basic internet math for @elonmusk's claim load time in India is 20s because of 1200 RPC calls (v.s. 2s in US): Bangalore->SF is ~250ms 1200 *.25s = 5 minutes! Way off!! 20s load time = MAX of 80 sequential calls Reality is likely 5-10? 1200 is happing inside datacenter?

- AWS re:Invent 2022 videos are now available.

- The Serverless Spectrum. I really don't think serverless is a spectrum. Something is serverless if you can use the service through an API without having to worry about the underlying architecture. When you charge a minimum of $700, like AWS does for Open Search, it's not serverless. That minimum charge means you are paying for fixed resources. That's not serverless. That's not on the spectrum. FaaS is different from serverless because we've seen new container based HTTP serverless options like Cloud Run and App Runner that aren't oriented around functions, so we need to keep FaaS to label services like AWS Lambda.

- Roblox outage because of new features under load lead to a 73 hour outage at Roblox. Great incident report.

- Roblox’s core infrastructure runs in Roblox data centers. We deploy and manage our own hardware, as well as our own compute, storage, and networking systems on top of that hardware. The scale of our deployment is significant, with over 18,000 servers and 170,000 containers.

- we leverage a technology suite commonly known as the “HashiStack.” Nomad, Consul and Vault

- At this point, the team developed a new theory about what was going wrong: increased traffic…the team started looking at Consul internals for clues..Over the next 10 hours, the engineering team dug deeper into debug logs and operating system-level metrics. This data showed Consul KV writes getting blocked for long periods of time. In other words, “contention.”

- On October 27th at 14:00, one day before the outage, we enabled this feature on a backend service that is responsible for traffic routing. As part of this rollout, in order to prepare for the increased traffic we typically see at the end of the year, we also increased the number of nodes supporting traffic routing by 50%. We disabled the streaming feature for all Consul systems, including the traffic routing nodes. The config change finished propagating at 15:51, at which time the 50th percentile for Consul KV writes lowered to 300ms. We finally had a breakthrough.

- Why was streaming an issue? HashiCorp explained that, while streaming was overall more efficient, it used fewer concurrency control elements (Go channels) in its implementation than long polling. Under very high load – specifically, both a very high read load and a very high write load – the design of streaming exacerbates the amount of contention on a single Go channel, which causes blocking during writes, making it significantly less efficient

- The caching system was unhealthy so they restarted it: To avoid a flood, we used DNS steering to manage the number of players who could access Roblox. This allowed us to let in a certain percentage of randomly selected players while others continued to be redirected to our static maintenance page. Every time we increased the percentage, we checked database load, cache performance, and overall system stability. Work continued throughout the day, ratcheting up access in roughly 10% increments.

- Running all Roblox backend services on one Consul cluster left us exposed to an outage of this nature. We have already built out the servers and networking for an additional, geographically distinct data center that will host our backend services. We have efforts underway to move to multiple availability zones within these data centers

- Basecamp and HEY are moving on out—of the cloud. Why we're leaving the cloud and podcast and Why You Should Leave the Cloud – David Heinemeier Hannsson DHH.

- we have a pretty large cloud budget and gonna have some of the more specific numbers, but I think we’re at about $3 million a year

- Renting computers is (mostly) a bad deal for medium-sized companies like ours with stable growth. The savings promised in reduced complexity never materialized. So we're making our plans to leave.

- Let's take HEY as an example. We're paying over half a million dollars per year for database (RDS) and search (ES) services from Amazon. Do you know how many insanely beefy servers you could purchase on a budget of half a million dollars per year?

- Anyone who thinks running a major service like HEY or Basecamp in the cloud is "simple" has clearly never tried.

- We have gone from a hundred percent of our servers being, or more or less a hundred percent of our servers being on Preem and knowing what that takes to operate. We currently run base cam three, our, well four now actually base cam four. We run base cam four on our own servers predominantly.

- First of all, we don’t even rack the hardware. No one goes when we buy a new server. No one drives from wherever they live in the country to the data center, unpack the box, slot it into machine. No, no, no, they’re data centers who rent out what’s called this white glove service. They unwrap it, they put it in your rack, they connect the the cables and then we remotely set it up. And what we’ve just found is like, that’s just not where the bulk of the complexity is hidden.

- And I think there’s just something for me, aesthetically offensive about that. Not just a perversion of the Internet’s architecture and, and setup, but also this idea that these big tech companies, and I certainly include both Amazon and Google in that camp they’re already too big, too powerful.

- Thus I consider it a duty that we at 37signals do our part to swim against the stream. We have a business model that's incredibly compatible with owning hardware and writing it off over many years. Growth trajectories that are mostly predictable. Expert staff who might as well employ their talents operating our own machines as those belonging to Amazon or Google.

- The cloud is not should you build your own power plant, but should you buy or rent your own dishwasher.

- They were not saving on complexity. They were not running using fewer operations people, so why bother with the cloud?

- Will be using S3 for quite a while.

- OK, they aren't actually swimming against the stream. They are exactly in the stream making decisions based on their skill set, requirements and workload. Since it doesn't sound like they have a very cloud native architecture, the transition should be relatively seamless. Many people do not have the ops people and the DBAs to run these kinds of systems, so others will make different decisions based on their needs.

- Also, Building Basecamp 4. Though we don't really know what their architecture is like. I assume they are just renting machines, which if you have a good ops team is not an efficient use of funds.

- Cloudflare is making a strong case for being your serverless cloud platform of choice--based on price and features. ICYMI: Developer Week 2022 announcements. Remember, the difference between Cloudflare and other cloud platforms is that they are an edge platform provider. Your code works everywhere they have a point of presence, not in just a datacenter. So it's a harder problem to solve. They are piecing together all the base parts of a platform. They call it the Supercloud. As everyone seems to hate every neologism, I'm assuming Supercloud will be derided as well. What's on their platform?

- Durable Objects. An interesting take on the Actor model. I haven't used these yet, but I plan to.

- Queues. Work needs to pile up somewhere.

- R2. Cheaper than S3. No egress fees. Interested now?

- Workers. Still good.

- Cache Reserve. Is it a bank of some kind? No, it's a CDN with lower egress fees: During the closed beta we saw usage above 8,000 PUT operations per second sustained, and objects served at a rate of over 3,000 GETs per second. We were also caching around 600Tb for some of our large customers.

- Cloudflare Logs. Yep, logging.

- D1. A relational database you can't use yet.

- Cloudflare Pages + Cloudflare Functions. Static website hosting + server-side JavaScript code.

- Various stuff I wasn't interested in.

- @dpeek_: My recommendation to anyone building a new SaaS product: build on @Cloudflare. @EstiiHQ is completely hosted on Pages / Workers / KV / DurableObjects and we still haven’t exceeded the “pro” tier ($5 a month). I have no idea how they make money!

- Wired up predefined access patterns in NoSQL are more performant than SQL queries on a relational database. But why can't relational databases compete using materialized views? Maybe they can. How PlanetScale Boost serves your SQL queries instantly:

- PlanetScale Boost, which improves the performance and throughput of your application’s SQL queries by up to 1,000×. PlanetScale Boost is a state-of-the-art partial materialization engine that runs alongside your PlanetScale database.

- With PlanetScale Boost, we’re trying to do the opposite. Instead of planning how to fetch the data every time you read from your database, we want to plan how to process the data every time you write to your database. We want to process your data, so that reading from it afterward becomes essentially free. We want a very complex plan to apply to your writes, so there’s no planning to be done for your reads; a plan where we push data on writes instead of pulling data on reads.

- A query in PlanetScale Boost can also miss, but we try to be much smarter about the way we resolve these misses.

- Jonhoo: I'm so excited to see dynamic, partially-stateful data-flow for incremental materialized view maintenance becoming more wide-spread! I continue to think it's a _great_ idea, and the speed-ups (and complexity reduction) it can yield are pretty immense, so seeing more folks building on the idea makes me very happy.

- @houlihan_rick: With the correct data model, the time complexity of every #NoSQL query is O(log(n)). This is blazing fast compared to the simplest RDBMS join. One accurate measurement is worth a thousand expert opinions. Run the workload on both platforms with a proper data model, then laugh.

- @houlihan_rick: Sometimes people who have limited experience in #NoSQL try to tell me I am wrong. Most of Amazon's retail infrastructure runs on some variation of the #NoSQL design patterns invented by my team.

- Ok, Los Altos Hills is one of the richest neighborhoods in the world, but it's good to see people realizing networking isn't that hard.

- Comcast told him it would cost $17,000 to speed up his internet. He rallied 41 South Bay neighbors to build their own lightning-fast fiber-optic network instead: Gentry’s company handled the infrastructure procurement, contracts, logistics and retail — essentially providing the residents a turnkey fiber optic internet service — while Vanderlip and two of his neighbors, who joined with an investment of $5,000 each, bought the fiber optic infrastructure, crowdsourced new members and mapped out an initial fiber route to their houses.

- Also, The Fibre Optic Path: An alternative approach is to use a collection of cores within a multi-core fibre and drive each core at a far lower power level. System capacity and power efficiency can both be improved with such an approach.

- AI continues to be almost most useful.

- Kite is saying farewell: We built the most-advanced AI for helping developers at the time, but it fell short of the 10× improvement required to break through because the state of the art for ML on code is not good enough. You can see this in Github Copilot, which is built by Github in collaboration with Open AI. As of late 2022, Copilot shows a lot of promise but still has a long way to go. The largest issue is that state-of-the-art models don’t understand the structure of code, such as non-local context.

- AI Found a Bug in My Code: I had the model look at some existing code and rank the probability of each token appearing given the previous tokens. I also had it suggest its own token and compared the probability of my token to the probability of the model's token…I did not plan this, but it turns out there is a bug in my code. When an event listener is removed during dispatch, I return from the function. Hovering over the suspicious code, the model correctly suggests continue.

- nradov: Yes and I raised the same concern when GitHub Copilot was released. If our code contains so little entropy that an AI can reliably predict the next sequence of tokens then that is evidence we are operating at too low a level of abstraction. Such tools can certainly be helpful in working with today's popular languages, but what would a future language that allows for abstracting away all that boilerplate look like?

- Good question. What will Serverless 2.0 look like?:

- Make all services serverless so we don't have to manage anything, they scale to zero, and we shouldn't have to worry about instance sizes.

- Better caching, orchestration, HPC, etc.

- DynamoDB is easy to adopt and hard to change and adapt to new access patterns.

- Deploying functions never works the first time. Permissions wrong. Wiring wrong. Need something to check that a function will run when deployed.

- Need faster deployment.

- Infrastructure as code for works for functions, but not for everything else. Need better support for wiring, networking, and security.

- Microsoft invested a lot in owning where developers live: Cloud-based editor, VsCode GitHub, and NPM. You can build and deploy with GitHub actions. Copilot can write half the code for you.

- Just use NoSQL from the start. From Postgres to Amazon DynamoDB:

- Instacart is the leading online grocery company in North America. Users can shop from more than 75,000 stores with more than 500 million products on shelves. We have millions of active users who regularly place multiple orders with tens of items in their cart every month.

- Our primary datastore of choice is Postgres, and we have gotten by for a long time by pushing it to its limits, but once specific use cases began to outpace the largest Amazon EC2 instance size Amazon Web Services (AWS) offers, we realized we needed a different solution. After evaluating several other alternatives, we landed on Amazon DynamoDB being the best fit for these use cases.

- With a few features planned for release, we were projecting to send 8x more notifications every day than our baseline! We knew that as we continued to scale, a single Postgres instance would not be able to support the required throughput.

- The ability to scale on-demand would be a plus to general cost efficiency as well as our ability to test and launch future features that change the volume of messages sent dramatically

- we elected to thinly wrap an open source library (Dynamoid) that exposed a similar interface to the ActiveRecord

- In real terms, the specific design for the push notifications service, not only solved our scaling issues, but also helped us in cutting our costs by almost half.

- In just the past 6 months we have grown from 1 to more than 20 tables, supporting 5–10 different features across different organizations!

- @stefanwild: Yes, that’s roughly our approach. If it’s a new, somewhat standalone table that is either a straightforward fit for Dynamo and/or a workload that Postgres won’t handle well, we do use Dynamo from the start. The bar to migrate existing Postgres tables is a bit higher…Rather difficult for our heaviest workloads. But that and challenges with denormalization are probably topics for another blog post or two

- How is saying something is the way it is because all the other options are improbable any different than saying because god wants it that way? : . Our universe is the way it is, according to Neil Turok of the University of Edinburgh and Latham Boyle of the Perimeter Institute for Theoretical Physics in Waterloo, Canada, for the same reason that air spreads evenly throughout a room: Weirder options are conceivable, but exceedingly improbable.

- How we built the Tinder API Gateway:

- We have more than 500 microservices at Tinder, which talk to each other for different data needs using a service mesh under the hood.

- Tinder is used in 190 countries and gets all kinds of traffic from all over the world.

- Before TAG existed, we leveraged multiple API Gateway solutions and each application team used a different 3rd party API Gateway solution. Since each of the gateways was built on a different tech stack, managing them became a cumbersome effort.

- TAG is a JVM-based framework built on top of Spring Cloud Gateway. Application teams can use TAG to create their own instance of API Gateway by just writing configurations. It centralizes all external facing APIs and enforces strict authorization and security rules at Tinder. TAG extends components like gateway and global filter of Spring Cloud Gateway to provide generic and pre-built filters: Weighted routing, Request/Response transformations, HTTP to GRPC conversion, and more

- TAG is also used by other Match Group brands like Hinge, OkCupid, PlentyOfFish, Ship, etc. Thus TAG is serving B2C and B2B traffic for Tinder.

- My first take was converting 10 million lines of code from Java to Kotlin was just crazy. But it's Meta, so I'm sure they can pull it off. Not something mortals should do however. And the results don't seem worth the squeeze. From zero to 10 million lines of Kotlin: On average, we’ve seen a reduction of 11 percent in the number of lines of code from this migration; We found that Kotlin matched the performance of Java; We expected build times would be longer with Kotlin.

- A good example of pretty much every modern cloud architecture. WEGoT aqua: Scaling IoT Platform for Water Management & Sustainability. Also How Shiji Group created a global guest profile store on AWS.

- How Honeycomb Used Serverless to Speed up Their Servers: Jessica Kerr at QCon San Francisco 2022:

- Honeycomb observed a 50ms median startup time in their lambda functions, with very little difference between hot and cold startups. They tend to (90%) return a response within 2.5 seconds. They are 3 - 4 times more expensive but much more infrequent than EC2s for the same amount of compute.

- Use Lambda for real-time bulk workloads that are urgent

- Make data accessible in the cloud and divide them into parallel workloads

- Before scaling out, tune and optimize properly, use observability layers, and measure (especially cost) carefully

- Last but not least, architecture doesn’t matter unless users are happy.

- Storage Engine: lock Free B+ tree for indexing with log-structured storage; Local disk with SSDs (not Remote Attached); Batching to reduce network/disk IO; Custom allocators to optimize for request patterns; Custom async scheduler with coroutines

- Azure Cosmos DB: Low Latency and High Availability at Planet Scale:

- an example of a customer having a single instance scalability in production with 100 million requests per second over petabytes of storage and globally distributed over 41 regions.

- Understanding your hardware that it depends on, we never span our process across Non-uniform memory access (Numa) nodes. When a process is across the Numa node, memory access latency can increase if the cache misses. Not crossing the Numa node gives our process a more predictable performance.

- Shopify Reducing BigQuery Costs: How We Fixed A $1 Million Query.

- That roughly translated to 75 GB billed from the query. This immediately raised an alarm because BigQuery is charged by data processed per query. If each query scans 75 GB of data, then we’re looking at approximately 194,400,000 GB of data scanned per month. According to BigQuery’s on-demand pricing scheme, it would cost us $949,218.75 USD per month!

- We created a clustered dataset on two feature columns from the query’s WHERE clause. We then ran the exact same query and the log now showed 508.1 MB billed. That’s 150 times less data scanned than the previous unclustered table. That would bring our cost down to approximately $1,370.67 USD per month, which is way more reasonable.

- I can't wait for the pie stack. Have some CAKE: The New (Stateful) Serverless Stack:

- C - CockroachDB Serverless

- A - Authorization, Authentication, Session, and User Management

- K - Kubernetes

- E - Event-driven serverless platforms

- So it's not a stack, it's more of a recipe, which is fine, but a stack should be immediately usable without first deciding on all the ingredients and then having to figure out how to prepare them.

- Also, How to build modern gaming services – with reference architecture.

- What do you do when vertical scaling reaches as high as it can go? Netflix went horizontal. Consistent caching mechanism in Titus Gateway:

- After reaching the limit of vertical scaling of our previous system, we were pleased to implement a real solution that provides (in a practical sense) unlimited scalability of Titus read-only API. We were able to achieve better tail latencies with a minor sacrifice in median latencies when traffic is low, and gained the ability to horizontally scale out our API gateway processing layer to handle growth in traffic without changes to API clients.

- The mechanism described here can be applied to any system relying on a singleton leader elected component as the source of truth for managed data, where the data fits in memory and latency is low.

- The JVM giveth and taketh away. Netflix got some back. Seeing through hardware counters: a journey to threefold performance increase:

- we expected to roughly triple throughput per instance from this migration, as 12xl instances have three times the number of vCPUs compared to 4xl instances. We can see that as we reached roughly the same CPU target of 55%, the throughput increased only by ~25% on average, falling far short of our desired goal. What’s worse, average latency degraded by more than 50%, with both CPU and latency patterns becoming more “choppy.”

- In this blogpost we described how we were able to leverage PMCs in order to find a bottleneck in the JVM’s native code, patch it, and subsequently realize better than a threefold increase in throughput for the workload in question. When it comes to this class of performance issues, the ability to introspect the execution at the level of CPU microarchitecture proved to be the only solution. Intel vTune provides valuable insight even with the core set of PMCs, such as those exposed by m5.12xl instance type.

- How do you go from data to crowd pleasing images? It's a long pipeline. James Webb telescope pictures didn’t begin as stunning images. Here’s how they started out — and how researchers brought them to life:

- For all the meticulous precision that went into the JWST’s design and construction, the data coming from it, in its rawest form, is uneven.

- Thankfully, the JWST engineers have a solution for that — a complete calibration map of how to compensate for the variations in each pixel in every instrument on the Webb telescope.

- supernova87a: I have friends/former colleagues who work on these pipelines, and I can tell you that it's not a stretch to say that there are dozens if not hundreds of people whose entire working lives are about characterizing the sensors, noise, electronics, so that after images are taken, they can be processed well / automatically / with high precision…Every filter, sensor, system has been studied for thousands of person-hours and there are libraries on libraries of files to calibrate/correct the image that gets taken. How do you add up exposures that are shifted by sub-pixel movements to effectively increase the resolution of the image? How to identify when certain periodic things happen to the telescope and add patterns of noise that you want to remove? What is the pattern that a single point of light should expect to be spread out into after traveling through the mirror/telescope/instrument/sensor system, and how do you use that to improve the image quality?

- NimConf 2022 videos are available.

- Events: Fat or Thin. Agree, you generally want fat events because you want to do as much work up front as you can. Events should not drive backend load to rehydrate data that was already available at the time the event was created. It's not a coupling issue because all the code needs to be in sync in any case. If your events are going across system boundaries then your decision criteria may be different. Great discussion on HackerNews.

- It's Time to Replace TCP in the Datacenter

- It is time to recognize that TCP's problems are too fundamental and interrelated to be fixed; the only way to harness the full performance potential of modern networks is to introduce a new transport protocol into the datacenter. Homa demonstrates that it is possible to create a transport protocol that avoids all of TCP's problems.

- Excellent discussion on HackerNews. We could do better than TCP/IP in controlled network scenarios. Same within appliances and racks.

- Also, Aquila: A unified, low-latency fabric for datacenter networks, CliqueMap: Productionizing an RMA-Based Distributed Caching System, Snap: a Microkernel Approach to Host Networking.

- Amazon agrees. They've created SRD (scalable reliable datagram). It focuses more on performance and less on reliability, because you know, a datacenter isn't the internet. SRD offers multi-pathing, microsecond level retries, runs on dedicated hardware (nitro). SRD is an Ethernet-based transport. SRD reduces EBS tail latency, which is key because average latency doesn't matter for data. So better overall performance and massive improvement in edge cases.

- It's all in the set up. Scaling PostgresML to 1 Million ML Predictions per Second on commodity hardware..

- Scaling Mastodon is Impossible. Is that really a bad thing? Though it does seem likely, since centralization has always won, we'll see centralized virtual layers on top of the fediverse.

- How to reduce 40% cost in AWS Lambda without writing a line of code!:

- Higher memory configurations bring very little benefit and high risk.

- The batch size was unchanged. We observed that it will not affect the performance or costs

- The parallelizationFactor was changed from 3 to 2. This setting caused a little delay in queue ingestion but it’s at a three-digit ms level

- The maximumBatching Window in Seconds was changed from 0 to 3. We observed that it only adds 2- digit ms delay to ingestion time and brings significant cost reduction.

- Runtime Architecture is changed from X86_64 to ARM64. This results in no visible performance increase for our case but AWS charges 25% less for ARM64

- we didn’t change the code at all. Welcome to the age of the cloud.

- When Is Serverless More Expensive Than Containers?. At 66 requests per second. That's over 170.2 million requests per month. So you have a lot of head room. App Runner is about 25% the cost of EC2. A good thing to keep in mind is serverless costs are linear with usage. You won't get to that inflection in the curve where you need another level of complexity to cope. They didn't include API gateway costs, which would likely change the numbers.

- Cumulus on How We Decreased Cloud Costs and Increased System Resilience:

- After seeing over 400% increase in our monthly bill, it was clear that we had to completely rethink how we were using the cloud.

- Overall, we lowered our monthly bill by 71%, Perhaps most importantly, the improvements we made yielded a highly resilient system.

- The legacy technologies used in the original system were priced at a premium by service providers.

- Our customers have “bursty” usage patterns.

- We had to anticipate and provision for peak usage levels since scaling our services took too much time.

- Leveraging serverless technologies, we decided to evolve our system from a monolithic structure to serverless microservices

- We shifted our primary storage from a relational database to DynamoDB tables

- Replaced shared-secret authentication with granular IAM policies

- Leveraged AWS Backup to create Point-In-Time-Recovery

- Eliminated AppSync cache by improving backend resources

- Reduced verbose data logging

- Want your Intel processor to be able to do addition this week? You'll soon be able to buy a weekly, monthly or yearly subscription for that. Intel Finalizes 'Intel on Demand' Pay-As-You-Go Mechanism for CPUs.

- Questions to ask on a date with a software engineer. LOL.

- How we reduced our annual server costs by 80% — from $1M to $200k — by moving away from AWS:

- a few years of growth later, we’re handling over 70,000 pages per minute, storing around 560 million pages, and paying well over $1,000,000 per year.

- we were able to cut costs by 80% in a little over three months

- Migrate the cached pages and traffic onto Prerender’s own internal servers and cut our reliance on AWS as quickly as possible. When we did a cost projection we estimated that we could reduce our hosting fees by 40%, and decided a server migration

- When we did a cost projection we estimated that we could reduce our hosting fees by 40%, and decided a server migration

- When the writes to S3 had been stopped completely, Prerender saved $200 a day on S3 API costs

- In the last four weeks, we moved most of the cache workload from AWS S3 to our own Cassandra cluster. The daily cost of AWS was reduced to $1.1K per day, projecting to 35K per month, and the new servers’ monthly recurring cost was estimated to be around 14K.

- The true hidden price for AWS is coming from the traffic cost, they sell a reasonably priced storage, and it’s even free to upload it. But when you get it out, you pay an enormous cost. In our case, it was easy around the $30k — $50k per month. By the end of phase two, we had reduced our total monthly server costs down by 41.2%.

- This step involved moving all the Amazon RDS instances shard by shard. Our monthly server fees dropped below our initial estimate of 40% to a full 80% by the time all the cached pages were redirected.

- P99 Conf videos are available. Looks like lots of good content.

- How we built Pingora, the proxy that connects Cloudflare to the Internet:

- Today we are excited to talk about Pingora, a new HTTP proxy we’ve built in-house using Rust that serves over 1 trillion requests a day, boosts our performance, and enables many new features for Cloudflare customers, all while requiring only a third of the CPU and memory resources of our previous proxy infrastructure.

- We chose Rust as the language of the project because it can do what C can do in a memory safe way without compromising performance.

- The next design decision was around our workload scheduling system. We chose multithreading over multiprocessing in order to share resources, especially connection pools, easily. We also decided that work stealing was required to avoid some classes of performance problems mentioned above.

- We decided to implement a “life of a request” event based programmable interface similar to NGINX/OpenResty

- Overall traffic on Pingora shows 5ms reduction on median TTFB and 80ms reduction on the 95th percentile. The savings come from our new architecture which can share connections across all threads. This means a better connection reuse ratio, which spends less time on TCP and TLS handshakes. Pingora makes only a third as many new connections per second compared to the old service. For one major customer, it increased the connection reuse ratio from 87.1% to 99.92%, which reduced new connections to their origins by 160x.

- Pingora consumes about 70% less CPU and 67% less memory compared to our old service with the same traffic load.

- Pingora crashes are so rare we usually find unrelated issues when we do encounter one.

- Walmart shows all the optimizations that can be applied to any write-heavy API. Scaling the Walmart Inventory Reservations API for Peak Traffic.

- Scatter-Gather the API requests with a sticky session so a database partition is always processed by the same instance.

- In-Memory concurrency using actor pattern with mailbox to restrict the processing of a single partition to a single thread. This also helps with batch processing of the same partition requests.

- In-Memory snapshot state caching to reduce the number of reads.

- Videos from ServerlessDays New York 2022 are available.

- A useful discussion about what zero trust means and why you want it. The US government certainly does. OMB Zero Trust Memo, with Eric Mill. It means removing implicit trust inside the network. You don't log in at layer 3 or layer 4 and then have trust inside the network. You still have single-sign-on, but you log in at the application layer. Zero trust is about least privilege and getting more information about the users using the system. Pushing unencrypted on the internal network is putting a lot of implicit trust in the system. HTTPS everywhere.

Soft Stuff:

- Kiteco: Kite open sourced a lot of their code for AI code generation.

- Dark: a new way of building serverless backends. Just code your backend, with no infra, framework or deployment nightmares.

- gluesql: a SQL database library written in Rust.

- This looks very nice. FlashDB: An ultra-lightweight embedded database. Key-value database. Time Series Database.

- Supabase: an open source Firebase alternative. Start your project with a Postgres database, Authentication, instant APIs, Edge Functions, Realtime subscriptions, and Storage.