I write a lot about investigations into tricky bugs – CPU defects, kernel bugs, transient 4-GB memory allocations – but most bugs are not that esoteric. Sometimes tracking down a bug is as simple as paying attention to server dashboards, spending a few minutes in a profiler, or looking at compiler warnings.

Here then are three significant bugs which I found and fixed which were sitting in the open, just waiting for somebody to notice.

Server CPU Surprise

A few years ago I spent a few weeks doing some memory investigations on live game servers. The servers were running Linux in distant data centers so much of my time was spent getting permissions so I could tunnel to the servers, and learning how to effectively use perf and other Linux diagnostic tools. I found a series of bugs that were causing memory usage to be triple what it needed to be and I fixed those:

A few years ago I spent a few weeks doing some memory investigations on live game servers. The servers were running Linux in distant data centers so much of my time was spent getting permissions so I could tunnel to the servers, and learning how to effectively use perf and other Linux diagnostic tools. I found a series of bugs that were causing memory usage to be triple what it needed to be and I fixed those:

- I found map ID mismatches which meant that a new copy of ~20 MB of data was loaded for every game instead of being reused

- I found an unused (!) 50 MB (!!) global variable that was memset to zero (!!!), thus ensuring it consumed per-process physical RAM

- Miscellaneous smaller fixes

But that’s not what this story is about.

Having taken the time to learn how to profile our game servers I figured I should poke around a bit more, so I ran perf on the servers for one of our other games. The first server process that I profiled was… odd. The live view of the CPU sampled data showed that a single function was consuming 100% of the CPU time. Within that function it seemed to show that just fourteen instructions were executing. But that didn’t make sense.

My first assumption was I was using perf incorrectly or was misinterpreting the data. I looked at some other server processes and found that roughly half of them were in this strange state. The other half had CPU profiles that looked more normal.

The function in question was traversing a linked list of navigation nodes. I asked around and found a programmer who said that floating-point precision issues might cause the game to generate navigation lists with loops. They’d always meant to cap how many nodes would be traversed but had never got around to it.

So, mystery solved, right? Floating-point instabilities cause loops in the navigation lists, the game traverses them endlessly, the behavior is explained.

But… this explanation meant that whenever this happened the server process would get into an infinite loop, all players would have to disconnect, and the server process would consume an entire CPU core indefinitely. If that was happening wouldn’t we eventually run out of resources on our server machines? Wouldn’t somebody have, you know, noticed?

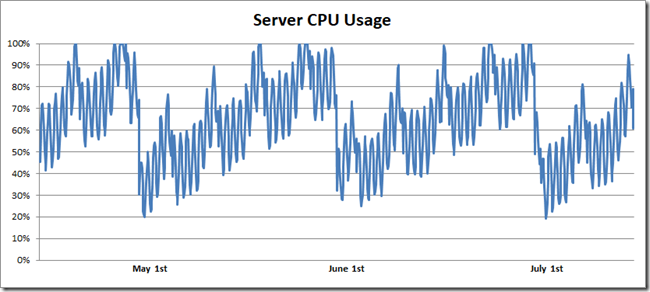

I tracked down the server monitoring and found a graph that looked something like this:

As far back as the monitoring went (a year or two) I could see the daily and weekly fluctuations of server load, and overlaid on that was a monthly pattern. CPU usage would gradually increase and then drop back down to zero. A bit more asking around revealed that the server machines were rebooted once a month. And finally everything made sense:

- On any particular run of a game there was a small chance that the server process would get stuck in an infinite loop

- When this happened the players would disconnect and the server process would stay in this loop until the machines were rebooted at the end of the month

- The CPU monitoring dashboard clearly showed that this bug was reducing server capacity by about 50% on average

- Nobody ever looked at the monitoring dashboard

The fix was a few lines of code to stop traversing after twenty navigation nodes, presumably saving a few million dollars in server and power costs. I didn’t find this bug by looking at the monitoring graphs, but anybody who looked at them could have.

I love that the frequency of the bug was perfectly set to maximize the cost without ever quite causing serious enough problems for it to be caught. It’s like a virus which evolves to make people cough, but not kill them.

Slow startup

Software developer productivity is intimately tied to the latency of the edit/compile/link/debug cycle. That is, having made a change to a source file how long does it take to be running a new binary with that change incorporated? I’ve done a lot of work over the years on reducing compile/link times, but startup times are also important. Some games do a huge amount of work every time they are launched. I’m impatient and I’m often the first person to spend a few hours or days trying to make game startup run a few seconds faster.

Software developer productivity is intimately tied to the latency of the edit/compile/link/debug cycle. That is, having made a change to a source file how long does it take to be running a new binary with that change incorporated? I’ve done a lot of work over the years on reducing compile/link times, but startup times are also important. Some games do a huge amount of work every time they are launched. I’m impatient and I’m often the first person to spend a few hours or days trying to make game startup run a few seconds faster.

In this case I ran my favorite profiler and looked at the CPU usage during the initial load. There was one stage that looked the most promising: about ten seconds spent initializing some lighting data. I was hopeful that there might be some way to speed up those calculations and maybe save five seconds or so from startup time. Before digging in too deeply I consulted with the graphics expert. They said:

“We don’t use that lighting data in this game” – “Just remove the call.”

Oh. Well. That was easy.

With half an hour of profiling and a one-line change the launch time to the main menu was cut in half, with no extraordinary effort required.

An ill-timed crash

The variable arguments in printf formatting means that it is easy to get type mismatches. The practical results vary considerably:

- printf(“0x%08lx”, p); // Printing a pointer as an int – truncation or worse on 64-bit

- printf(“%d, %f”, f, i); // Swapping float and int – could print nonsense, or might actually work (!)

- printf(“%s %d”, i, s); // Swapping the order of string and int – will probably crash

The standard says that these mismatches are undefined behavior so technically anything could happen, and some compilers will generate code that intentionally crashes on any of these mismatches, but these are some of the most likely results (aside: understanding why #2 often prints the desired result is a good ABI puzzle).

These mistakes are very easy to make so modern compilers all have ways to warn developers when they have a mismatch. gcc and clang have annotations for printf-style functions and can warn on mismatches (although, sadly, the annotations don’t work on wprintf-style functions). VC++ has (different, unfortunately) annotations that /analyze can use to warn on mismatches, but if you’re not using /analyze then it will only warn on the CRT-defined printf/wprintf-style functions, not your own custom functions.

The company I was working at had annotated their printf-style functions so that gcc/clang would emit warnings but had then decided to ignore the warnings. This is an odd decision since these warnings are 100% reliable indicators of bugs – the signal-to-noise ratio is infinite.

I decided to start cleaning up these bugs using VC++’s annotations and /analyze to make sure I found all of the bugs. I’d worked my way through most of the errors and had one final change waiting for code-review before I submitted it.

That weekend we had a power outage at the data center and all of the servers went down (there may have been some power-configuration mistakes). The on-call people scrambled to get things back up and running before too much money was lost.

That weekend we had a power outage at the data center and all of the servers went down (there may have been some power-configuration mistakes). The on-call people scrambled to get things back up and running before too much money was lost.

The funny thing about printf bugs is that they usually misbehave 100% of the time that they are executed. That is, if they are going to print incorrect data or crash then they usually do it every time that they run. So, the only way that these bugs stick around is if they are in logging code that is never read, or error handling code that is rarely executed.

It turns out that “restarting all servers simultaneously” hits some code paths that are not normally executed. Servers that are starting up go looking for other servers, can’t find them, and print a message like:

fprintf(log, “Can’t find server %s. Error code %d.\n”, err, server_name);

Oops. Variadic arguments mismatch. And a crash.

The on-call people now had an additional problem. The servers needed to be restarted, but that couldn’t be done until the crash dumps were examined, the bug was found, the bug was fixed, the server binaries rebuilt, and a new build deployed. This was a pretty quick process – a few hours I believe – but an entirely avoidable one.

The on-call people now had an additional problem. The servers needed to be restarted, but that couldn’t be done until the crash dumps were examined, the bug was found, the bug was fixed, the server binaries rebuilt, and a new build deployed. This was a pretty quick process – a few hours I believe – but an entirely avoidable one.

It felt like the perfect story to demonstrate why we should take the time to resolve these warnings – why ignore warnings that tell you that code will definitely crash or misbehave when executed? However nobody seemed to care that fixing this class of warnings would provably have saved us a few hours of downtime. Actually, the company culture didn’t seem interested in any of these fixes. But it wasn’t until this last bug that I realized I needed to move on to a different company.

Lessons?

If everyone on a project spends all of their time heads-down working on the features and known bugs then there are probably some easy bugs hiding in plain sight. Take some time to look through the logs, clean up compiler warnings (although, really, if you have compiler warnings you need to rethink your life choices), and spend a few minutes running a profiler. Extra points if you add custom logging, enable some new warnings, or use a profiler that nobody else does.

And if you make brilliant fixes that improve memory/CPU/stability and nobody cares, maybe find a company where they do.

Hacker news discussion here, reddit discussion here, twitter discussion here.

/analyze is a pain in CI because the build has already succeeded by that point. I want my builds to fail if certain /analyze issues are detected.

Similarly, it’s inexcusable that printf-style type mismatches are not compiler warnings (or errors) in VC++.

Back when I used /analyze regularly I ran a filter on the output and would fail the build if certain issues were detected – including printf-style format-string warnings and other accurate warnings. Failing on all /analyze warnings is no good because it has too many unreliable warnings – such a pity.

It’s great progress that VC++ can detect mismatches on printf itself, but it sure would be great if they extended that to printf-style functions.

Great post Bruce, thanks for sharing!

“And if you make brilliant fixes that improve memory/CPU/stability and nobody cares, maybe find a company where they do.” -Bruce

That is exactly how I felt in the last year and hence I’ve moved on… 😀

Good for you! I hope that your work is better appreciated now

“These mistakes are very easy to make so modern compilers all have ways to warn developers when they have a mismatch.”

Cleverly, F# has static type-checking into printf format strings. Errors there are type errors, and will halt compilation!

(You can still pass dynamic format strings to printf at runtime, but it requires an extra call to wrap it, so it’s an easy “dangerous” operation to search for, and nobody does it by accident.)

“And if you make brilliant fixes that improve memory/CPU/stability and nobody cares, maybe find a company where they do.”

That sounds great in principle. I’ve been in the field for 20 years, and I’m still looking. And I’ve worked in aerospace — they were one of the worst. If they don’t care, I have trouble believing anyone does.

Google cares. Code quality at Google is understood to be of high importance. When I have found things which I think need fixing I rarely have to spend any time convincing my coworkers to make or approve the change. Over-the-top improvements (where the complexity or tradeoffs are too great given the benefits) still get pushback, but in a way that feels appropriate. I guess that means that my beliefs are aligned with Google’s.

It is ultimately a matter of agreement between your personal code-quality beliefs and those of your coworkers, so the perfect match will vary from person to person.

Fortunately where I work they care, they have processes, and they abide by the processes. And if the process is broken, they fix it. If we don’t do our jobs right, people’s electricity might go out when it shouldn’t, so we take what we do seriously. Sometimes it can feel a bit suffocating since we spend so much time on process stuff, but at the end of the day I’m grateful to work for a company that actually cares.

As an example, we recently spent an inordinate amount of time doing ‘peer’ reviews of some third party code that we use which recently updated because apparently the vendor we purchased from doesn’t do their own reviews.

We found several critical bugs (and *countless* style problems…), and it only cost us several man months to find and test for them. 😦

If the company had done reviews themselves I’m sure they would have recognized the bugs in days and never released the bugs we found since they actually understand the code and the domain.

Unfortunately we can’t really switch to another vendor because the cost of switching is higher than the occasional review (we’ve been using their code for at least 20 years, and they were the best option back then), but boy would it be nice to not have to relive the last month of my life again…

Thanks for the story. I’m glad you found a job where the corporate level of caring is a good match for you. That is very important.

In a highly regulated environment you will find many (coding) rules as a result to get a certification of a government agency to release your product. In the past Quality Management caused often a corset of rules which did increase code quality only on paper. The auditors check if you have a fully described Quality Management Process and and everyone codes against your own rules based on a a few samples. The idea is that no auditor is smart enough to fully understand your product and can find your errors. Instead they audit on a meta level which only checks if your rules to prevent harm are properly implemented. Code quality is not part of that process as long as no one gets harmed. What I miss is a feedback loop from the implementers of technology back to the auditors if the implemented rules are doing more harm than good. Many rules are only there to cover someones job so he does not need to take the blame when something goes wrong. That is the main problem. As long as this attitude is dominating your processes you have a code quality problem.

Heh. I have heard of the “too much process” problem, and let’s just say that that was not the problem at the company that these stories are from.

I don’t have any great advice for somebody who is at a “too much process” company. Try to change the rules? Game the system? Rest and vest? Quit? I guess we all have to make our own decisions. Maybe the most important thing to understand is that different attitudes towards quality and bugs do exist, and developers therefore do have choices, because we can usually change employers.

people complained when I added a lint task to our build pipelines and it blocked builds with bad code, and I was forced to create separate lint pipelines which people ignore 😦

I also documented the setting up of git pre-commit linting hooks, which the developers won’t do.

What was the signal-to-noise ratio of the lint errors? It needs to be very high (ideally 100%, or 95% with easy ways to disable the other 5%) to avoid being frustrating.

Having the warnings be part of the local build cycle is ideal. Having it as part of the commit queue (prior to commit) is second best. Having it run after commit is worst, but can still be valuable. I have struggled with this balancing act – not always perfectly – when trying to get as much value as possible from compiler warnings and static analysis. Some tools (/analyze, for instance) make this balance harder to achieve.

ad Slow Startup – Once I was handling an issue that our over-night analyses was taking more then 6 hours and getting worse from day to day. I went to inspect what was going on, because indeed 30 minutes would be OK, but 6 hours that’s quite a lot of computational time spent there. After a while I realized that we have an array of about 5M items, and about 10M times we’re doing a look-up in there with O(n). And the lookup was string-based using ‘myitem#123’ string format. Changed it to an O(log(n)) lookup using just the integer. Went down to below 1 second in execution time 🙂

Good one rezna! 🙂 Lower quality developers misusing data structures and algorithms… What a surprise! 😀

You reminded me of a contract I took as a student. The accounting professor had requested a database setup from a previous student for an accounting teaching software that had to support multiple languages. So, that student created rows for each account using their textual name (in English) as the primary key! Of course, when he got to the other languages, he made new rows using names in the other languages! I just couldn’t believe it… especially since all those accounts had formal numbers attached to them that are standard in accounting! Anyway, it was a quick job to clean all of that and remove all the duplication by simply using those fixed integers as primary keys instead.

From six hours to under a second? Wow! More than four orders of magnitude improvement sounds like a good day

Yep, I even had to write a test because I couldn’t believe that myself. But the O(n) container was really slow. It was an object that was designed for loading small configuration pieces mainly, it was not designed for speed, it was designed for nice operations on small sets of items you sometimes had to merge/update with another set. But some developers found it “useful” also for large arrays of data 🙂