Top Alternatives to Kubernetes to Overcome Business Challenges

Managing concurrent configurations and continuous delivery at scale needs tooling that works out of the box! For Airbnb, that tool was Kubernetes. Airbnb paired it with an internal tool to support 1,000 engineers, and Kubernetes helped them run 500 deployments per day across 250 critical services.

However, it is not a magic wand and comes with its own challenges. For example, implementing security policies in Kubernetes gets harder as clusters grow large. Fortunately, many alternatives to Kubernetes, such as Azure Kubernetes Service and Rancher, provide role-based access control (RBAC). However, the right alternative depends on your specific business needs, so choosing one can be tricky for many businesses.

So, what’s the way out?

As a decision-maker, the first question on your mind is probably: why do you need an alternative at all? That is why analyzing the challenges of Kubernetes and their impact is key to the decision. Below, we walk through those challenges and then help you choose the best alternative to overcome them.

Top challenges of Kubernetes

Kubernetes provides scalability, reduced IT costs, and shorter release timeframes. However, that does not mean Kubernetes is free of bottlenecks. According to research by D2iQ, 94% of surveyed participants believed that Kubernetes added complexity to their organizations. Identifying these challenges can help you choose a suitable alternative.

#1. Application consistency

Containers are temporary, and a service is kept running by a group of them rather than by a single one. For stateless apps, this is manageable: you can use a YAML template to create a manifest that describes the desired state, and Kubernetes works to maintain it.
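For illustration, the desired state of a stateless app can be expressed as a minimal `apps/v1` Deployment. The sketch below builds that manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just as it accepts YAML. The app name `web`, the image `nginx:1.25`, and the replica count are hypothetical.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired number of identical pods
            "selector": {"matchLabels": labels},  # which pods this Deployment owns
            "template": {
                "metadata": {"labels": labels},  # must match the selector above
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

If a pod dies, the controller notices that fewer than three matching pods exist and starts a replacement, which is why this works well for stateless apps and less well when the pod held state.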

However, if your application is stateful, data persistence is complex in Kubernetes. Further, data is fragmented across many services, data centers, environments, and more.

This is where temporary containers become problematic: when a container terminates, everything stored inside it, including the app’s state and configuration files, is deleted. Maintaining the app’s state and consistency therefore becomes challenging, so you need an alternative to Kubernetes that provides higher app consistency.

Another option is to design an ephemeral-only solution. However, that can create massive infrastructure management challenges, an area where Kubernetes already has issues.

#2. Infrastructure management

Kubernetes deployments can range from 500-600 clusters to as many as 15,000-20,000 clusters, many of them single-node clusters. Each node is a virtual or physical machine. As the number of clusters grows, so does the work of managing, tracking, and maintaining them.

Further, unplanned downtime caused by the failure of a single container is time-consuming to fix, and it takes more effort to resolve than planned downtime. So, you need a solution with better multi-cluster visibility and configuration management.

The easiest way is to find a Kubernetes alternative that allows you to view, manage, and consolidate clusters across environments. However, how pressing this is depends on your cluster size and operational needs.

The proliferation of data is inevitable with a growing number of clusters. It affects both infrastructure management and scalability.

#3. Scaling Kubernetes

Kubernetes does offer scalability, which is why many organizations use it. However, dynamic scaling poses a challenge. Kubernetes comes with a pre-built Horizontal Pod Autoscaler (HPA), and the difficulty lies in configuring it.

The HPA automatically scales pods up or down to match demand. However, if you bind an HPA directly to a ReplicationController (or a ReplicaSet) and then perform a rolling update, the update creates a new controller that the HPA is not bound to, so autoscaling stops applying to the new version. The usual fix is to attach the HPA to a Deployment instead.

A ReplicationController is the structure that replaces pods, especially when one of them crashes. So, while the HPA auto-scales pods, it does not replace crashed pods itself; that remains the controller’s job, which is why the two must stay correctly linked.
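To make the scaling behavior concrete: the Kubernetes documentation describes the HPA’s core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no scaling when the ratio is within a tolerance (0.1 by default) of 1.0. The function below is a simplified sketch of that rule for a single metric; the real controller also accounts for unready pods, missing metrics, and stabilization windows. The `min_replicas` and `max_replicas` parameters stand in for the HPA’s `minReplicas` and `maxReplicas` fields.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified single-metric HPA calculation."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Close enough to the target: keep the current replica count.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the HPA object.
    return max(min_replicas, min(max_replicas, desired))
```

For example, `desired_replicas(4, 90, 60)` (four pods at 90% CPU against a 60% target) returns 6.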

If a fail-safe system is essential for your organization, choosing an alternative to Kubernetes makes sense. Infrastructure management also affects the total cost of ownership, another critical challenge of Kubernetes.

#4. The total cost of ownership

Kubernetes can reduce operations and development costs by automating several tasks. However, in a survey by FinOps/CNCF, 68% of respondents reported increased Kubernetes costs.

Kubernetes makes it easy to spin up code from existing repositories for rapid time-to-market. However, such code may not be optimized for resource usage. So, as applications scale up or down, resources are wasted because of suboptimal resource settings.

A survey by StormForge on cloud spending for 2021 indicates that 48% of cloud resources are wasted due to Kubernetes-related complexity. This waste increases the total cost of ownership (TCO) for any organization. Therefore, the best option is to choose an alternative to Kubernetes with minimal TCO.
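A quick back-of-the-envelope calculation shows how over-provisioning turns into waste: Kubernetes reserves a pod’s CPU request on its node whether or not the pod uses it. The workloads and numbers below are hypothetical, and the model deliberately ignores memory and bin-packing effects.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cpu_request_millicores: int  # CPU reserved for the pod on its node
    cpu_usage_millicores: int    # average CPU the pod actually uses


def idle_fraction(workloads: list) -> float:
    """Share of requested CPU that is reserved (and paid for) but not used."""
    requested = sum(w.cpu_request_millicores for w in workloads)
    # Usage above the request is not idle capacity, so cap it at the request.
    used = sum(min(w.cpu_usage_millicores, w.cpu_request_millicores) for w in workloads)
    return (requested - used) / requested if requested else 0.0


workloads = [
    Workload("checkout-api", cpu_request_millicores=1000, cpu_usage_millicores=300),
    Workload("report-worker", cpu_request_millicores=1000, cpu_usage_millicores=500),
]
print(f"Idle share of requested CPU: {idle_fraction(workloads):.0%}")  # prints 60%
```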

Monitoring to track resource usage helps reduce TCO, but you also need it for security purposes. Kubernetes has security issues, making it challenging for many organizations to secure their containers.

#5. Securing Kubernetes

Kubernetes does not come with an Identity and Access Management (IAM) capability out of the box. So, monitoring access is complex if several teams and users work with the app across multiple environments.

Another critical challenge is to ensure that security patches reach every container within a short time. As cluster size grows, adding security patches across servers and enforcing policies becomes challenging.

Security issues in Kubernetes are not uncommon. For example, according to a Red Hat survey, 55% of respondents had to delay their app releases due to security issues in Kubernetes. So, the security issues in Kubernetes can impact the overall time to market.

Choosing an alternative with advanced security features is the best option. You can also implement RBAC (Role-Based Access Control) to manage data access and enforce security policies, or integrate third-party services to monitor activity across Kubernetes clusters. However, the latter depends on the interoperability of Kubernetes.
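As an illustration of the RBAC option, the Kubernetes RBAC API grants permissions through a `Role` and attaches them to users with a `RoleBinding`. The sketch below builds a read-only role for pods and binds it to one user; the namespace `team-a` and user `jane` are hypothetical, and the printed JSON can be applied with `kubectl apply -f`.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str) -> dict:
    """Namespaced Role that can only read pods."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": "pod-reader"},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group, where pods live
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],  # read-only access
        }],
    }


def bind_role(namespace: str, user: str, role_name: str) -> dict:
    """RoleBinding that grants the named Role to a single user."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": f"{role_name}-{user}"},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


role = pod_reader_role("team-a")
binding = bind_role("team-a", "jane", role["metadata"]["name"])
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, binding]}, indent=2))
```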

#6. Interoperability issues

Kubernetes is not interoperable by default with other services and applications. For example, enabling cloud-native apps on Kubernetes makes the communication between applications complex.

Further, due to the lack of native API management in Kubernetes, tracking the behavior of applications and containers is tricky. Native API management enables better traffic visualization and service-to-service communications.

You can connect cloud-native apps to external services through the Open Service Broker API. Alternatively, you can choose an alternative to Kubernetes that is interoperable with native cloud services.

You may have noticed that each challenge has one easy solution: choosing an alternative to Kubernetes. Managed container services come in many different forms.

For example, you can choose from services like Kubernetes-as-a-service (KaaS), Platform-as-a-Service (PaaS), and Container-as-a-Service (CaaS). Let’s discuss some service providers who serve as an alternative to Kubernetes.

Alternatives to Kubernetes: Container as a Service (CaaS)

Container as a Service (CaaS) is a cloud service that lets you add, remove, start, stop, and scale containers. It removes the need to manage container orchestration and the underlying cloud services at the same time.

So, you can automate container deployments without worrying about the underlying infrastructure. However, the learning curve is the most significant aspect of choosing a CaaS provider as an alternative to Kubernetes. CaaS platforms are simpler to start with, providing fully managed services. Some of the best CaaS providers are:

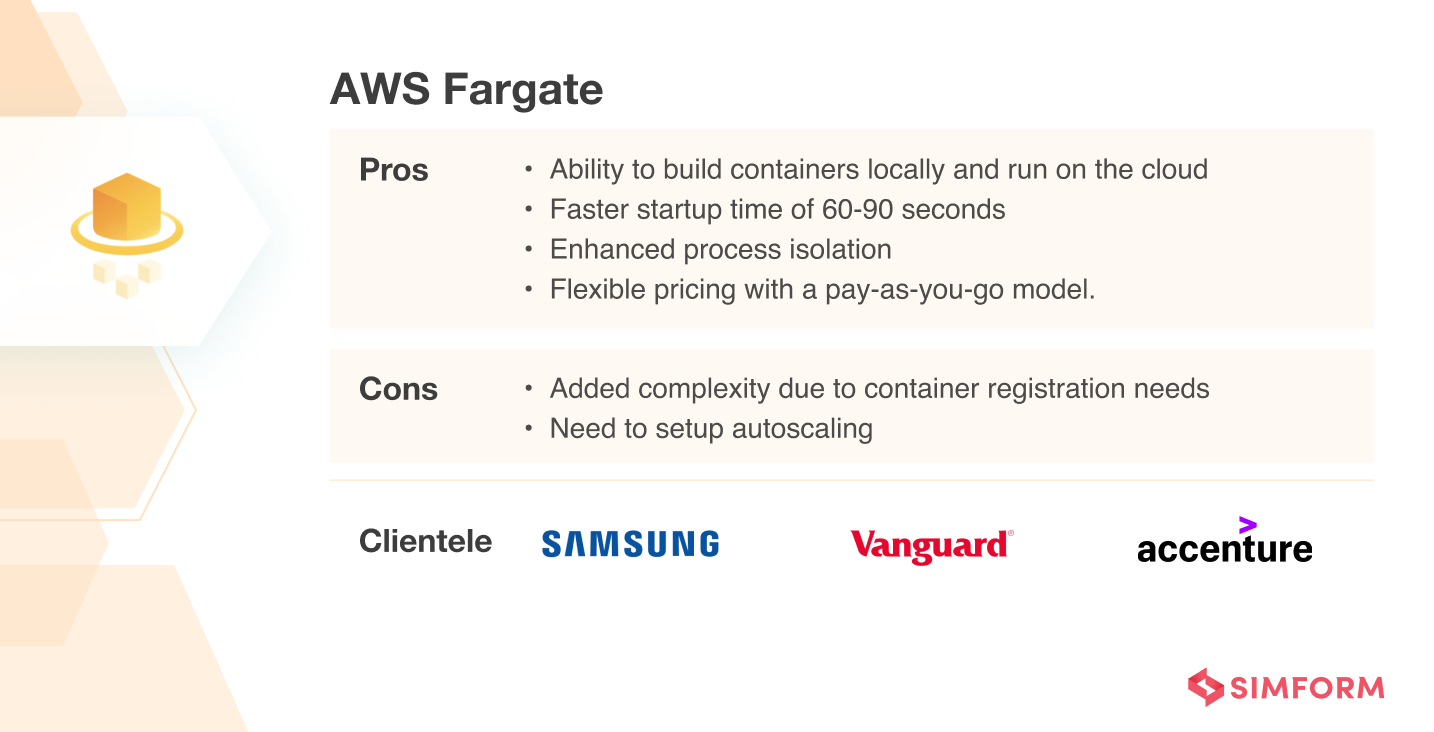

AWS Fargate

AWS Fargate is a serverless compute engine that lets you build containers locally with Docker and run them in the cloud. It’s an easy way to deploy containers on AWS. All you need to do is follow these steps:

- Build a container image

- Specify the compute and memory requirements

- Define security and data access policies

- Launch containers

As you can observe, using Fargate is straightforward, making it an excellent alternative to Kubernetes.

Pros

- Build containers locally with Docker and run on the cloud

- Faster startup time of 60-90 seconds for containers

- Process isolation as all the tasks run without sharing the underlying kernel

- AWS integration allows usage of different cloud services

- Flexible pricing with a pay-as-you-go model.

Cons

- Added complexity due to the need to register containers as task definitions in ECS

- Autoscaling must be set up separately, as it does not come pre-built

When to use Fargate?

AWS Fargate is an ideal alternative to Kubernetes if you want your applications to adjust to traffic. For example, eCommerce apps see peak traffic during sales such as Cyber Monday. During off-peak hours, on the other hand, fixed capacity leaves you paying for unused resources. Because Fargate bills for the compute and memory your containers use, it helps reduce wasted resources.
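The pay-as-you-go argument can be checked with simple arithmetic. Fargate bills for the vCPU and memory your tasks request for as long as they run, so scaling tasks with traffic cuts cost roughly in proportion to the task-hours saved. The rates below are hypothetical placeholders, not current AWS prices, and the traffic pattern is invented for illustration.

```python
# Hypothetical placeholder rates (USD); NOT actual AWS Fargate prices.
VCPU_HOUR_RATE = 0.04
GB_HOUR_RATE = 0.004


def fargate_cost(task_hours: float, vcpu: float = 1.0, memory_gb: float = 2.0) -> float:
    """Cost of running tasks of a given size for a number of task-hours."""
    return task_hours * (vcpu * VCPU_HOUR_RATE + memory_gb * GB_HOUR_RATE)


# Always sized for peak: 10 tasks running all 24 hours.
peak_provisioned = fargate_cost(task_hours=10 * 24)
# Scaled with traffic: 2 tasks for 20 off-peak hours, 10 tasks for 4 peak hours.
scaled = fargate_cost(task_hours=2 * 20 + 10 * 4)

print(f"Peak-provisioned: ${peak_provisioned:.2f}/day, scaled: ${scaled:.2f}/day")
```

Whatever the real rates are, the scaled setup here uses 80 task-hours instead of 240, so it costs about a third as much.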

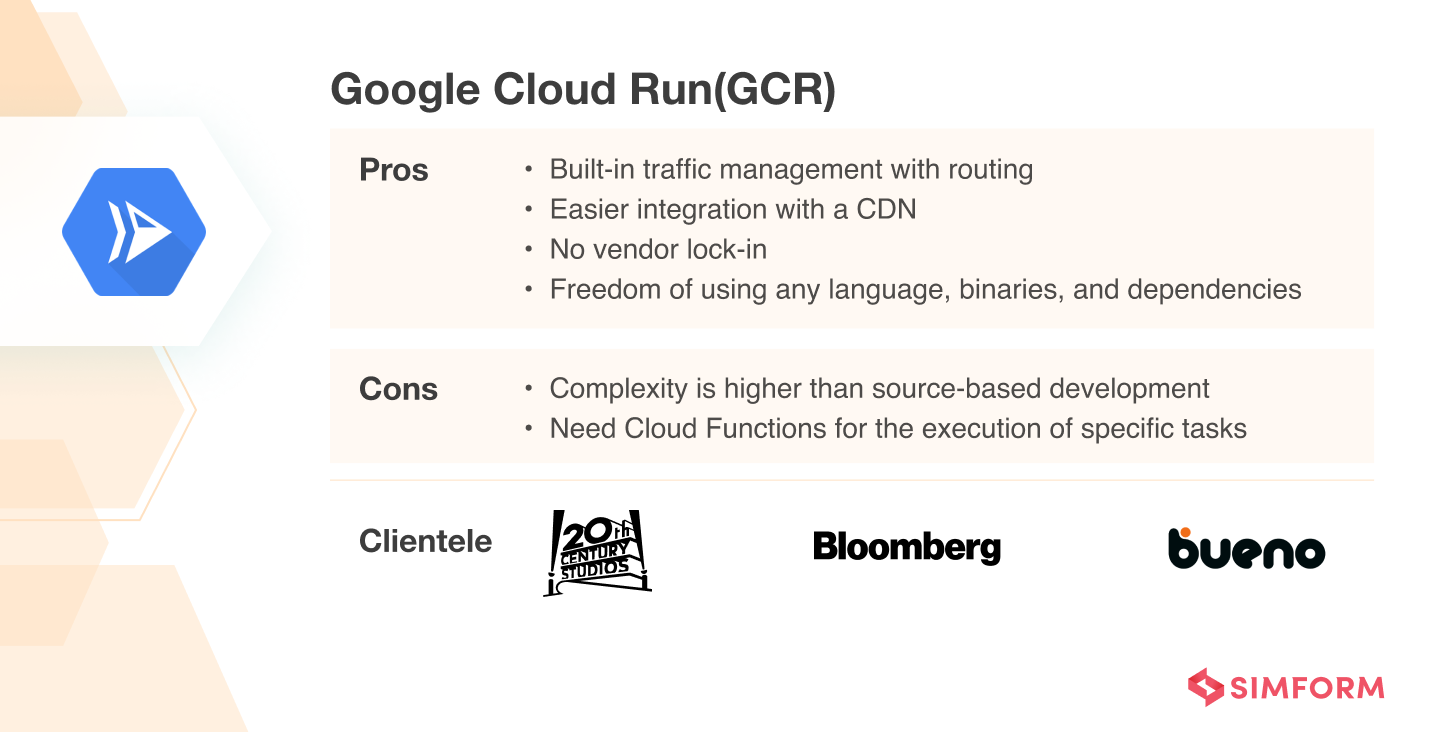

Google Cloud Run (GCR)

Google Cloud Run (GCR) is a serverless container platform that allows you to create, manage, and deploy containers on the Google Cloud Platform. It does not require GKE clusters for the deployment of containers.

GCR is a stand-alone and fully managed CaaS service. It abstracts the infrastructure management and allows developers to focus on building apps through containers.

GCR allows you to develop and deploy highly scalable containerized applications in programming languages like Go, Python, Java, and .NET.

A Cloud Run service provides the infrastructure required to run a reliable HTTPS endpoint. All you need is code that listens on a TCP port and handles HTTP requests.
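To illustrate that contract, here is a minimal sketch using only Python’s standard library. Cloud Run tells the container which port to listen on through the `PORT` environment variable (8080 by default); the handler class and response text are hypothetical.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text body."""

    def do_GET(self):
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> HTTPServer:
    # Listen on all interfaces so traffic routed into the container reaches it.
    return HTTPServer(("0.0.0.0", port), HelloHandler)


def main() -> None:
    # Read the port from the environment, falling back to 8080 for local runs.
    port = int(os.environ.get("PORT", "8080"))
    make_server(port).serve_forever()
```

The container image’s entry point would simply call `main()`; Cloud Run then puts its managed HTTPS endpoint in front of this server.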

It comes with built-in scale-up capabilities that can go up to 1000 container instances. Further, you can request more instances as per requirements. The best part about GCR is how it terminates the instances once there is no need for them, reducing the overhead costs of idle resources.
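A simplified model of request-based scaling helps explain both the ceiling and the scale-to-zero behavior. It assumes each instance handles up to 80 concurrent requests (Cloud Run’s default concurrency setting) and caps out at the 1,000-instance figure mentioned above; the real autoscaler also weighs CPU utilization and other signals, so treat this as an approximation.

```python
import math


def instances_needed(concurrent_requests: int, concurrency_per_instance: int = 80,
                     max_instances: int = 1000) -> int:
    """Approximate instance count for a given number of in-flight requests."""
    if concurrent_requests <= 0:
        return 0  # no traffic: scale to zero, so idle instances cost nothing
    needed = math.ceil(concurrent_requests / concurrency_per_instance)
    return min(max_instances, needed)  # respect the configured ceiling
```

For example, 81 concurrent requests need 2 instances, while 200,000 would hit the 1,000-instance cap.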

Pros

- Built-in traffic management with routing to a new immutable version or rollback feature to the older version.

- Easier integration with a CDN allows the serving of cacheable assets at locations closer to clients

- Reduced vendor lock-in, as standard containers can be moved to any platform

- Freedom of using any language, binaries, and dependencies

- The capability of performing both remote and local testing of containers

Cons

- Complexity is higher than source-based development

- Need Cloud Functions for the execution of specific tasks

When to use Google Cloud Run?

GCR is an ideal solution if you are developing an application that requires an HTTP server as part of its architecture and you want to leverage containerization.

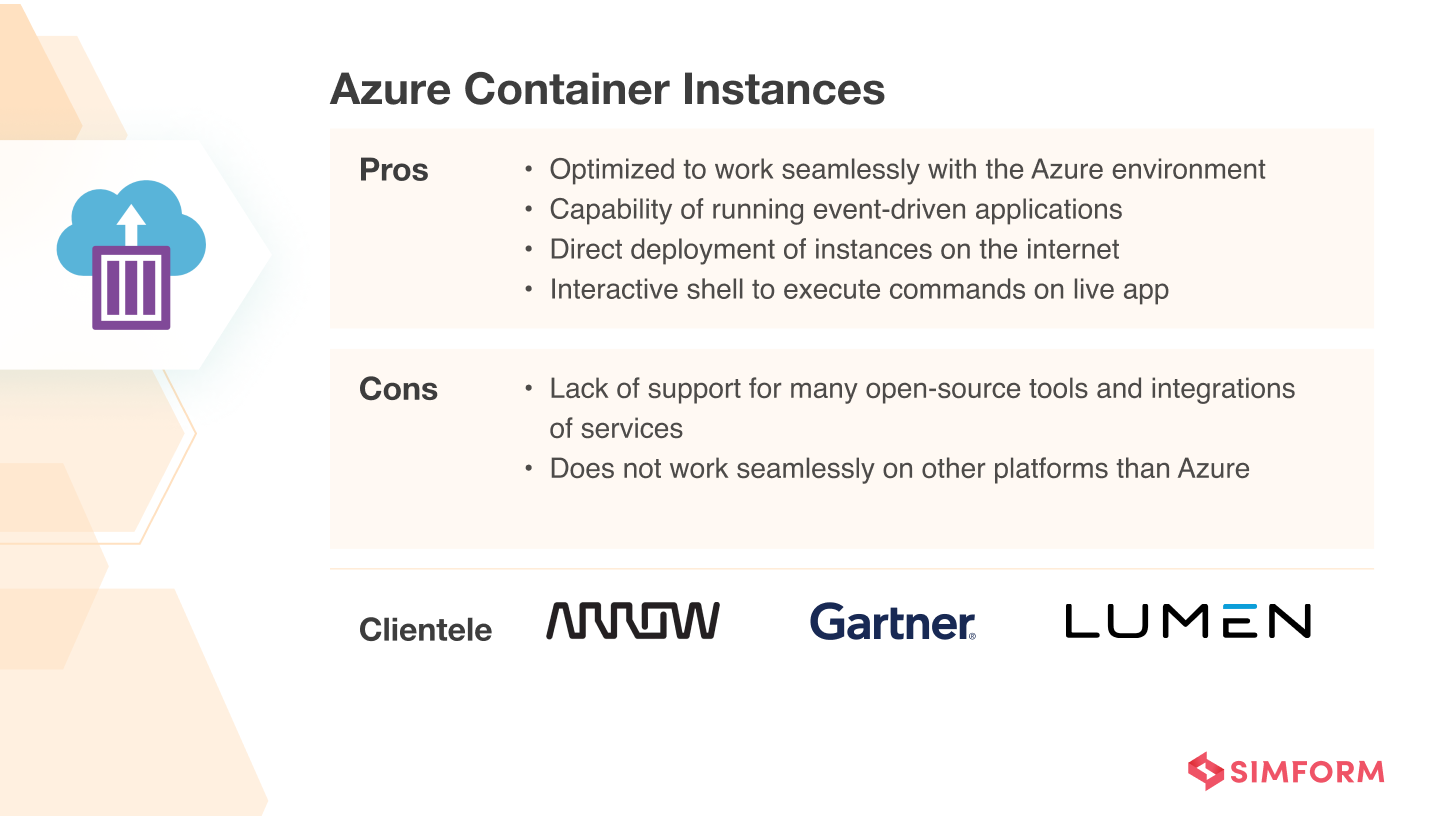

Azure Container Instances (ACI)

ACI provides on-demand containers in a managed, serverless Azure environment and lets you run isolated containers without orchestration. With ACI, you can rapidly run event-driven applications, data processing, and build jobs. All you need is a container image in a private Azure Container Registry or another Docker-based registry.

ACI can expose container groups directly to the internet, and you can give your instances a custom DNS name. Further, you can execute commands on the live application through an interactive shell. ACI uses resources efficiently because you specify exactly how many CPU cores and how much memory your containers need.
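As a sketch of that CPU and memory specification, the dictionary below mirrors the shape of an ACI container group resource (`Microsoft.ContainerInstance/containerGroups`), with per-container `cpu` and `memoryInGB` requests and a public IP carrying a DNS name label. Field names follow the Azure Resource Manager schema as we understand it, so verify them against the ACI reference before use; the group name, image, and DNS label are hypothetical, and fields such as `location` and `apiVersion` are omitted.

```python
import json


def container_group(name: str, image: str, cpu: float, memory_gb: float,
                    dns_label: str, port: int = 80) -> dict:
    """Sketch of a single-container ACI container group exposed to the internet."""
    return {
        "name": name,
        "type": "Microsoft.ContainerInstance/containerGroups",
        "properties": {
            "osType": "Linux",
            "containers": [{
                "name": name,
                "properties": {
                    "image": image,
                    # CPU cores and memory reserved for this container.
                    "resources": {"requests": {"cpu": cpu, "memoryInGB": memory_gb}},
                    "ports": [{"port": port}],
                },
            }],
            # Public IP plus a DNS name label exposes the group directly.
            "ipAddress": {
                "type": "Public",
                "ports": [{"protocol": "TCP", "port": port}],
                "dnsNameLabel": dns_label,
            },
        },
    }


group = container_group("batch-transcoder", "myregistry.azurecr.io/transcoder:1.0",
                        cpu=2, memory_gb=4.0, dns_label="transcoder-demo")
print(json.dumps(group, indent=2))
```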

Pros

- Optimized to work seamlessly with Azure environment

- Operating isolated containers does not need orchestration

- The capability of running event-driven applications

- Direct deployment of instances on the internet

- Interactive shell to execute commands on live app

Cons

- Lack of support for many open-source tools and integrations of services

- Optimized and designed only for Azure environment and does not work seamlessly on other platforms

When to use ACI?

ACI is an ideal solution if you want to develop an application with extensive data processing. You can also choose ACI when your application includes batch jobs such as media transcoding.

KaaS as an alternative to Kubernetes

Kubernetes provides pods with all the resources you need to keep containers running. However, pods have one problem: they are not permanent! So, how do you ensure backend availability for your apps? This is where a Kubernetes-as-a-Service (KaaS) platform can help, with simple endpoint APIs that keep the app pointed at the right pods as they change.
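Under the hood, that stable endpoint is a Kubernetes Service: it selects pods by label, and its endpoint list tracks only the ready pods that match. The sketch below is a simplified, hypothetical model of that matching (pod names and IPs are invented) to show why a replaced pod is picked up automatically.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def endpoints_for(selector: dict, pods: list) -> list:
    """IPs of ready pods whose labels match every key/value pair in the selector."""
    return [
        p.ip
        for p in pods
        if p.ready and all(p.labels.get(k) == v for k, v in selector.items())
    ]


selector = {"app": "backend"}
pods = [
    Pod("backend-a", "10.0.0.11", {"app": "backend"}),
    Pod("backend-b", "10.0.0.12", {"app": "backend"}),
    Pod("frontend-a", "10.0.0.21", {"app": "frontend"}),
]
print(endpoints_for(selector, pods))  # ['10.0.0.11', '10.0.0.12']

# backend-b crashes and is replaced by a new pod with a new IP but the same labels.
pods[1] = Pod("backend-c", "10.0.0.13", {"app": "backend"})
print(endpoints_for(selector, pods))  # ['10.0.0.11', '10.0.0.13']
```

Clients keep calling the Service’s stable address while the endpoint list changes underneath it.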

Some of the best KaaS-based services you can choose are:

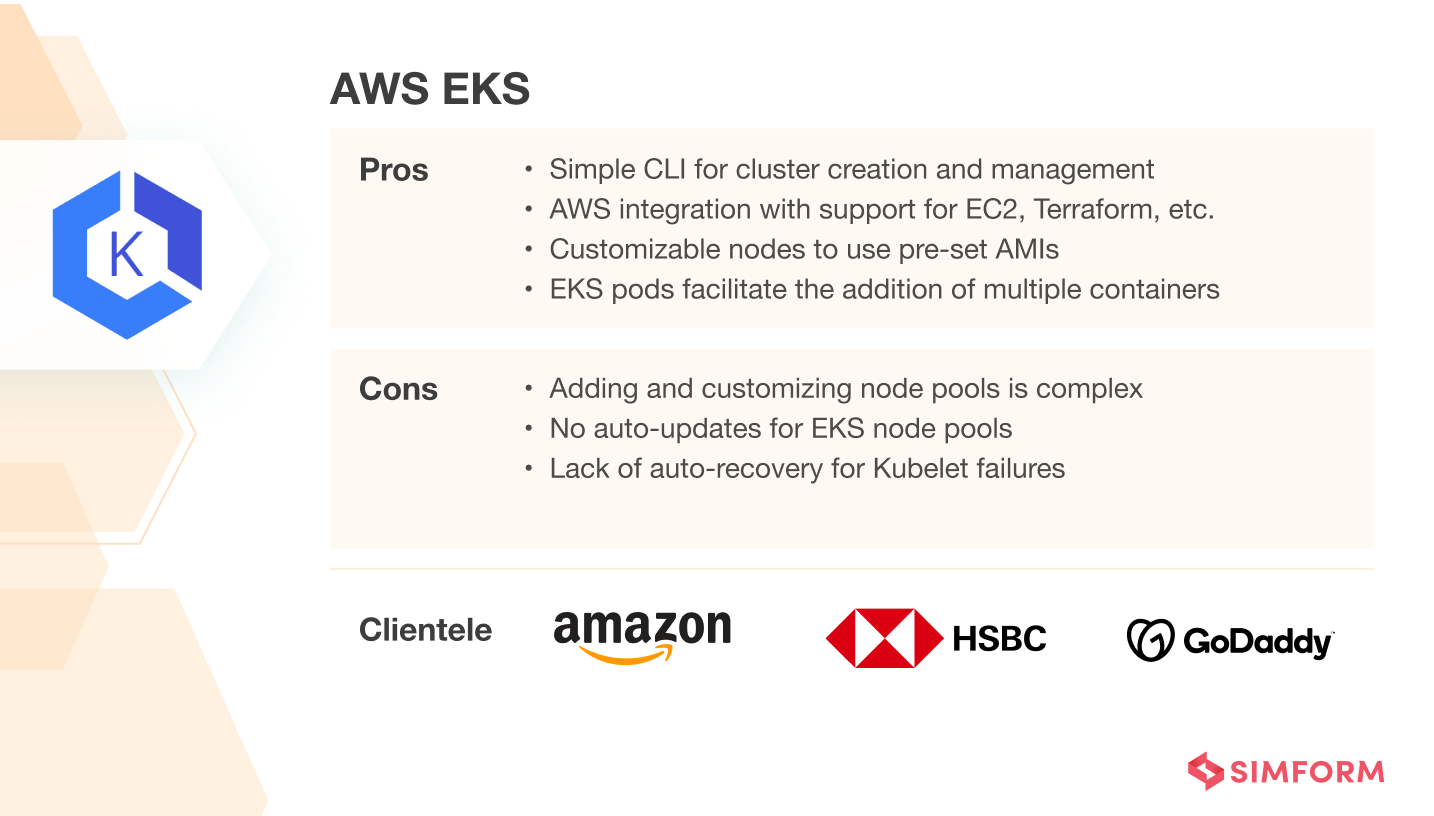

AWS EKS

AWS Elastic Kubernetes Service (EKS) allows you to run Kubernetes on AWS. It provides managed Kubernetes services while staying compatible with open-source Kubernetes. In addition, EKS enables you to manage Kubernetes clusters across multiple environments.

AWS EKS provides high availability through pre-built networking and security integrations across multiple availability zones. It also powers machine learning use cases by leveraging Amazon EC2 GPU instances: you can deploy training and inference workloads with Kubeflow on EKS.

AWS EKS Anywhere extends EKS to your local data centers. Further, you can run Kubernetes clusters in any environment and manage them through the EKS dashboard in the AWS Console.

Pros

- Simple CLI called eksctl for creation and management of Kubernetes clusters.

- Integration with AWS services like EC2 and with tools like Terraform

- Customizable nodes from EKS allow you to use custom machine images or pre-set AMIs.

- EKS pods can run multiple containers that share resources

Cons

- Adding and customizing node pools is complex

- No auto-updates for EKS node pools

- Lack of auto-recovery for Kubelet failures

When to use AWS EKS?

AWS EKS simplifies provisioning and managing Kubernetes clusters. So, if you want the control plane (master nodes) managed for you and want to avoid manual deployments, AWS EKS is the right choice.
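As a sketch of cluster provisioning with eksctl, the function below builds a minimal `ClusterConfig` with one managed node group. The cluster name, region, instance type, and sizes are hypothetical. Because JSON is, for practical purposes, valid YAML, saving the output to a file and running `eksctl create cluster -f <file>` should work, though most teams write this config in YAML directly.

```python
import json


def eksctl_cluster_config(name: str, region: str, instance_type: str, nodes: int) -> dict:
    """Minimal eksctl ClusterConfig with a single managed node group."""
    return {
        "apiVersion": "eksctl.io/v1alpha5",
        "kind": "ClusterConfig",
        "metadata": {"name": name, "region": region},
        "managedNodeGroups": [
            {
                "name": f"{name}-ng-1",
                "instanceType": instance_type,
                "desiredCapacity": nodes,  # nodes to start with
                "minSize": 1,
                "maxSize": nodes * 2,  # upper bound for the node group's size
            }
        ],
    }


print(json.dumps(eksctl_cluster_config("demo", "us-east-1", "m5.large", 2), indent=2))
```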

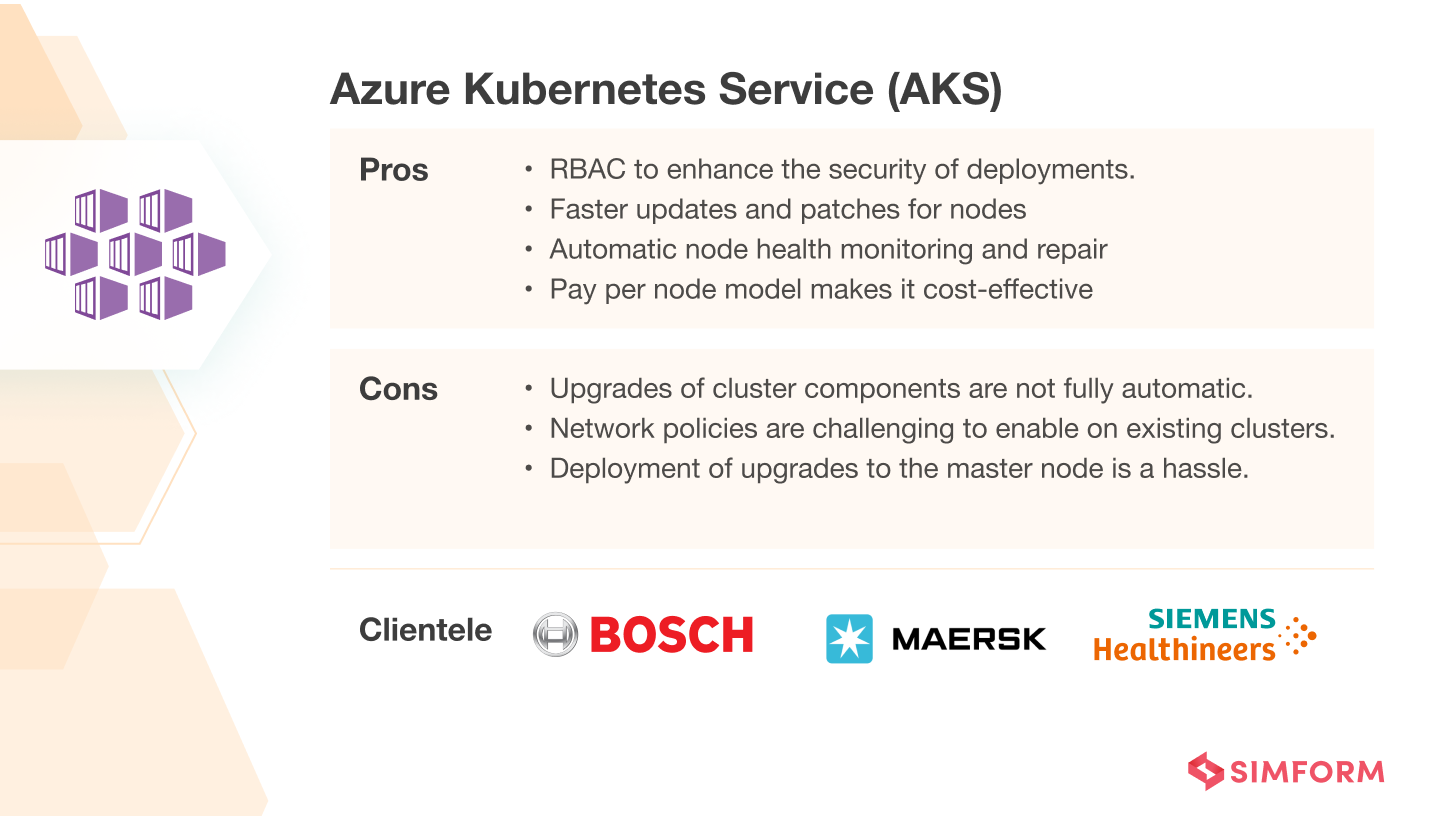

Azure Kubernetes Service (AKS)

Azure Kubernetes Service is another alternative to Kubernetes, which provides managed services. It allows you to deploy and manage containerized applications. In addition, AKS offers fully managed Kubernetes services and serverless capabilities.

Further, it provides event-driven auto-scaling and infrastructure management. AKS also enables elastic provisioning of the capacity. The Kubernetes alternative also comes with comprehensive authorization and authentication features.

It provides role-based access control (RBAC) for all your Kubernetes deployments, offering better security.
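AKS’s event-driven autoscaling is typically built on KEDA (Kubernetes-based Event-Driven Autoscaling), which is configured through a `ScaledObject` resource. The sketch below scales a hypothetical `order-processor` Deployment on the length of an Azure Storage queue; the names and thresholds are invented, and the authentication settings the `azure-queue` trigger needs in practice are deliberately left out.

```python
import json


def scaled_object(deployment: str, queue_name: str, target_length: int,
                  max_replicas: int = 20) -> dict:
    """KEDA ScaledObject that scales a Deployment on Azure Storage queue length."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": f"{deployment}-scaler"},
        "spec": {
            "scaleTargetRef": {"name": deployment},  # the Deployment to scale
            "minReplicaCount": 0,  # KEDA can scale all the way down to zero
            "maxReplicaCount": max_replicas,
            "triggers": [{
                "type": "azure-queue",
                # KEDA trigger metadata values are strings.
                # Authentication (e.g. a connection setting) is omitted here.
                "metadata": {"queueName": queue_name, "queueLength": str(target_length)},
            }],
        },
    }


print(json.dumps(scaled_object("order-processor", "orders", 5), indent=2))
```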

Pros

- Enhanced scalability, powered by Kubernetes-based Event-Driven Autoscaling (KEDA)

- Kubernetes RBAC enhances the security of deployments.

- Faster updates and patches for nodes

- Automatic node health monitoring and repair

- Pay per node model makes it cost-effective

- The control plane is automatically configured when a cluster is created

Cons

- Upgrades of cluster components are not fully automatic.

- Network policies are hard to enable on existing clusters.

- Deployment of upgrades to the master node is a hassle

When to use AKS?

AKS is the best option for businesses with auto-scaling needs. It allows you to use virtual nodes backed by Azure Container Instances (ACI), which can start within seconds and optimize resources for deployments.

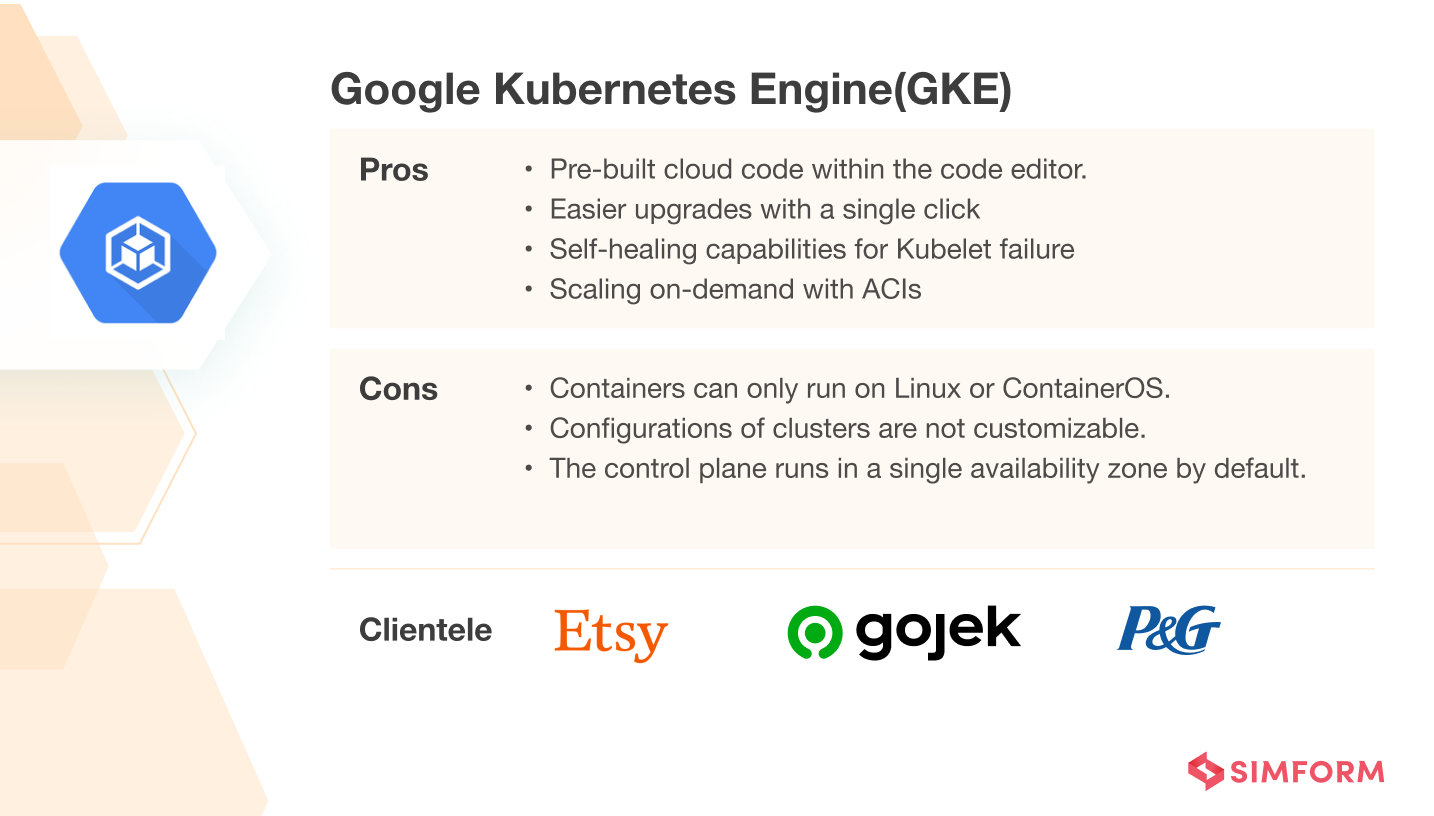

Google Kubernetes Engine (GKE)

GKE is an orchestration and management service for Kubernetes clusters. It allows you to run, manage, and maintain Kubernetes clusters on Google Cloud. In addition, GKE enables automatic upgrades for the control plane and worker nodes.

GKE also detects unhealthy nodes and repairs them automatically, and it can automate testing of newer versions. With encryption of data at rest, GKE improves the security of your nodes. It also supports a serverless mode of operation (GKE Autopilot), in which Google manages the nodes, so you can scale at the granularity of individual pods.

Pros

- Cloud Code integration lets you deploy, manage, and debug clusters from your code editor.

- Easier upgrades for all your nodes and clusters with a single click

- Self-healing capabilities when Kubelet fails

- On-demand scaling without managing nodes in Autopilot mode

- Higher security due to encryption of data at rest

Cons

- OS restrictions, as containers can run only on Linux or Container-Optimized OS

- Configurations of clusters like Kube-DNS and IP-masq-agent are not customizable.

- The control plane runs in a single availability zone by default, and you can’t change it.

When to use GKE?

Kubernetes offers container orchestration, but you have to maintain other components and services running on it. GKE provides these services out of the box, reducing the need to maintain them. Especially if you are looking to leverage the Google Cloud Platform for container deployments, GKE fits the bill.

PaaS as an alternative to Kubernetes

Platform as a Service (PaaS) providers like Rancher and OpenShift provide a complete computing platform with Kubernetes at its core. However, PaaS is a broader term and covers more than containerization platforms. It is like renting a set of tools, which here includes development tools, infrastructure, and operating systems.

Rancher

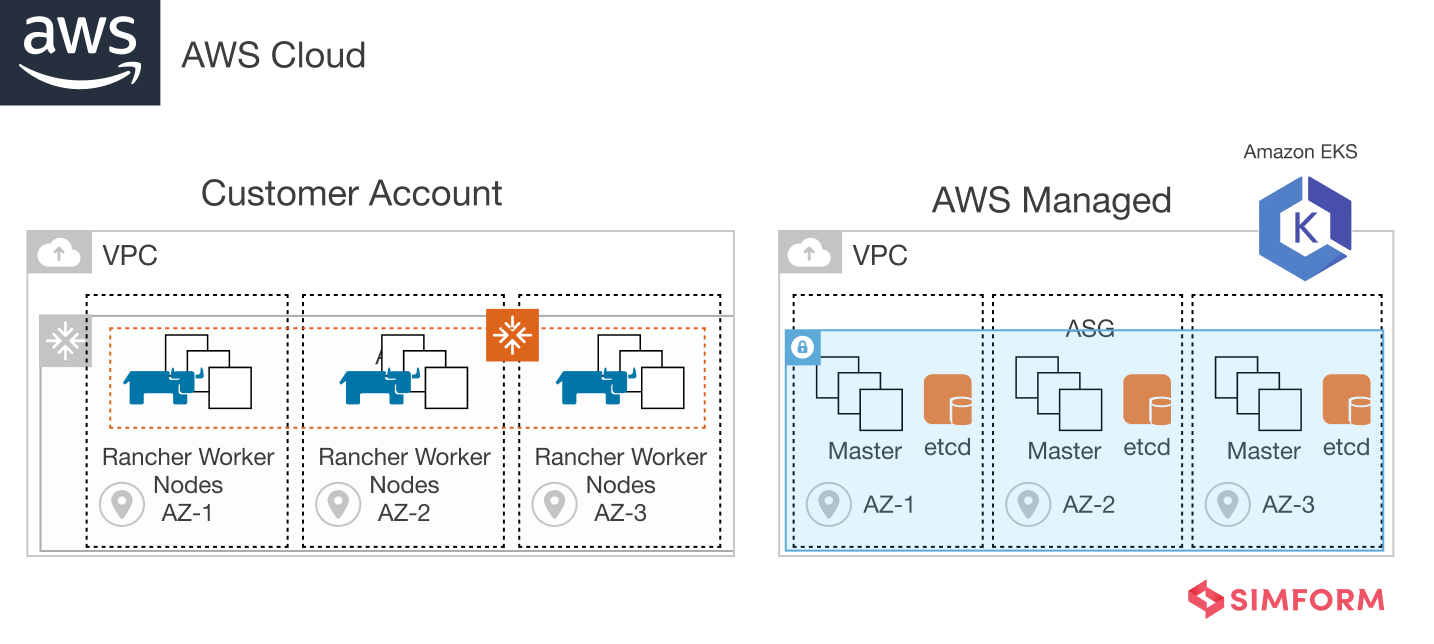

Rancher is a platform that simplifies container and cluster management. It handles cluster provisioning and, through integrated tools like Prometheus, monitoring of workloads, while also enhancing security.

Furthermore, you can use Rancher with Amazon EKS for full-cycle management of clusters. Rancher enforces RBAC on Amazon EKS and other cluster environments by integrating LDAP and SAML-based authentication. With this combination, you can run clusters on a managed platform and monitor their performance for better results.

For example, Municipal Property Assessment Corporation saw an 85% reduction in deployment time and a 40% reduction in cloud usage with this approach. If you don’t want to use EKS, Rancher comes with RKE (Rancher Kubernetes Engine), which simplifies the installation, running, and maintenance of Kubernetes across environments.

Pros

- Integration and interoperability with major cloud service providers

- Faster deployments with K3s, a lightweight Kubernetes distribution

- Centralized user authentication and RBAC out of the box

- Support for tools like Grafana and Prometheus

- Integration support for Nginx controller and Istio dashboard

Cons

- Does not support Windows and macOS; only compatible with Ubuntu, CentOS, and RedHat.

- No support for the RKT engine, Swarm and Mesos

- A smaller ecosystem of components and lack of maturity for the platform

When to use Rancher?

If you have multiple clusters spread across many geographical locations, Rancher is the right choice. It allows you to maintain high availability across multiple clusters deployed in different zones.

Red Hat OpenShift

Red Hat OpenShift is an enterprise-grade container orchestration platform: a suite of container orchestration tools offered by Red Hat. These tools cover different requirements like load balancing, networking, and security. In addition, OpenShift lets you use Kubernetes with all these tools built into the suite.

So, you don’t need to add external services for such activities. OpenShift is designed explicitly for cloud-based container orchestration. It offers the functionality of both Platform as a Service (PaaS) and Container as a Service (CaaS).

As a CaaS, OpenShift allows you to use pre-existing container images for deployments. However, the images need to meet the requirements of the Open Container Initiative (OCI). Through its service mesh, OpenShift also allows you to create deployment routes, simulate failures, and establish circuit breakers.
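To illustrate what a circuit breaker does, here is a minimal, framework-agnostic sketch of the pattern in Python. It is not OpenShift Service Mesh’s API (there, circuit breaking is configured declaratively on the mesh rather than in application code), and the threshold and timeout values are hypothetical.

```python
import time


class CircuitBreaker:
    """Open after N consecutive failures; after a cooldown, allow one trial call."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # injectable so the behavior can be tested
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Fail fast instead of hammering a service that is down.
                raise RuntimeError("circuit open: failing fast")
            # Cooldown elapsed: half-open, so one more failure reopens the circuit.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success closes the circuit fully
        return result
```

Injecting the clock keeps the sketch deterministic in tests; in production, the default monotonic clock applies.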

Pros

- Pre-built tools on networking, security, and load balancing

- Source-to-Image (S2I) builder to convert your source code into container images

- Service mesh allows you to route deployments

- On-demand serverless capabilities

- Convention over configuration, command-line tool for ease of use

Cons

- Migrations between cluster versions can be risky due to compatibility issues.

- Monitoring in real-time is difficult

- Deployment changes can be tricky to implement

When to use OpenShift?

If you are looking for a Kubernetes management platform with all the tools pre-built, OpenShift is an ideal solution. In addition, enterprises that want to reduce third-party dependencies can use OpenShift for their deployments.

Lightweight orchestrators as an alternative to Kubernetes

Kubernetes is one of the most significant container orchestration services, but not the only one. Orchestration platforms like Nomad and Docker Swarm are lightweight and come with their own distinctive features.

Nomad

Nomad is another container orchestration platform that allows app deployments at scale. It is easy to install and cloud-agnostic. In addition, Nomad natively handles multi-cluster and multi-region deployments.

Nomad comes as a precompiled binary that you can run on any local machine. HashiCorp developed Nomad to run applications with high availability, and it natively manages applications and nodes for high performance.

In Nomad, the smallest unit of work is a task, and tasks are grouped into task groups, which are roughly equivalent to pods in Kubernetes. Each task can get its own IP, accessible through sidecar proxies. Nomad also provides functionality similar to Ingress in Kubernetes, which enables quick configuration adjustments.
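To show that hierarchy, here is a minimal job in the JSON form accepted by Nomad’s HTTP API (job, then task group, then task), built as a Python dictionary. The job name, datacenter, image, and resource numbers are hypothetical; most teams would write the same job in HCL.

```python
import json


def nomad_job(name: str, image: str, count: int) -> dict:
    """Minimal Nomad service job: one task group running `count` copies of a Docker task."""
    return {
        "Job": {
            "ID": name,
            "Name": name,
            "Type": "service",
            "Datacenters": ["dc1"],
            "TaskGroups": [
                {
                    "Name": f"{name}-group",  # scheduled together, like a Kubernetes pod
                    "Count": count,           # number of instances of this group
                    "Tasks": [
                        {
                            "Name": name,     # the smallest unit of work
                            "Driver": "docker",
                            "Config": {"image": image},
                            "Resources": {"CPU": 100, "MemoryMB": 128},
                        }
                    ],
                }
            ],
        }
    }


print(json.dumps(nomad_job("web", "nginx:1.25", 3), indent=2))
```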

Pros

- Reduced configuration management required for the app deployment

- Compatible with the existing networks through sidecar proxies

- Rapid adjustments in resource allocation and configurations

- Precompiled binary makes Nomad easy to use

Cons

- Lacks maturity as an orchestration platform

- Vendor lock-in due to integration with the HashiCorp suite

- Lacks community support due to infancy in the orchestration market

When to use Nomad?

If you are looking for an alternative to Kubernetes with high availability, flexibility, and scalability, Nomad is the right choice. What makes it more attractive than the others is its ease of installation and quick startup.

Docker Swarm

Docker Swarm is an open-source container orchestration platform built by Docker. It uses “Swarm” mode to run Docker containers, which makes each Docker Engine aware that it is working with other instances. The best thing about Docker Swarm is that you can initialize it with just a few commands.

The Docker command line initializes, enables, and manages Docker Swarm. It allows you to manage the container lifecycle and achieve the desired state. Like Kubernetes, Docker Swarm groups multiple hosts into a cluster to distribute load.

This ease of initialization is what makes Docker Swarm a worthy alternative to Kubernetes. A Swarm cluster runs the Docker Engine on its nodes, which take one of two roles: manager nodes orchestrate the cluster, and worker nodes execute the tasks.
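Manager nodes also decide where each task runs. Swarm’s default strategy filters out nodes that cannot run a task and then spreads tasks across the remaining nodes. The sketch below is a simplified, hypothetical model of that filter-then-spread idea (node names, memory figures, and labels are invented), not Swarm’s actual scheduler code.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_memory_mb: int
    availability: str = "active"  # Swarm nodes can also be "pause" or "drain"
    labels: dict = field(default_factory=dict)
    running_tasks: int = 0


def schedule(nodes: list, memory_mb: int, constraints: dict = None):
    """Filter nodes that can run the task, then pick the one with the fewest tasks."""
    constraints = constraints or {}
    candidates = [
        n for n in nodes
        if n.availability == "active"
        and n.free_memory_mb >= memory_mb
        and all(n.labels.get(k) == v for k, v in constraints.items())
    ]
    if not candidates:
        return None  # the task stays pending until a suitable node appears
    chosen = min(candidates, key=lambda n: n.running_tasks)  # spread
    chosen.running_tasks += 1
    chosen.free_memory_mb -= memory_mb
    return chosen.name


nodes = [
    Node("node-a", free_memory_mb=4096),
    Node("node-b", free_memory_mb=4096, labels={"disk": "ssd"}),
    Node("node-c", free_memory_mb=8192, availability="drain"),  # excluded: drained
]
print([schedule(nodes, 512) for _ in range(3)])  # ['node-a', 'node-b', 'node-a']
print(schedule(nodes, 512, constraints={"disk": "ssd"}))  # node-b
```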

Pros

- Docker base allows you to initialize the Swarm nodes with a couple of commands.

- REST-like API (Swarm APIs) enables seamless integration and interactions between services.

- Interoperable with Docker tools like Docker CLI and Docker Compose

- Ease of setup and running Docker environments

- Filtration & scheduling system to optimize node selection and cluster deployments

Cons

- Lack of automation as most of the resource provisioning capabilities are manual.

- Less functionality compared to Kubernetes.

- Has limitations in fault tolerance and high availability

When to use Docker Swarm?

Docker Swarm is an ideal alternative to Kubernetes if you already use the Docker platform. Another aspect is simplicity and a smooth learning curve. So, if you are new to the orchestration platforms, Docker Swarm is easy to set up and use.

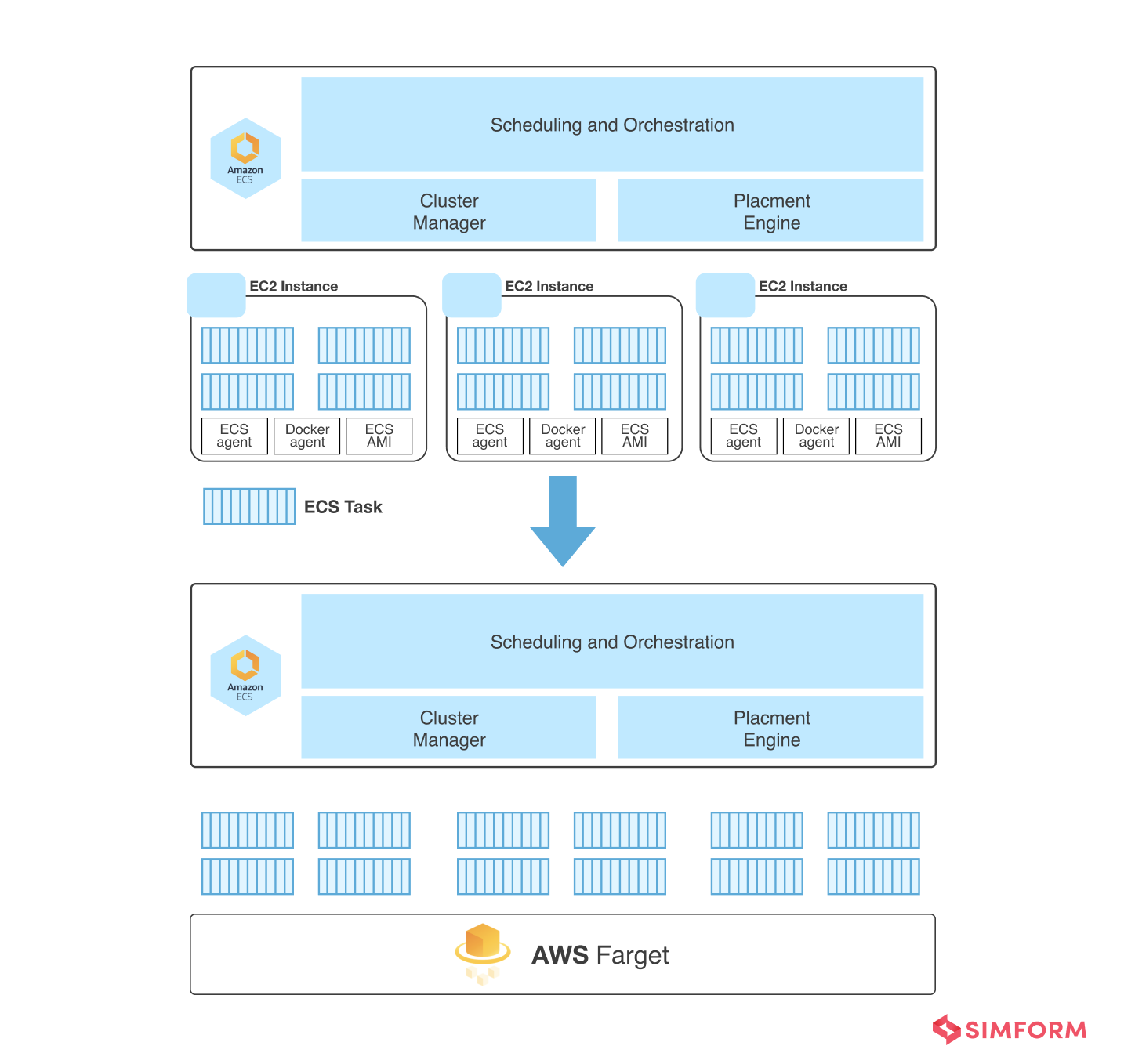

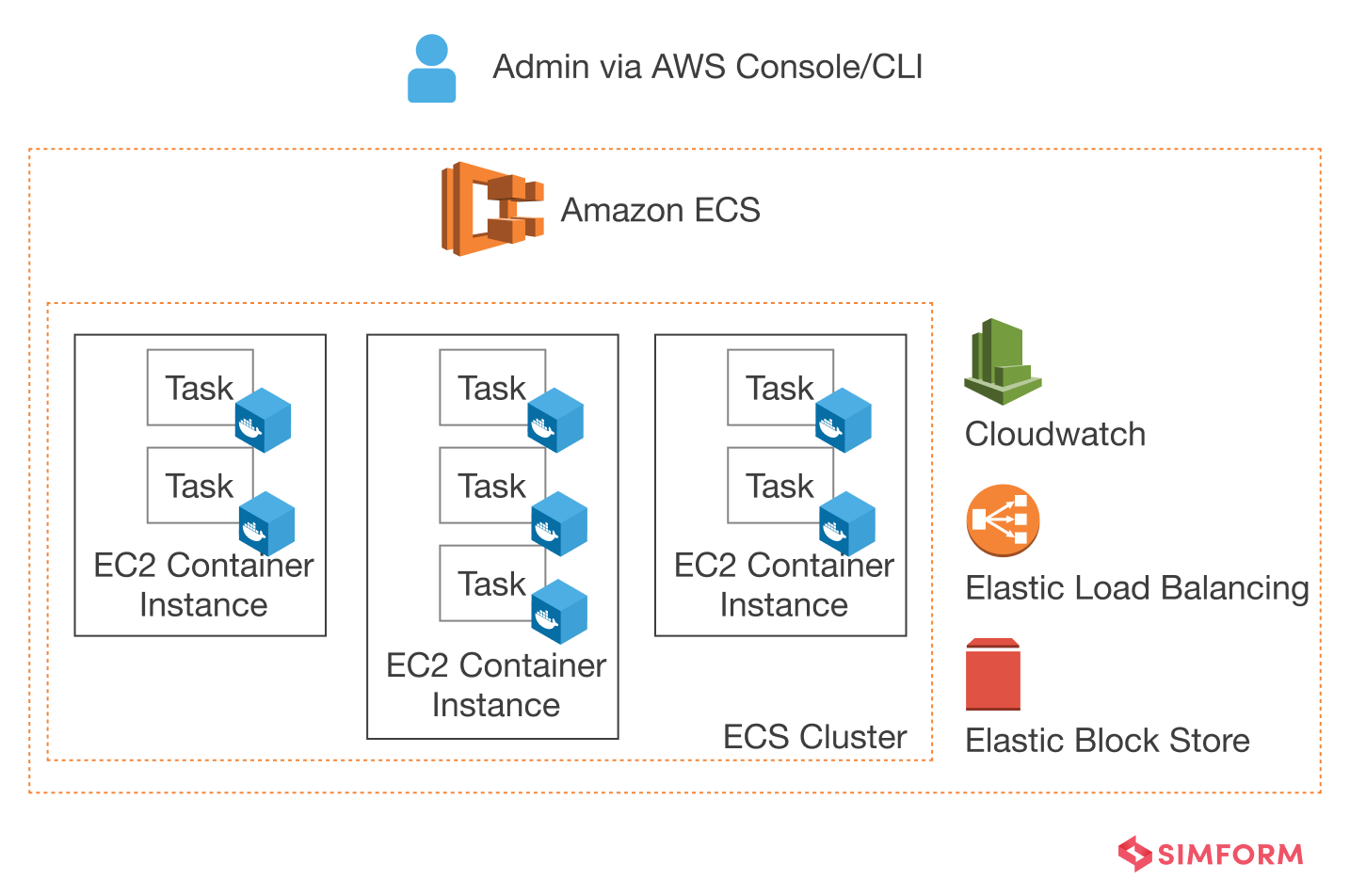

Amazon ECS

Amazon ECS provides management services to run containers. For example, it supports auto-scaling for use cases like high traffic during flash sales for eCommerce companies. ECS is also a good option for full-scale services that need several containers.

You need to create a Docker image and upload it to Amazon ECR (Elastic Container Registry). The best aspect of ECR is the Docker image history: it keeps all the images related to an application, so you can roll back to an earlier version.

ECS allows you to run containerized apps on EC2 instances. Each ECS cluster runs tasks, which consist of one or more containers. Further, you can use different AWS services with ECS, such as:

- Elastic Load Balancer for routing traffic to containers

- Elastic Block Store for persistent block storage of tasks

- CloudWatch collects metrics and logs and drives the scaling of ECS services up or down

- CloudTrail enables ECS API call logging

ECS allows you to write task definitions in JSON. A task definition specifies application parameters such as which container image to use, port mappings, and data volumes. ECS services then use these task definitions to run containers and auto-scale them.
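Here is a minimal task definition of that kind, built as a Python dictionary and printed as JSON. It targets Fargate, which requires the `awsvpc` network mode and task-level CPU and memory; the family name and ECR image URI are hypothetical. The resulting file could be registered with `aws ecs register-task-definition --cli-input-json file://taskdef.json`.

```python
import json


def task_definition(family: str, image: str, container_port: int) -> dict:
    """Minimal Fargate-compatible ECS task definition with one container."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # required for Fargate tasks
        "cpu": "256",     # task-level CPU units (0.25 vCPU), given as a string
        "memory": "512",  # task-level memory in MiB, given as a string
        "containerDefinitions": [{
            "name": family,
            "image": image,
            "essential": True,  # if this container stops, the task stops
            "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
        }],
    }


td = task_definition("web", "123456789012.dkr.ecr.us-east-1.amazonaws.com/web:1.0", 8080)
print(json.dumps(td, indent=2))
```

Rolling back then means pointing the ECS service at an earlier task definition revision, or at an older image tag kept in ECR.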

Pros

- Better integration with the AWS ecosystem

- Provides control of the container deployments

- Autoscaling capabilities based on task definitions

- Easy rollbacks with the ECR

Cons

- Steeper learning curve for ECS

- Vendor lock-in due to tight coupling with the Amazon ecosystem

When to use ECS?

If you are looking for an alternative to Kubernetes with better integration in the AWS ecosystem, ECS is an ideal solution. It is also helpful for eCommerce organizations looking to auto-scale during peak traffic scenarios.

Conclusion

We have shed some light on the alternatives to Kubernetes. The right choice becomes clear when you weigh your challenges against how each alternative addresses them!

Simform has been at the forefront of giving clients business advantages through containerization and decoupled architectures. For example, Swift Shopper was looking for a retail solution for offline stores, so we used agile practices to plan and provision development tasks.

Furthermore, our team hosted microservices on EC2 instances and used a shared-nothing distributed architecture. This enabled Swift Shopper’s clients to develop white-label POS apps quickly. Such apps can now serve 10 million data points across 200,000 products.