Real World Programming with ChatGPT

Writing Prompts Isn’t As Simple As It Looks

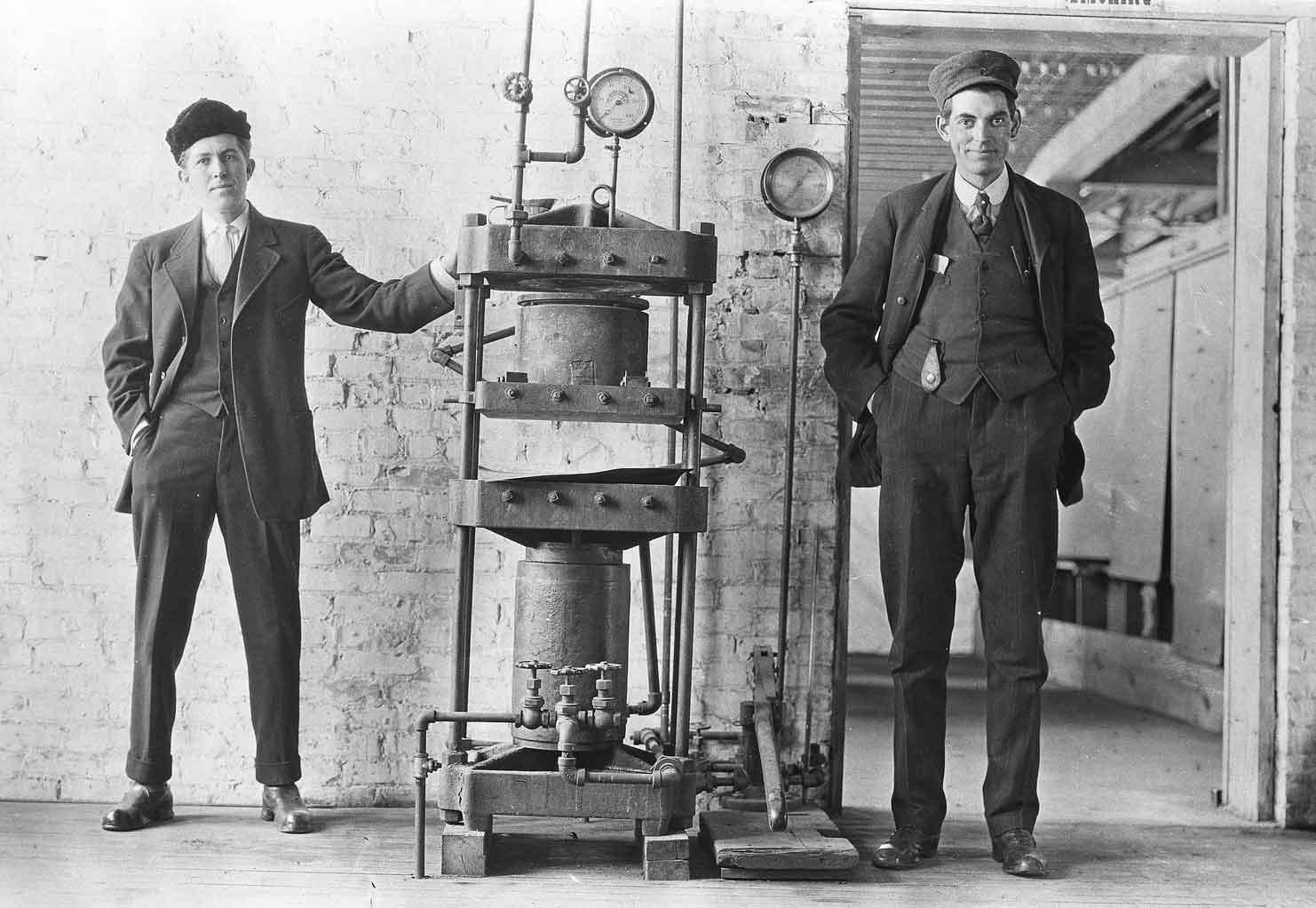

C. Vulcanized Products Co, Muskegon, Michigan, 1911 (source: Community Archives via Flickr)

This post is a brief commentary on Martin Fowler’s post, An Example of LLM Prompting for Programming. If all I do is get you to read that post, I’ve done my job. So go ahead and read it, then come back here if you want.

There’s a lot of excitement about how the GPT models and their successors will change programming. That excitement is merited. But what’s also clear is that the process of programming doesn’t become “ChatGPT, please build me an enterprise application to sell shoes.” I, along with many others, have gotten ChatGPT to write small programs, sometimes correctly and sometimes not, but until now I hadn’t seen anyone demonstrate what it takes to do professional development with ChatGPT.

In this post, Fowler describes the process Xu Hao (Thoughtworks’ Head of Technology for China) used to build part of an enterprise application with ChatGPT. At a glance, it’s clear that the prompts Xu Hao uses to generate working code are very long and complex. Writing these prompts requires significant expertise, both in the use of ChatGPT and in software development. While I didn’t count lines, I would guess that the prompts, taken together, are longer than the code ChatGPT created.

First, note the overall strategy Xu Hao uses to write this code. He is following a strategy called “Knowledge Generation.” His first prompt is very long: it describes the architecture, goals, and design guidelines, and it explicitly tells ChatGPT not to generate any code. Instead, he asks for a plan of action, a series of steps that will accomplish the goal. After getting ChatGPT to refine the task list, he starts asking it for code one step at a time, making sure each step is completed correctly before proceeding.

Many of the prompts are about testing: ChatGPT is instructed to generate tests for each function it generates. At least in theory, test-driven development (TDD) is widely practiced among professional programmers. However, most people I’ve talked to agree that it gets more lip service than actual practice. Tests tend to be very simple and rarely get to the “hard stuff”: corner cases, error conditions, and the like. This is understandable, but we need to be clear: if AI systems are going to write code, that code must be tested exhaustively. (If AI systems write the tests, do those tests themselves need to be tested? I won’t attempt to answer that question.) Literally everyone I know who has used Copilot, ChatGPT, or some other tool to generate code agrees that these tools demand attention to testing. Some errors are easy to detect; ChatGPT often calls “library functions” that don’t exist. But it can also make much more subtle errors, generating incorrect code that looks right unless it is examined and tested carefully.
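To make the difference between simple tests and tests that reach the “hard stuff” concrete, here is a small, hypothetical TypeScript sketch; it is not taken from Fowler’s article or from Xu Hao’s prompts. `medianNaive` is the kind of plausible-looking helper a code generator might produce: it compiles and passes the obvious test, but JavaScript’s default `sort()` compares elements as strings, and the function ignores even-length and empty inputs. The function names and test inputs are illustrative assumptions.

```typescript
// Hypothetical example: a median helper of the kind a code generator might
// plausibly produce. It compiles and passes the obvious test.
function medianNaive(xs: number[]): number {
  const sorted = xs.sort(); // bug: default sort compares values as strings (and mutates xs)
  return sorted[Math.floor(sorted.length / 2)]; // bug: ignores even lengths and empty input
}

// A corrected version that handles the corner cases explicitly.
function median(xs: readonly number[]): number {
  if (xs.length === 0) {
    throw new RangeError("median of an empty array is undefined");
  }
  const sorted = [...xs].sort((a, b) => a - b); // numeric sort on a copy
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0
    ? (sorted[mid - 1] + sorted[mid]) / 2 // even length: average the two middle values
    : sorted[mid];
}

// The "happy path" test: both versions pass.
console.log(medianNaive([1, 2, 3]), median([1, 2, 3])); // 2 2

// Corner cases: only the corrected version gets these right.
console.log(medianNaive([10, 9, 2]), median([10, 9, 2])); // 2 9
console.log(medianNaive([1, 2, 3, 4]), median([1, 2, 3, 4])); // 3 2.5
```

A test suite containing only the first check would pass `medianNaive`; it takes the multi-digit and even-length inputs, plus a check on the empty array, to show that the code merely looks right.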

It is impossible to read Fowler’s article and conclude that writing industrial-strength software with ChatGPT is simple. This particular problem required significant expertise: a clear understanding of what Xu Hao wanted to accomplish and how he wanted to accomplish it. Some of this understanding is architectural; some of it is about the big picture (the context in which the software will be used); and some of it is anticipating the little things you always discover when you’re writing a program, the things the specification should have said but didn’t. The prompts describe the technology stack in some detail. They also describe how the components should be implemented, the architectural pattern to use, the different types of models that are needed, and the tests that ChatGPT must write. Xu Hao is clearly programming, but it’s programming of a different sort. It’s related to what we’ve understood as “programming” since the 1950s, but it doesn’t use a formal programming language like C++ or JavaScript. Instead, there’s much more emphasis on architecture, on understanding the system as a whole, and on testing. These aren’t new skills, but their relative importance is shifting.

He also has to work within the limitations of ChatGPT, which (at least right now) gives him one significant handicap. You can’t assume that information given to ChatGPT won’t leak out to other users, so anyone programming with ChatGPT has to be careful not to include any proprietary information in their prompts.

Was developing with ChatGPT faster than writing the JavaScript by hand? Possibly, even probably. (The post doesn’t tell us how long it took.) Did it allow Xu Hao to develop this code without spending time looking up details of library functions and the like? Almost certainly. But I think (again, a guess) that we’re looking at a 25 to 50% reduction in the time it would take to generate the code, not 90%. (The article doesn’t say how many attempts Xu Hao needed to get prompts that would generate working code.) So: ChatGPT proves to be a useful tool, and no doubt a tool that will get better over time. It will make developers who learn how to use it well more effective; 25 to 50% is nothing to sneeze at. But using ChatGPT effectively is definitely a learned skill. It isn’t going to take away anyone’s job. It may be a threat to people whose jobs are about performing a single task repetitively, but that isn’t (and has never been) the way programming works. Programming is about applying skills to solve problems. If a job needs to be done repetitively, you use your skills to write a script and automate the solution. ChatGPT is just another step in this direction: it automates looking up documentation and asking questions on Stack Overflow. It will quickly become another essential tool that junior programmers will need to learn and understand. (I wouldn’t be surprised if it’s already being taught in “boot camps.”)

If ChatGPT represents a threat to programming as we currently conceive it, it’s this: after developing a significant application with ChatGPT, what do you have? A body of source code that wasn’t written by a human, and that nobody understands in depth. For all practical purposes, it’s “legacy code,” even if it’s only a few minutes old. It’s similar to software that was written 10 or 20 or 30 years ago, by a team whose members no longer work at the company, but that needs to be maintained, extended, and (still) debugged. Almost everyone prefers greenfield projects to software maintenance. What if the work of a programmer shifts even more strongly toward maintenance? No doubt ChatGPT and its successors will eventually give us better tools for working with legacy code, regardless of its origin. It’s already surprisingly good at explaining code, and it’s easy to imagine extensions that would allow it to explore a large code base, possibly even using this information to help with debugging. I’m sure those tools will be built, but they don’t exist yet. When they do exist, they will certainly result in further shifts in the skills programmers use to develop software.

ChatGPT, Copilot, and other tools are changing the way we develop software. But don’t make the mistake of thinking that software development will go away. Programming with ChatGPT as an assistant may be easier, but it isn’t simple; it requires a thorough understanding of the goals, the context, the system’s architecture, and (above all) testing. As Simon Willison has said, “These are tools for thinking, not replacements for thinking.”