Dynatrace Perform 2024 Guide: Deriving business value from AI data analysis

Dynatrace

JANUARY 23, 2024

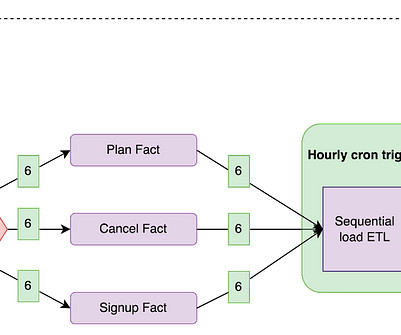

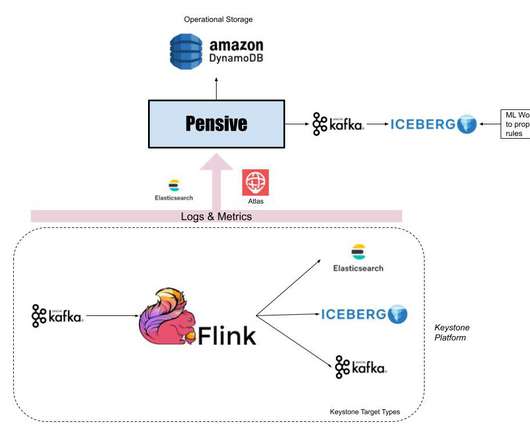

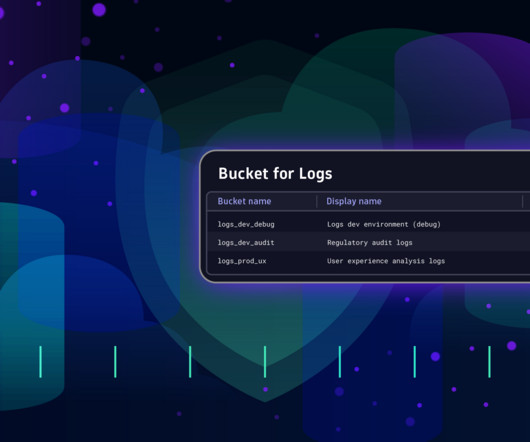

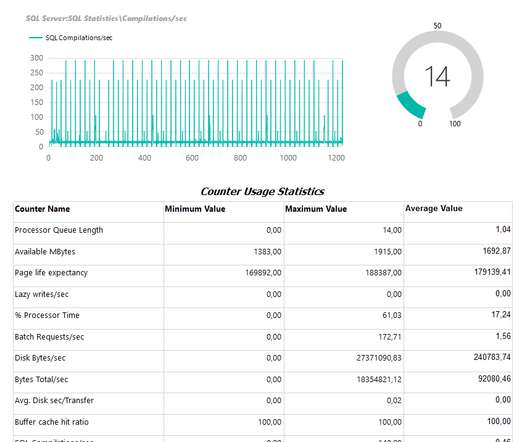

AI data analysis can help development teams release software faster and at higher quality. So how can organizations ensure data quality, reliability, and freshness for AI-driven answers and insights? And how can they take advantage of AI without incurring skyrocketing costs to store, manage, and query data?