As global warming increases, growing IT carbon footprints make energy-efficient, carbon-optimized computing a top priority for many organizations. By some measures, cloud computing has a larger carbon footprint than the airline industry.

Growing awareness and increasing regulatory scrutiny have propelled carbon emissions data into the public consciousness. How will your organization respond to this global challenge? How can you reduce the carbon footprint of your hybrid cloud?

A structured approach

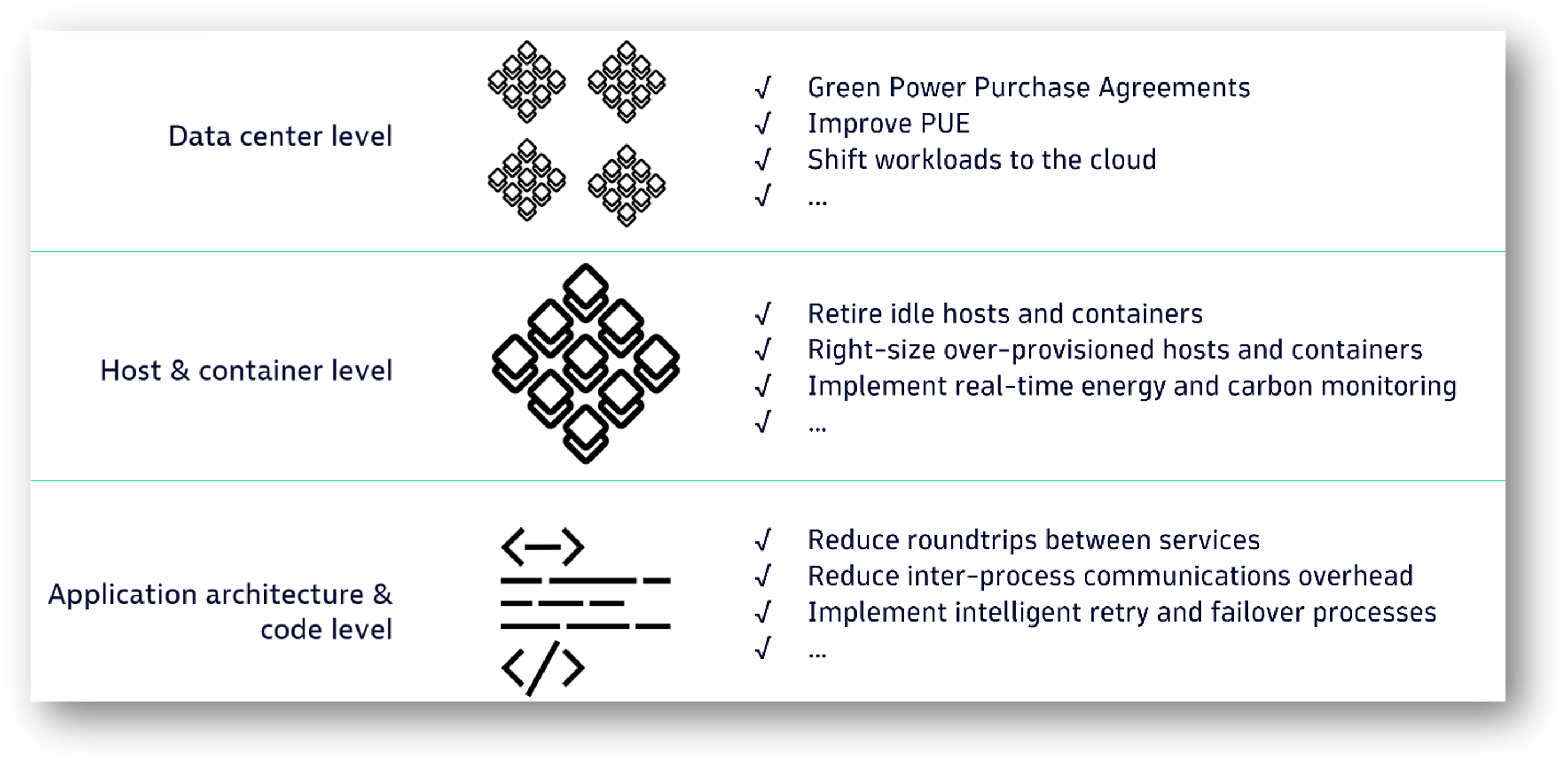

Reducing carbon emissions involves a combination of technology, practice, and planning. It helps to evaluate these on three levels: data center, host, and application architecture (plus code). Options at each level offer significant potential benefits, especially when complemented by practices that influence the design and purchase decisions made by IT leaders and individual contributors.

Level 1: Data centers

This is the starting point for most organizations. There are some big moves possible here that are easy to understand, though not necessarily easy to implement. If you’re running your own data center, you can start powering it with green energy purchased through your utility company. This is a rather simple move as it doesn’t directly impact your infrastructure, just your contract with your electricity provider.

The complication with this approach is that your energy bill will likely increase. Green energy prices follow the law of supply and demand: even though renewable energy is often cheaper to produce, demand exceeds supply.

Next, we consider possible energy savings in the data center. You might optimize your cooling system or move your data center to a colder region with reduced cooling demands. Most approaches focus on improving Power Usage Effectiveness (PUE), a data center energy-efficiency measure defined as total facility energy divided by the energy used by IT equipment. A PUE of 1.0 is the unattainable perfect score, meaning that all power is used for computing, and none is used for other purposes such as cooling or lighting. The average PUE for data centers is about 1.8; energy-efficient data centers, such as those run by cloud providers, achieve values closer to 1.2.
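To make those PUE figures more concrete, here is a minimal sketch of the arithmetic. It assumes a hypothetical IT load of 1,000 kWh (an illustrative number, not a measurement) and compares the non-computing overhead at the two PUE values mentioned above. The function names are made up for this example.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def overhead_kwh(it_energy_kwh: float, pue: float) -> float:
    """Energy spent on everything other than computing (cooling, lighting, ...)."""
    return facility_energy_kwh(it_energy_kwh, pue) - it_energy_kwh


IT_LOAD_KWH = 1000.0  # hypothetical IT load, chosen only for illustration

for label, pue in (("average data center", 1.8), ("efficient data center", 1.2)):
    total = facility_energy_kwh(IT_LOAD_KWH, pue)
    extra = overhead_kwh(IT_LOAD_KWH, pue)
    print(f"{label}: PUE {pue} -> {total:.0f} kWh total, {extra:.0f} kWh overhead")
```

Under these assumed numbers, the same computing work carries about 800 kWh of overhead at a PUE of 1.8 and about 200 kWh at a PUE of 1.2.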

Is the solution to just move all workloads to the cloud? Unfortunately, it’s not that simple. There might not be enough cloud capacity where you need it. Application architectures might not be conducive to rehosting. Data sovereignty regulations might constrain your hosting options. So, it’s time to consider the next level of optimization.

Level 2: Hosts and containers

You might run thousands of hosts and containers, many of which have been sized by prioritizing performance over energy consumption. How many of these have been over-provisioned? How many sit idle most of the time? Right-sizing and consolidating (or retiring) over-provisioned and idling hosts and containers represent two big opportunities for reducing energy consumption and carbon emissions. Of course, you need to balance these opportunities with the business goals of the applications served by these hosts. To illustrate how tricky this balancing act can be, here is an anecdote from our own internal effort to reduce our carbon footprint at Dynatrace:

Klaus: “Hey Thomas, we’ve identified this host as idling for the last month; nothing is happening on it. Can we shut it down?”

Thomas: “Not so fast, Klaus; this host is part of our Synthetic Monitoring node cluster. For failover and load SLA reasons, we require at least three nodes at every synthetic location. So you’ll have to look elsewhere for energy savings!”

Another anecdote comes from one of our customers. After identifying about 100 idle host instances to be shut down, they learned that these hosts were provisioned in anticipation of upscaling to support an upcoming major sales event.

These experiences illustrate a key point: Identifying instances to be right-sized or shut down is an easy—and good—first step, but you need to understand the business context to make informed decisions. And while these examples were resolved by just asking a few questions, in many cases, the answers are more elusive, requiring real-time and historical drill-downs into the processes and dependencies specific to each host.
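The idea of combining utilization data with business context can be sketched in a few lines. This is only an illustration: the `Host` class, the 5% idle threshold, and the tag names are all hypothetical, and a real decision would draw on much richer dependency and history data than a single average.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    avg_cpu_pct: float                      # average CPU utilization over the review window
    tags: set = field(default_factory=set)  # hypothetical business-context labels


IDLE_CPU_THRESHOLD_PCT = 5.0                             # assumed cutoff for "idle"
PROTECTED_TAGS = {"failover-reserve", "event-capacity"}  # hypothetical tag names


def triage(hosts):
    """Split idle hosts into shutdown candidates and hosts that need an owner's review."""
    candidates, needs_review = [], []
    for host in hosts:
        if host.avg_cpu_pct >= IDLE_CPU_THRESHOLD_PCT:
            continue  # busy enough; not part of this review
        if host.tags & PROTECTED_TAGS:
            needs_review.append(host.name)  # idle, but idle for a business reason
        else:
            candidates.append(host.name)
    return candidates, needs_review


hosts = [
    Host("synthetic-node-3", 1.2, {"failover-reserve"}),
    Host("batch-worker-7", 0.8),
    Host("web-frontend-1", 42.0),
]

candidates, needs_review = triage(hosts)
print("Shutdown candidates:", candidates)
print("Ask the owner first:", needs_review)
```

The point of the second list is that an idle host is a question for its owner, not an automatic shutdown.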

From here, it’s time to consider the next level of energy optimization: green coding.

Level 3: Green coding

The topic of carbon reduction in data centers was new to me when I began digging into it just two years ago. It quickly became a “back to the future” experience for me. Having started with data center capacity and host right-sizing, I soon realized that optimizing applications and their underlying source code was the responsibility of architects and engineers.

The Application Performance Management (APM) best practices we recommend to optimize user experience and application performance—driven initially by on-premises workloads and finite compute capacity—contribute to improved computational efficiency by reducing CPU cycles, optimizing inter-process communications, and lowering memory footprints. This computational efficiency also reduces energy consumption, which in turn reduces carbon emissions. Many of the same principles can be applied to green coding. A few examples:

- Reduce roundtrips between services (for example, by eliminating the N+1 query pattern).

- Reduce the volume of data requested from databases (for example, avoid requesting all rows and filtering in memory).

- Reduce inter-process communications overhead.

- Implement appropriate caching layers (for example, read-only cache for static data).

- Implement intelligent retry and failover processes.
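As an illustration of the first two items in this list, the sketch below contrasts an N+1 query pattern with in-memory filtering against a single query that joins and filters in the database. It uses Python's built-in `sqlite3` module with a made-up `customers`/`orders` schema. The table names, data, and function names are assumptions for this example only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 250.0), (3, 2, 75.0), (4, 3, 300.0);
""")


def large_orders_n_plus_one(min_total):
    """Anti-pattern: one query for customers, one more per customer, filter in memory."""
    queries = 1
    results = []
    customers = conn.execute("SELECT id, name FROM customers").fetchall()
    for customer_id, name in customers:
        queries += 1
        rows = conn.execute(
            "SELECT total FROM orders WHERE customer_id = ?", (customer_id,)
        ).fetchall()
        # Every order row is transferred, then most are discarded here.
        results += [(name, total) for (total,) in rows if total >= min_total]
    return sorted(results), queries


def large_orders_single_query(min_total):
    """One roundtrip: the database joins and filters, returning only matching rows."""
    rows = conn.execute(
        "SELECT c.name, o.total FROM orders o "
        "JOIN customers c ON c.id = o.customer_id "
        "WHERE o.total >= ?",
        (min_total,),
    ).fetchall()
    return sorted(rows), 1


slow, slow_queries = large_orders_n_plus_one(100.0)
fast, fast_queries = large_orders_single_query(100.0)
print(f"N+1 version: {slow_queries} queries; single-query version: {fast_queries} query")
```

Both functions return the same rows. The difference is the number of roundtrips and the amount of data moved, which grows with the number of customers in the N+1 version.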

For a deeper look into these and many other recommendations, see the eBook on performance and scalability that my colleagues and I wrote. We encourage you to take a look.

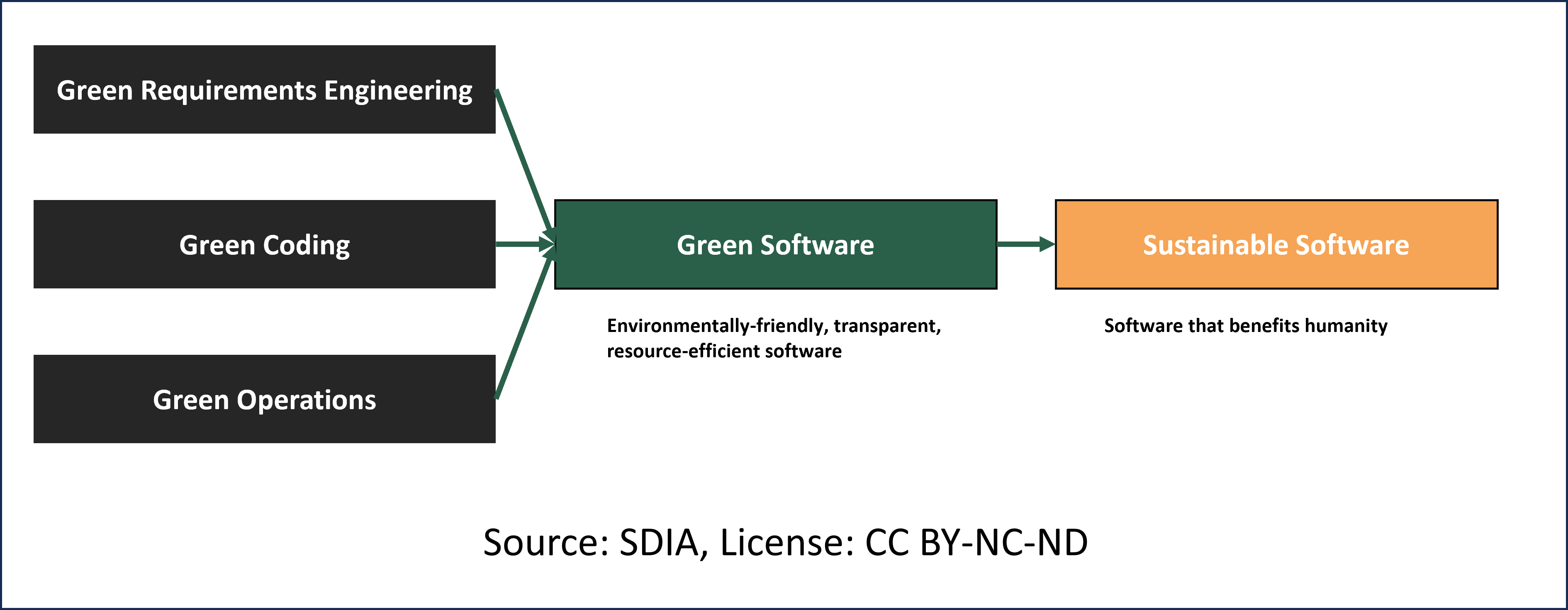

The sustainability community now refers to these best practices as “green coding.” In conjunction with green operations and green requirements engineering, green coding results in green software.

Dynatrace Carbon Impact app

The missing piece of the puzzle, at least for Level 2 (hosts and containers) and Level 3 (green coding), is granular and actionable visibility. Cloud providers offer coarse carbon footprint tools that are designed for compliance reporting, not optimization. And data-observability solutions focus on performance, not carbon emissions.

In January 2023, Dynatrace released the Carbon Impact app, adding carbon emissions and energy consumption metrics to observability data. Dynatrace Smartscape® automatically adds real-time dependency and architectural context, from hosts to processes to software services. Carbon Impact can support your Level 2 and Level 3 optimization initiatives with the granularity and insights needed to reduce your carbon footprint without sacrificing your availability, performance, customer experience, and cost containment goals.

Want to learn more? Watch this Carbon Impact Observability Clinic recording to see Carbon Impact in action.
