Have you ever encountered queries delayed by flow control but found no lagged secondaries? This article shows one possible scenario that explains why this happens.

Replica sets provide high availability and redundancy to MongoDB clusters. There is always one primary node that accepts writes, but replica set topologies vary depending on the use case and scale. A typical minimal replica set consists of a primary plus either two secondaries (PSS) or one secondary and one arbiter (PSA). The idea is that if one node goes down, the remaining two members still form a majority and can elect a new primary.

Replica Set configuration problem

Successful failover does not mean the replica set is fully available, though!

This is clearly visible in the PSA topology when the write concern is set to “majority”. As soon as one data-bearing node goes down, the replica set still elects a primary and keeps operating, but any write with write concern “majority” blocks until data redundancy is restored (or returns a write concern error once wtimeout expires, if one is set).
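The arithmetic behind this stall fits in a few lines of JavaScript, the language of the mongo shell. This is a minimal model, not server code: the function names are hypothetical, and it assumes the documented rule that the “majority” for write concern is the smaller of the majority of all voting members (arbiters included) and the number of data-bearing voting members.

```javascript
// Hypothetical helper modelling the documented rule for w: "majority":
// the smaller of (majority of all voting members, arbiters included)
// and (number of data-bearing voting members).
function writeMajority(votingMembers, votingArbiters) {
  const majorityOfVoters = Math.floor(votingMembers / 2) + 1;
  return Math.min(majorityOfVoters, votingMembers - votingArbiters);
}

// Arbiters hold no data, so only healthy data-bearing members can acknowledge.
function majorityWriteCanComplete(votingMembers, votingArbiters, healthyDataBearing) {
  return healthyDataBearing >= writeMajority(votingMembers, votingArbiters);
}

// PSA: three voting members, one of them an arbiter.
console.log(writeMajority(3, 1));                // 2
console.log(majorityWriteCanComplete(3, 1, 2));  // true  (primary and secondary up)
console.log(majorityWriteCanComplete(3, 1, 1));  // false (secondary down: w: "majority" stalls)
```

For a set of six data-bearing members plus one arbiter, all voting, the same rule gives `writeMajority(7, 1) === 4`.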

What MongoDB administrators sometimes overlook is that even after automatic failover and a successful election, the unavailable members are still part of the replica set and are still counted by its internal algorithms. Replica sets also often span more than one data center. To avoid write concern issues when one data center goes offline, you may use a custom write concern setting that ensures durability in at least one DC.

Let’s consider a replica set of six data-bearing nodes plus one arbiter. When the primary DC goes down, we may end up with a situation like this after the election:

```
mongo> rs.status().members.map(function(m) { return {'name':m.name, 'stateStr':m.stateStr} })
[
  { name: 'localhost:27137', stateStr: '(not reachable/healthy)' },
  { name: 'localhost:27138', stateStr: '(not reachable/healthy)' },
  { name: 'localhost:27139', stateStr: '(not reachable/healthy)' },
  { name: 'localhost:27140', stateStr: 'SECONDARY' },
  { name: 'localhost:27141', stateStr: 'PRIMARY' },
  { name: 'localhost:27142', stateStr: 'ARBITER' },
  { name: 'localhost:27143', stateStr: 'SECONDARY' }
]
```

A voting majority (four of the seven members) is preserved, so the replica set is available. Also, to avoid stalls, we set the cluster-wide default write concern to w: 2:

```
mongo> db.adminCommand({getDefaultRWConcern: 1}).defaultWriteConcern
{ w: 2, wtimeout: 500 }
```
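Both checks can be made explicit with a small sketch that evaluates the member list shown earlier. It assumes every member, including the arbiter, has the default `votes: 1`, and it treats only PRIMARY and SECONDARY members as able to acknowledge writes; the variable names are illustrative.

```javascript
// States copied from the rs.status() output; votes: 1 per member is an assumption.
const members = [
  { name: "localhost:27137", stateStr: "(not reachable/healthy)" },
  { name: "localhost:27138", stateStr: "(not reachable/healthy)" },
  { name: "localhost:27139", stateStr: "(not reachable/healthy)" },
  { name: "localhost:27140", stateStr: "SECONDARY" },
  { name: "localhost:27141", stateStr: "PRIMARY" },
  { name: "localhost:27142", stateStr: "ARBITER" },
  { name: "localhost:27143", stateStr: "SECONDARY" },
];

const healthy = members.filter((m) => m.stateStr !== "(not reachable/healthy)");

// Data-bearing members that can acknowledge a write (arbiters cannot).
const healthyDataBearing = healthy.filter(
  (m) => m.stateStr === "PRIMARY" || m.stateStr === "SECONDARY"
);

const electionMajority = Math.floor(members.length / 2) + 1; // 4 of 7

console.log(healthy.length >= electionMajority); // true: a primary can be elected
console.log(healthyDataBearing.length >= 2);     // true: w: 2 writes are acknowledged
```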

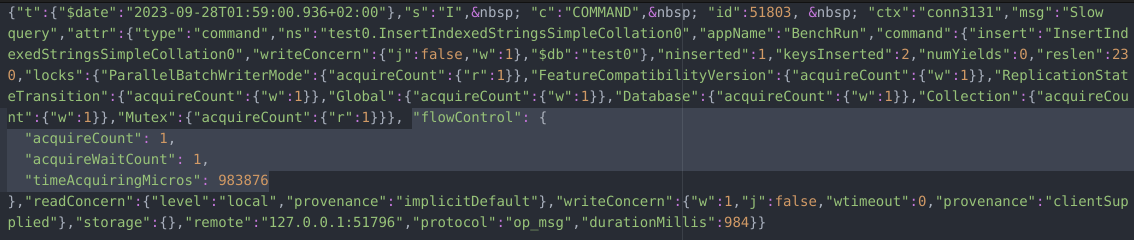

Everything seems to work fine; writes are accepted by the cluster. However, after some time (not immediately!), we spot slow queries like this:

We check the replication lag, but the available secondaries show none:

```
mongo> rs.printSecondaryReplicationInfo()
source: localhost:27137
{ 'no replication info, yet. State': '(not reachable/healthy)' }
---
source: localhost:27138
{ 'no replication info, yet. State': '(not reachable/healthy)' }
---
source: localhost:27139
{ 'no replication info, yet. State': '(not reachable/healthy)' }
---
source: localhost:27140
{
  syncedTo: 'Thu Sep 28 2023 01:59:53 GMT+0200 (Central European Summer Time)',
  replLag: '0 secs (0 hrs) behind the primary '
}
---
source: localhost:27143
{
  syncedTo: 'Thu Sep 28 2023 01:59:53 GMT+0200 (Central European Summer Time)',
  replLag: '0 secs (0 hrs) behind the primary '
}
```
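This view shows no lag because a per-secondary lag report only compares reachable secondaries with the primary. The sketch below is a hypothetical simplification (optimes as plain seconds, invented values), not how the shell helper is implemented.

```javascript
// Hypothetical model of a per-secondary lag report: each reachable
// secondary is compared with the primary's last optime; unreachable
// members have no data and therefore no reported lag.
function secondaryLagReport(primaryOptimeSecs, secondaries) {
  return secondaries.map((s) => ({
    name: s.name,
    lagSecs: s.reachable ? primaryOptimeSecs - s.optimeSecs : null,
  }));
}

const report = secondaryLagReport(5000, [
  { name: "localhost:27137", reachable: false, optimeSecs: 1000 },
  { name: "localhost:27140", reachable: true, optimeSecs: 5000 },
  { name: "localhost:27143", reachable: true, optimeSecs: 5000 },
]);

// Reachable secondaries report 0 s of lag; the unreachable member's
// 4000 s gap never appears as "lag" in this kind of report.
console.log(report);
```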

So, why does flow control start to throttle writes?

```
mongo> db.runCommand({ serverStatus: 1}).flowControl
{
  enabled: true,
  targetRateLimit: 100,
  timeAcquiringMicros: Long("11525"),
  locksPerKiloOp: 81847,
  sustainerRate: 0,
  isLagged: true,
  isLaggedCount: 2,
  isLaggedTimeMicros: Long("14783002787")
}
```
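Decoding a few of these fields makes the picture clearer. The values are copied from the output above, with `Long("...")` written as plain numbers. The interpretation of `sustainerRate` in the comment is an assumption and should be double-checked against the serverStatus documentation.

```javascript
// Values copied from serverStatus().flowControl; Long("...") shown as plain numbers.
const flowControl = {
  enabled: true,
  targetRateLimit: 100,
  timeAcquiringMicros: 11525,
  locksPerKiloOp: 81847,
  sustainerRate: 0,
  isLagged: true,
  isLaggedCount: 2,
  isLaggedTimeMicros: 14783002787,
};

// Total time the primary has considered itself lagged, in hours.
const laggedHours = flowControl.isLaggedTimeMicros / 1e6 / 3600;
console.log(laggedHours.toFixed(1)); // "4.1"

// Assumed reading: sustainerRate estimates how fast the member maintaining
// the majority commit point applies operations, so 0 suggests that the
// commit point is not moving at all.
console.log(flowControl.isLagged && flowControl.sustainerRate === 0); // true
```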

Flow control throttles here because its aim is to keep the “majority commit point lag” under the target defined by flowControlTargetLagSeconds. In other words, this mechanism tries to keep the replication lag of a majority of data-bearing nodes under the target. When the primary cannot reach the required number of data-bearing members, the majority commit point cannot advance, so the primary must treat the lag as above the target. It then throttles writes by limiting the number of tickets (targetRateLimit) that writes must acquire before taking the locks needed to apply them.
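A simplified model of the “lagged” check shows why a frozen commit point eventually engages flow control. It assumes the lag is the primary's last applied wall time minus the majority commit point's wall time, and that throttling starts once that lag exceeds a fraction of flowControlTargetLagSeconds (default 10). The 0.5 fraction is assumed to mirror the internal flowControlThresholdLagPercentage parameter; treat it, and the function name, as assumptions.

```javascript
// Hypothetical, simplified model of when flow control considers the primary lagged.
function isFlowControlLagged(
  lastAppliedSecs,             // wall time of the primary's newest write
  majorityCommitSecs,          // wall time of the majority commit point
  targetLagSeconds = 10,       // flowControlTargetLagSeconds default
  thresholdLagPercentage = 0.5 // assumed engage threshold (fraction of target)
) {
  const commitPointLag = lastAppliedSecs - majorityCommitSecs;
  return commitPointLag > targetLagSeconds * thresholdLagPercentage;
}

// Healthy set: the commit point trails the primary by about a second.
console.log(isFlowControlLagged(5000, 4999)); // false

// Majority unreachable: the commit point is frozen at t = 1000 while writes continue.
console.log(isFlowControlLagged(5000, 1000)); // true
```

Whatever the exact threshold, a commit point that cannot advance makes the measured lag grow without bound, so the check eventually returns true.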

The majority that must be reached for the commit point to advance (and thus to avoid flow control) is:

```
mongo> rs.status().writeMajorityCount
4
```

But only three data-bearing nodes (the primary and two secondaries) are up in our example.
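The gap between 4 and 3 matters because, in a simplified model, the majority commit point is the newest optime that at least writeMajorityCount data-bearing members have reached. This sketch assumes optimes are plain seconds and uses invented values; the real server tracks OpTime objects and per-member durability, and the function name is hypothetical.

```javascript
// Hypothetical, simplified model: the majority commit point is the newest
// optime reached by at least `writeMajorityCount` data-bearing members.
function majorityCommitPoint(dataBearingOptimes, writeMajorityCount) {
  if (dataBearingOptimes.length < writeMajorityCount) return null; // can never commit
  const newestFirst = [...dataBearingOptimes].sort((a, b) => b - a);
  return newestFirst[writeMajorityCount - 1];
}

// Six data-bearing members: three were last heard from at t = 1000 (DC outage);
// the primary and the two reachable secondaries are all at t = 5000.
const optimes = [1000, 1000, 1000, 5000, 5000, 5000];

console.log(majorityCommitPoint(optimes, 4)); // 1000: stuck at the outage time
console.log(majorityCommitPoint(optimes, 3)); // 5000: would advance if only 3 were needed
```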

Read concern vs. write concern vs. flow control

While investigating the real customer case that exhibited this problem, I found some of the documentation on how flow control relates to read concern hard to follow. Examples of confusing passages can be found at:

https://jira.mongodb.org/browse/SERVER-39667

https://www.mongodb.com/docs/manual/tutorial/troubleshoot-replica-sets/#flow-control

I requested clarification at: https://jira.mongodb.org/browse/DOCS-15223

Conclusion

Having a replica set with a primary does not necessarily mean the cluster can handle the traffic as expected. Avoid leaving failed members in the replica set configuration. Not only can the situation described above happen, but the failed members also still count toward the voting majority, so one more failure can leave the set without a primary. Not to mention the overhead of constant attempts to reconnect to the missing nodes.

Therefore, when a node goes down, either remove it with the rs.remove() helper or, if the downtime is expected to be short (for example, planned maintenance), consider at least disabling flow control. You can do it online, on the primary, with:

```
db.adminCommand( { setParameter: 1, enableFlowControl: false } )
db.adminCommand( { getParameter: 1, enableFlowControl: 1 } )  // verify: should now report false
```
